2010-07-01

Abstract

With 23 full anti-spam solutions on the test bench, this month’s VBSpam comparative review is the largest to date, with a record 22 products achieving certification. Martijn Grooten has the details.

Copyright © 2010 Virus Bulletin

With 23 full anti-spam solutions on the test bench, this month’s VBSpam comparative review is the largest to date, and the number of products achieving a VBSpam award also exceeds all previous records. It is certainly good to see that there are so many decent solutions on offer to fight the ever-present problem of spam, but what pleased me the most in this month’s test was the record number of products that had no false positives.

The problem of false positives in spam filtering is regularly played down. After all, unlike anti-malware false positives, a missed legitimate email does no harm to a computer network or to an end-user’s PC. However, this also means that false positives frequently go unnoticed – they may disappear among the vast amount of real spam blocked by a filter, so that neither the customer nor the vendor realizes the extent of the problem.

This is why false positives play a significant role in the VBSpam tests and why we give extra weight to the false positive score. In the calculation of a product’s final score, the false positive rate is weighted three times as heavily as the spam catch rate; because the ham corpus is also far smaller than the spam corpus, a single false positive is considered as undesirable as over 200 false negatives. It is also why we are continuously trying to improve the quantity and quality of the legitimate emails used in the test.
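The ‘over 200 false negatives’ figure follows from the threefold weighting combined with the corpus sizes of this test (173,635 spam messages and 2,502 ham messages). The sketch below is a minimal illustration, not VB’s own scoring code, and its function names are hypothetical; it works out how many final-score points one false positive and one false negative each cost.

```python
# Corpus sizes for this test, as given in this review.
SPAM_TOTAL = 173_635
HAM_TOTAL = 2_502
FP_WEIGHT = 3  # the FP rate is weighted three times as heavily as the SC rate


def points_lost_per_fp() -> float:
    """Final-score points (percentage points) lost for one false positive."""
    return FP_WEIGHT * 100 / HAM_TOTAL


def points_lost_per_fn() -> float:
    """Final-score points lost for one missed spam message (false negative)."""
    return 100 / SPAM_TOTAL


def fn_equivalent_of_one_fp() -> float:
    """How many false negatives cost as much as a single false positive."""
    return points_lost_per_fp() / points_lost_per_fn()


print(f"One FP costs {points_lost_per_fp():.4f} points")
print(f"One FP is worth about {fn_equivalent_of_one_fp():.0f} false negatives")
```

With these corpus sizes, one false positive costs roughly 0.12 points of final score, about as much as 208 false negatives.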

The test methodology has not been changed since the previous test; readers are advised to read the methodology at http://www.virusbtn.com/vbspam/methodology/ or to refer to previous reviews for more details. Email is still sent to the products in parallel and in real-time, and products have been given the option to block email pre-DATA. Once again, three products chose to make use of this.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200 with a 3.0GHz dual-core processor and 4GB of RAM. The Linux products ran on SUSE Linux Enterprise Server 11; the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor. (It should be noted that most products run on several different operating systems.)

To compare the products, we calculate a ‘final score’, defined as the spam catch (SC) rate minus three times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 96:

SC − (3 × FP) ≥ 96
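As a minimal sketch of the certification rule, the Python below computes the final score from the two published rates. It uses `Decimal` so that a product sitting exactly on the threshold is not pushed just below it by binary floating-point rounding. The function names are hypothetical; the sample figures are taken from the individual product results later in this review.

```python
from decimal import Decimal

FP_WEIGHT = 3
THRESHOLD = Decimal("96")


def final_score(sc_rate: str, fp_rate: str) -> Decimal:
    """Final score = SC - (3 x FP), with both rates given as percentages."""
    return Decimal(sc_rate) - FP_WEIGHT * Decimal(fp_rate)


def is_certified(sc_rate: str, fp_rate: str) -> bool:
    """A product earns VBSpam certification if its final score is at least 96."""
    return final_score(sc_rate, fp_rate) >= THRESHOLD


# (SC rate, FP rate) pairs as published in this review.
examples = {
    "AnubisNetworks": ("99.78", "0.16"),
    "M+Guardian": ("98.60", "1.20"),
    "Sunbelt VIPRE": ("98.28", "0.76"),
}

for name, (sc, fp) in examples.items():
    print(f"{name}: {final_score(sc, fp)} certified={is_certified(sc, fp)}")
```

This gives final scores of 99.30, 95.00 and 96.00 respectively: Sunbelt’s product qualifies exactly at the threshold, while M+Guardian misses out.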

The test ran from midnight BST on 11 June 2010 until 8:00am BST on 28 June 2010. During this two-and-a-half-week period, products were required to filter 176,137 emails, 173,635 of which were spam and 2,502 of which were ham. The spam was provided by Project Honey Pot, while the ham consisted of the traffic to a number of email discussion lists; for details of how some of these messages were modified to make them appear to have been sent directly to us by the original sender, readers should consult the previous review (see VB, May 2010, p.24). These legitimate emails were written in a number of different languages and character sets.
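Because the ham corpus is fixed at 2,502 messages, the false positive counts mentioned in the product write-ups map directly onto the FP rates reported alongside them. A small sketch of the conversion (the helper name is hypothetical):

```python
HAM_TOTAL = 2_502  # legitimate emails in this test


def fp_rate(false_positives: int, ham_total: int = HAM_TOTAL) -> float:
    """FP rate as a percentage of all ham, rounded to two decimals as in the review."""
    return round(100 * false_positives / ham_total, 2)


for count in (0, 1, 3, 4, 9):
    print(count, "FP ->", fp_rate(count), "%")
```

One false positive corresponds to 0.04%, three to 0.12%, four to 0.16% and nine to 0.36%.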

In the last test, products’ performance on the ‘VB corpus’ (consisting of legitimate email and spam sent to @virusbtn.com addresses) was included for comparison with earlier reviews. However, the numerous downsides of having our own legitimate email sent to two dozen products easily outweighed the extra information this provided, and as a result we have decided no longer to include the VB corpus. Now is a good moment to thank my colleagues for the many hours they have spent on the tedious task of manually classifying all their email into ‘ham’ and ‘spam’.

The daily variation in the amount of spam sent through the products reflects the variation in spam received by Project Honey Pot, which in turn reflects the variation in spam sent worldwide. However, we are able to dictate what percentage of the spam we receive from Project Honey Pot is sent through the products; this explains the smaller size of the spam corpus compared to that of the previous test.

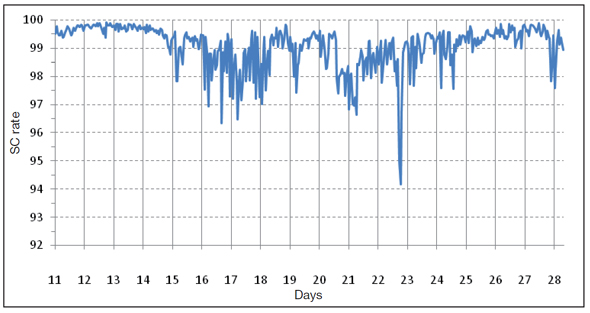

What we cannot influence is the kind of spam sent through the products – this reflects the real-world situation, with new spam campaigns occurring here and botnets being taken down there. Figure 1 shows the average product’s spam catch rate for every hour of the test. It indicates that the easier-to-filter spam was sent during the first few days of the test, while the spam sent during the second week presented the most problems for the products.

Figure 1. Average product’s spam catch rate for every hour of the test. (In computing the average spam catch rate for each hour, the best-performing and worst-performing products during that hour were excluded. This prevents the averages from being skewed by a problem that a single product may have had during that time.)
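The averaging described in the caption amounts to a trimmed mean over the products for each hour. The sketch below assumes that exactly one best and one worst product are dropped per hour (ties are broken arbitrarily, which does not change the result); the function name and the product data are hypothetical.

```python
from statistics import mean


def trimmed_hourly_average(rates_by_product: dict[str, float]) -> float:
    """Average SC rate for one hour, excluding the single best and worst product."""
    if len(rates_by_product) < 3:
        raise ValueError("need at least three products to drop the best and worst")
    ordered = sorted(rates_by_product.values())
    return mean(ordered[1:-1])  # drop the lowest and the highest rate


# Hypothetical hour in which product D has a temporary problem.
hour = {"A": 99.9, "B": 98.0, "C": 97.0, "D": 60.0}
print(trimmed_hourly_average(hour))
```

Here the average is taken over products B and C only, so product D’s outage does not drag the hourly figure down.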

Using these hourly spam catch rates, we have also computed the standard deviation of each product’s hourly spam catch rates; these numbers are included in the results table. The standard deviation is probably of little interest to potential customers; it is, however, interesting for researchers and, especially, developers. When developers want to improve their product’s spam catch rate, they want to know whether it simply misses a more or less constant percentage of spam (indicated by a low standard deviation) or whether it has good and bad periods (indicated by a high standard deviation), which may suggest a slow response to new spam campaigns. (Note: the ‘averages’ used in the calculation of the standard deviations are the averages of the hourly spam catch rates. Because the amount of spam varies from hour to hour, these are approximately, but not necessarily exactly, equal to the overall spam catch rate.)
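A minimal sketch of these per-product statistics, assuming hourly counts of spam received and caught are available for each product. It uses the population standard deviation (`statistics.pstdev`); whether the review used the population or the sample form is not stated, so that choice is an assumption, as are the function names and the data.

```python
from statistics import mean, pstdev


def hourly_rates(caught: list[int], received: list[int]) -> list[float]:
    """Spam catch rate (%) for each hour that contained any spam."""
    return [100 * c / r for c, r in zip(caught, received) if r > 0]


def summarize(caught: list[int], received: list[int]) -> dict[str, float]:
    """Mean and spread of the hourly rates, plus the overall catch rate."""
    rates = hourly_rates(caught, received)
    return {
        "mean_of_hourly_rates": mean(rates),
        "std_dev": pstdev(rates),
        "overall_rate": 100 * sum(caught) / sum(received),
    }


# Hypothetical three-hour sample; the third hour carries less spam.
received = [1000, 1000, 200]
steady = summarize([990, 990, 198], received)  # misses a constant 1% every hour
bursty = summarize([999, 995, 150], received)  # struggles badly in the third hour
print(steady)
print(bursty)
```

The steady product has a standard deviation of zero. The bursty one has a standard deviation of roughly 11.6 points, and its mean of hourly rates (about 91.5%) sits well below its overall catch rate (about 97.5%), because its bad hour carried less spam.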

SC rate: 99.78%

SC rate (image spam): 99.67%

SC rate (large spam): 99.28%

FP rate: 0.16%

Final score: 99.30

AnubisNetworks is a small Lisbon-based company that offers a number of anti-spam solutions, ranging from hardware appliances to a hosted solution; we tested the latter. All of the company’s products use its in-house anti-spam technology, which is built around a fingerprinting technology and an IP reputation system. I found the product’s web interface very easy to use and, had I needed to, I would have been able to make a large number of adjustments so as to customize the product to my needs.

I would, however, have had little reason to do so. The product debuted with the third highest spam catch rate overall and, with only four false positives, achieved a final score of well over 99: an excellent performance that earns the product its first VBSpam award.

SC rate: 99.55%

SC rate (image spam): 99.89%

SC rate (large spam): 99.69%

FP rate: 0.00%

Final score: 99.55

In the last test, BitDefender combined a very good spam catch rate with just three false positives – which the developers considered to be three too many. They will thus be pleased to know that there were no false positives this time, while the spam catch rate was unchanged. With one of the highest final scores once again, the Romanian product wins its eighth consecutive VBSpam award.

SC rate: 99.08%

SC rate (image spam): 97.99%

SC rate (large spam): 96.02%

FP rate: 0.00%

Final score: 99.08

Berlin-based eleven is the largest email security provider in Germany. That the company doesn’t call itself an ‘anti-spam’ vendor is no coincidence: its eXpurgate products classify emails into 16 categories, and the absolute avoidance of false positives is one of its highest priorities. It attempts to achieve this by correlating the volumes of individual message fingerprints, not just those of the sending systems.

Of the various solutions the company offers (including software and virtual hardware), we tested a hosted solution: eXpurgate Managed Service 3.2. It was set up easily and there was no need to make any changes. The product caught over 99% of all spam but, in line with the company’s philosophy, its developers will be more pleased with the fact that no legitimate email was incorrectly filtered. This impressive debut more than deserves a VBSpam award.

SC rate: 97.81%

SC rate (image spam): 96.52%

SC rate (large spam): 96.91%

FP rate: 0.00%

Final score: 97.81

This test sees Fortinet’s FortiMail appliance win its seventh consecutive VBSpam award, but this is the first time it has achieved an award with no false positives. The product’s customers can be confident that there is little chance of legitimate mail being blocked.

SC rate: 98.10%

SC rate (image spam): 96.93%

SC rate (large spam): 97.94%

FP rate: 0.16%

Final score: 97.62

After a run of products without any false positives, four incorrectly classified legitimate emails will be a reminder for Kaspersky’s developers that this is an area that should not be forgotten. However, a decent spam catch rate ensures that the final score is sufficient to earn the security giant its sixth VBSpam award.

SC rate: 99.97%

SC rate (image spam): 99.89%

SC rate (large spam): 99.78%

SC rate pre-DATA: 98.24%

FP rate: 0.12%

Final score: 99.61

Esva’s impressive debut in the last test may have come as a surprise to many who had not heard of the Italian company – the product blocked more spam than any other solution in the test. This month Esva proves its last performance wasn’t a one-off by once again producing the highest spam catch rate in the test. A reduction in the number of false positives – of which there were only three this time – gives the product the third best final score and a well deserved VBSpam award.

SC rate: 99.62%

SC rate (image spam): 100.00%

SC rate (large spam): 100.00%

FP rate: 0.04%

Final score: 99.50

Since first entering our tests, M86’s MailMarshal has achieved four VBSpam awards in a row. This month it still managed to improve on previous scores: both the product’s spam catch rate and its false positive rate improved significantly, which should make M86’s developers extra proud of the product’s fifth VBSpam award.

SC rate: 99.46%

SC rate (image spam): 99.65%

SC rate (large spam): 99.64%

FP rate: 0.04%

Final score: 99.34

McAfee’s Email Gateway appliance was one of a few products that, in the last test, had a relatively hard time correctly classifying legitimate email written in foreign character sets. While this was not enough to deny the product a VBSpam award, there was definitely some room for improvement.

The developers have obviously been hard at work since then, and in this month’s test there was just a single false positive; the product’s spam catch rate improved too. The product’s sixth consecutive VBSpam award is well deserved.

SC rate: 96.52%

SC rate (image spam): 94.27%

SC rate (large spam): 93.02%

FP rate: 0.04%

Final score: 96.40

McAfee’s developers will probably be a little disappointed by the performance this month from the Email and Web Security Appliance: its spam catch rate was rather low for several days. No doubt the developers will scrutinize the appliance’s settings and try to find the root cause of this problem. However, a low false positive rate was enough to tip the final score over the threshold, earning the product a VBSpam award.

SC rate: 99.45%

SC rate (image spam): 99.16%

SC rate (large spam): 99.15%

FP rate: 0.08%

Final score: 99.21

After six consecutive VBSpam awards, MessageStream missed out on winning one for the first time in the last test; the product had a very hard time coping with legitimate Russian email. However, the developers took our feedback seriously, and since the last test have made some improvements to the hosted solution. Their hard work has been rewarded this month with just two false positives, a decent spam catch rate and a seventh VBSpam award for their efforts.

SC rate: 98.60%

SC rate (image spam): 99.67%

SC rate (large spam): 85.23%

FP rate: 1.20%

Final score: 95.00

The M+Guardian appliance had been absent from our tests for several months, but having worked hard on a new version of the product, its developers decided it was time to re-submit it. I was pleasantly surprised by the intuitive user interface, which enables a system administrator to configure various settings to fine-tune the appliance. Unfortunately, a large number of false positives mean that M+Guardian misses out on a VBSpam award this time.

SC rate: 99.96%

SC rate (image spam): 100.00%

SC rate (large spam): 100.00%

FP rate: 0.00%

Final score: 99.96

With the second best spam catch rate overall and just a handful of false positives on the last occasion, Microsoft’s Forefront Protection 2010 for Exchange Server seemed unlikely to improve on its past performance in this test. However, the product managed to do just that: a stunning spam catch rate of 99.96% combined with a total lack of false positives not only wins the product its sixth consecutive VBSpam award, but also gives it the highest final score for the third time in a row.

SC rate: 98.14%

SC rate (image spam): 99.02%

SC rate (large spam): 93.96%

FP rate: 0.04%

Final score: 98.02

Vircom’s modusGate product re-joined the tests in May, when a few days of over-zealous filtering of Russian email caused too many false positives to win a VBSpam award. The developers made sure that this wouldn’t happen again and, indeed, a single false positive was nothing but a barely visible stain on a decent spam catch rate. A VBSpam award is more than deserved.

SC rate: 97.76%

SC rate (image spam): 99.10%

SC rate (large spam): 94.85%

FP rate: 0.04%

Final score: 97.64

Pro-Mail, developed by Prolocation, is offered as a hosted solution but is also available to ISPs as a private-label or co-branded solution for their customers. What I found interesting about the product is that the results of its filtering are also used to improve the SURBL URI blacklist (some of Pro-Mail’s developers are involved in the SURBL project), thus helping many spam filters to detect spam by the URLs mentioned in email bodies.

In this test, of course, we focused on Pro-Mail’s own filtering capabilities, which were rather good. True, the spam catch rate could be improved upon, but a single false positive suggests that this might simply be a matter of adjusting the filtering threshold. A VBSpam award was easily earned.

SC rate: 99.65%

SC rate (image spam): 99.65%

SC rate (large spam): 99.24%

FP rate: 0.04%

Final score: 99.53

After two tests and as many decent performances, Sophos’s developers still found things to improve upon in their appliance. Indeed, the number of false positives was reduced from five in the last test to just one this time, while the spam catch rate remained almost the same; one of the highest final scores wins the product its third VBSpam award.

SC rate: 98.26%

SC rate (image spam): 98.94%

SC rate (large spam): 97.27%

FP rate: 0.36%

Final score: 97.18

With nine false positives, the filtering of legitimate mail is an area that SPAMfighter’s developers still need to focus on. On the positive side, however, the FP rate has decreased slightly since the last test, while the spam catch rate saw a small increase. A fifth VBSpam award will be welcomed in the company’s Copenhagen headquarters.

SC rate: 99.57%

SC rate (image spam): 99.92%

SC rate (large spam): 99.37%

FP rate: 0.20%

Final score: 98.97

Unlike most other products, SpamTitan had few problems with the ‘new ham’ that was introduced in the previous test. Rather, the virtual solution had set its filtering threshold to be so relaxed that the developers were a little disappointed by the relatively low spam catch rate. They adjusted it slightly this time and, while there were a few more false positives, a significantly higher spam catch rate means the product wins its fifth VBSpam award with an improved final score.

SC rate: 98.28%

SC rate (image spam): 98.05%

SC rate (large spam): 96.69%

FP rate: 0.76%

Final score: 96.00

Sunbelt’s VIPRE anti-spam solution failed to win a VBSpam award in the previous test because of a high false positive rate. A new version of the product was expected to make a difference – which it did, although only just enough to push the final score over the VBSpam threshold.

SC rate: 99.54%

SC rate (image spam): 99.67%

SC rate (large spam): 99.19%

FP rate: 0.04%

Final score: 99.42

Despite the fact that it was one of the top performers in the previous test, Symantec’s Brightmail virtual appliance still managed to see a tiny improvement to its spam catch rate, while its false positive rate was reduced to just one missed legitimate email. Yet another very high final score wins the product its fourth consecutive VBSpam award.

SC rate: 99.68%

SC rate (image spam): 99.78%

SC rate (large spam): 99.28%

SC rate pre-DATA: 99.00%

FP rate: 0.00%

Final score: 99.68

The people at The Email Laundry were happy with their product’s debut in the last test – in particular with its high spam catch rate – but they believed the false positive rate could be improved upon. This test’s results show they were right: some small tweaks resulted in a zero false positive score, while hardly compromising on the spam catch rate (and not at all on the pre-DATA catch rate). Knowing that it has the second highest final score in the test will make The Email Laundry’s VBSpam award shine even more brightly.

SC rate: 98.56%

SC rate (image spam): 99.67%

SC rate (large spam): 95.88%

FP rate: 0.28%

Final score: 97.72

Vade Retro’s hosted solution won a VBSpam award on its debut in the last test and repeats the achievement in this test. Both the spam catch rate and the false positive rate were a little less impressive this time around, so there is some room for improvement, but for a vendor that is so focused on R&D this will be seen as a good challenge.

SC rate: 98.78%

SC rate (image spam): 99.05%

SC rate (large spam): 98.25%

FP rate: 0.00%

Final score: 98.78

Vamsoft’s ORF, which debuted in the last test, was then one of only two full solutions to avoid false positives altogether; it is the only one of those two to repeat the feat this time – an excellent performance, particularly as this is combined with a decent spam catch rate. Another VBSpam award is well deserved.

SC rate: 98.36%

SC rate (image spam): 99.43%

SC rate (large spam): 98.97%

FP rate: 0.12%

Final score: 98.00

The effect of a higher standard deviation of the hourly spam catch rate may be most clearly visible in Webroot’s results. The hosted solution caught well over 99% of the spam on most days, but had a hard time with apparently more difficult spam sent during the middle of the test. Still, the overall spam catch rate, combined with just three false positives, is high enough to easily win the product its seventh VBSpam award.

SC rate: 98.57%

SC rate (image spam): 98.67%

SC rate (large spam): 97.76%

SC rate pre-DATA: 98.02%

FP rate: 0.00%

Final score: 98.57

Spamhaus’s IP blacklists have been helping spam filters for many years now and the recently added domain blacklist DBL is seeing increasing use as well. A fourth consecutive decent performance – and yet another without false positives – demonstrates that Spamhaus is a valuable addition to any filter.

The previous test saw several changes – in particular to the ham corpus – that caused problems for a number of products. It is good to see that the developers have acted on the feedback from the last test and that as a result many products have shown an improved performance in this test.

We too are continuously working on making improvements to the test set-up. In particular, we are looking at increasing the size of the ham corpus, and we also expect to include a second spam corpus in the tests in the near future.

The next test is set to run throughout August; the deadline for product submission is 16 July 2010. Any developers interested in submitting a product should email [email protected].

Following publication of this review, careful investigation of the results brought to light some errors in the calculation of the figures. The revised figures are available in PDF format. (There was no change in the VBSpam certifications awarded.)