2012-02-24

Abstract

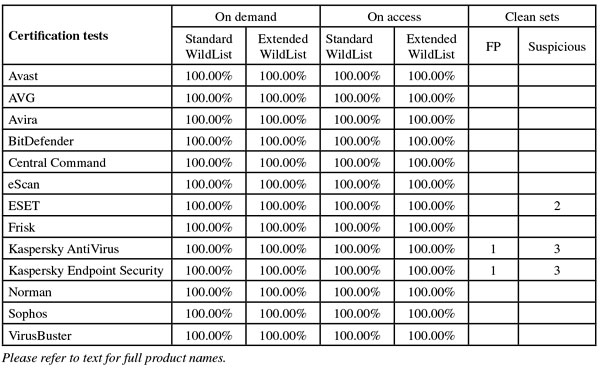

On this occasion, the VB100's annual visit to the Linux platform almost resulted in a clean sweep of passes - just a single file tripping up two products to prevent a full house. John Hawes has all the details.

Copyright © 2012 Virus Bulletin

The annual Linux test usually affords the VB lab team some respite from the non-stop VB100 test schedule – with a far smaller range of solutions on offer, and greater opportunities for automation of tests, the Linux comparative generally requires considerably less time and effort than the Windows tests that make up the bulk of the year’s work. However, with some major changes to our testing procedures introduced in the last comparative, things were likely to take rather longer this year – and with a fairly full test bench, a relatively new and unfamiliar Linux distribution, and a late start thanks to the new year, there was little time to rest up.

The platform for this test was Red Hat Enterprise Linux. The latest version, 6.2, was released some months before the test began, but for several of the participants the fact that we would be using the latest version came as something of a surprise (perhaps not helped by a lack of specific information in the initial announcements). The platform itself was fairly simple to set up, with a pleasantly slick and efficient install process. However, this did seem to be lacking in some areas, notably the disk partitioning arrangements which were completely unable to cope with NTFS partitions on our test systems. Some of these were scrapped and replaced with the latest EXT4 file systems, while some spare partitions were left as they were, and thus remained invisible throughout the test.

Defaults were used throughout the set-up, with some additional software selections including development tools that were likely to be needed for compiling some of the on-access components. Additional dependencies would be resolved as needed on a per-product basis, to allow us to monitor what extra items might be required.

Previous experience with Linux tests has taught us to expect three main approaches to the on-access tests, with most products either protecting the entire system with the open-source dazuko file access hooking system, or only covering Samba shares using VFS objects, while a few would doubtless use their own proprietary approaches. An additional system was set up with Windows XP SP3, to use as a client for the on-access tests, and file shares on all the test servers were connected to this machine.
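For the Samba-only approach, the manual step usually amounts to naming a scanner module in the `vfs objects` parameter of each protected share in the Samba configuration file. The following is a minimal sketch of that edit and not any vendor's actual installer: the module name `example_scanner`, the share name and the helper `add_vfs_object` are all hypothetical, and it assumes a simple configuration file that Python's `configparser` can read. Note that rewriting the file this way discards comments.

```python
import configparser
import io


def add_vfs_object(smb_conf_text: str, share: str, module: str) -> str:
    """Return smb.conf text with `module` listed in the share's `vfs objects`.

    `module` is a placeholder for whatever VFS module a product ships;
    no real product's module name is assumed here.
    """
    # interpolation=None keeps Samba's %-variables (e.g. %U) untouched.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(smb_conf_text)
    if not parser.has_section(share):
        raise KeyError(f"no such share: {share}")

    # `vfs objects` takes a space-separated list, so append rather than overwrite.
    modules = parser.get(share, "vfs objects", fallback="").split()
    if module not in modules:
        modules.append(module)
    parser.set(share, "vfs objects", " ".join(modules))

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    sample = (
        "[global]\n"
        "workgroup = TESTLAB\n"
        "\n"
        "[samples]\n"
        "path = /srv/samples\n"
        "read only = no\n"
    )
    print(add_vfs_object(sample, "samples", "example_scanner"))
```

Editing each share section in this way gives share-by-share protection; products that hook the Samba init script instead cover all shares at once.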

The test deadline was set for 5 January, which caused the usual problems for developers in countries where this date falls in the middle of a major national holiday, but submissions were dealt with smoothly. Most of the usual suspects were present, although two of the larger names in the industry chose not to submit their products, with little reason given for their absence. At the final count numbers were pleasingly manageable, and with a test bench full of seasoned regulars we looked forward to a smooth and straightforward test.

The test sets were put together using the November 2011 WildLists (Standard and Extended). These contained little of note, comprising mainly the usual worms, online gaming password stealers and the like, with a smattering of slightly more controversial items in the Extended list. Some changes to the Extended list were made at a very late stage (a handful of items had been adjudged to be ‘grey’ rather than truly malicious), the announcement from The WildList Organization coming just in time for us to incorporate the changes into the final publication of this report. We also made a few changes of our own, removing a handful of Android and similar items from the list as per our current policies. With no new polymorphic items to deal with, the test sets were rather smaller than usual and promised fewer issues for the vendors. The prospect of a clean sweep of passes loomed large.

The clean sets presented the only additional worry for the participants, and here we made the usual changes, removing older items and updating the set with a selection of new items, focusing on business software to suit the corporate focus of this month’s test. With the removals and additions more or less balancing out, the set remained much the same size at close to half a million unique files, 180GB total size.

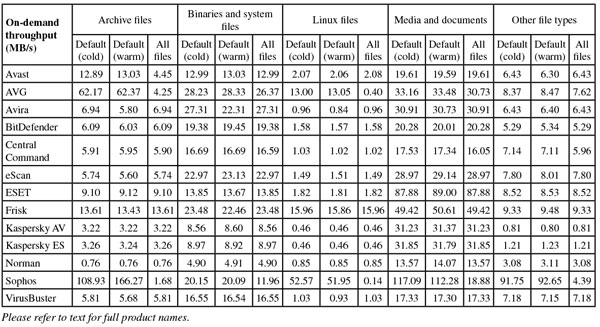

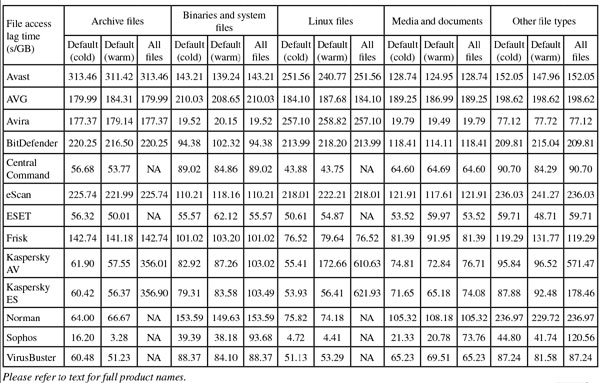

The speed sets remained unchanged from the last test (before which they were refreshed considerably), and an additional set of Linux samples was added to measure scanning speed over these types of files – taken from the /bin, /sbin, /opt and /usr areas on a basic install of the platform under test. Some adjustments to the speed scripts were required, including the removal of the activities tests which were not appropriate for this platform.
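As a rough illustration of how a speed set like this can be turned into an on-demand throughput figure, the sketch below gathers regular files from a list of directories, times a scan over them and reports MB/s. It is not the lab's actual speed script: the function names are hypothetical, the `scan` callable stands in for whatever command-line scanner is under test, and 1 MB is assumed to mean 1024 × 1024 bytes.

```python
import os
import time
from typing import Callable, Iterable, List


def collect_files(roots: Iterable[str]) -> List[str]:
    """Walk each root directory and return its regular files, skipping symlinks."""
    found = []
    for root in roots:
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                path = os.path.join(dirpath, name)
                if os.path.isfile(path) and not os.path.islink(path):
                    found.append(path)
    return sorted(found)


def throughput_mb_per_s(total_bytes: int, seconds: float) -> float:
    """Convert a byte count and an elapsed time into MB/s."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return total_bytes / (1024 * 1024) / seconds


def time_scan(paths: List[str], scan: Callable[[List[str]], object]) -> float:
    """Run `scan` over `paths` once and return the throughput in MB/s."""
    total_bytes = sum(os.path.getsize(p) for p in paths)
    start = time.perf_counter()
    scan(paths)  # placeholder for invoking the product's on-demand scanner
    elapsed = time.perf_counter() - start
    return throughput_mb_per_s(total_bytes, elapsed)
```

A set that is heavy in archives will lower this figure for any product that unpacks them by default, since the byte count reflects the compressed size while the work done reflects the contents.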

With everything ready in good time, testing proceeded rapidly with the new format of four test runs per product split into the RAP test (with updates frozen on the deadline day), and three runs through the certification components. These runs took place roughly a week apart throughout late January and early February, with testing completing just in time to repurpose the test network for the next comparative in mid-February.

Avast’s Linux product was provided as a trio of RPM packages, totalling around 50MB. The set-up process was fairly simple, with the RPMs dropping everything neatly into place. Some simple instructions were provided for compiling and installing the dazuko modules, which ran smoothly too, and everything was ready to go in next to no time. The product operates in a standard manner, with a daemon controlled by normal init scripts, configuration files where one might expect to find them, and a simple and clear command line syntax. Man pages were provided to help work out how to use things, but were not really required after reading the basic instructions provided along with the submission.

Scanning was fairly quick for the most part, and tests ran through in good time, although a few suspect files in the RAP sets did cause scans to crash out with a segmentation fault error. Re-running things from where they had left off proved fairly simple though. On-access overheads were surprisingly high – notably over archived files which are inspected in depth by default – but the real-time component remained stable and solid under pressure. Updates were simple and reasonably quick, generally completing in just a few minutes.

Detection rates were very solid, with an excellent showing in the Response tests and good scores in the reactive part of the RAP test, dropping noticeably in the proactive week. The certification components were handled impeccably, with no issues in the WildList or clean sets, and a VB100 award is comfortably earned by Avast. After a slight blip in the last test, the vendor’s history now shows five passes and one fail in the last six tests; 11 passes in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

AVG’s submission came as a single RPM, and again some additional compilation and installation work was needed to add in the on-access component – in this case a combination of redirfs and avflt modules. The operation and layout were simple and followed standard practices, making the product easy to work out without reference to the man pages, which were provided nevertheless. Everything ran smoothly and without problems, and again updates were simple and fairly zippy.

Speed tests showed good scan times on demand, which were slightly slower with archive scanning enabled, as expected. However, on-access overheads seemed rather heavy across the board. Detection rates were very good though, with another set of high scores in the Response sets and high catch rates in the reactive weeks of the RAP sets, again dropping off fairly sharply into the proactive week.

The WildList and clean sets were dealt with without problems, and a VB100 award is well earned, giving AVG five passes and one fail in the last six tests; 11 passes in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Avira’s Linux product came as a simple archive containing an install script which sets everything up, running through a set of question-and-answer stages. This seemed to run OK up until the addition of the on-access module, which tried to install the dazuko3 system but had some trouble (which we had been warned about by the developers). This proved fairly severe, locking up the test machine completely, and a hard reboot was needed to correct things. After cleaning off the machine and reinstalling the product, we opted instead to go with the simpler dazuko2 approach. This seemed to work much better, although the installer warned that it was not officially supported.

With this hurdle overcome, tests proceeded without further issues. An absence of man pages was noted, but ample information was provided in PDF and text format documentation. With the software following standard approaches it proved simple to operate, with a number of scripts provided to perform standard tasks. Scanning speeds were pretty decent, and overheads fairly light, although archives again took some time to process – as did the set of Linux samples, which contained a number of archived files (many of them JAR archives included with the Java subsystem).

Detection rates were pretty splendid, with a superb showing in the Response tests, and excellent scores in the RAP tests too, dropping a little in the proactive week but remaining impressive even there. No problems were noted in the certification sets, and a VB100 award was easily earned, maintaining Avira’s clean sweep with 12 passes out of 12 in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

BitDefender’s Linux solution was provided as an RPM.run file which, once executed, ran through the set-up process swiftly and simply. Some additional manual work was required, including copying of VFS modules and making the appropriate changes to the Samba configuration file to provide on-access protection of shares, but this was fairly straightforward. Configuration is held in XML format and modified using a control program. This proved a little more fiddly than hoped, but with a little practice it soon became simple to operate. A number of daemons are used, but these are all controlled from a single init script, so starting and stopping the program was also fairly simple.

Scanning speeds were fairly average on demand and notably slow over the archive-heavy Linux set. Overheads were a little high on access too, but detection rates were solid in the Response sets and splendid in the RAP sets.

The WildList and clean sets presented no difficulties, and a VB100 award is duly granted, giving BitDefender six passes in the last six tests; ten passes, a single fail and one no-entry in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Central Command opted for the archive-containing-an-install-script approach, and set-up was fairly straightforward. Once again, some adjustments had to be made to the Samba configuration file to make it use a VFS object to check files before granting access to them. Configuration was fairly limited for the on-access component, with a basic configuration file providing minimal controls. No man page was provided for the on-demand scanner, but it proved fairly self-explanatory. The information on the on-access component was pretty rudimentary, but operation was stable and reliable.

Scanning speeds were fairly average on demand, but on-access overheads seemed on the light side. Detection rates were decent if unspectacular, with reasonable scores in the Response sets and the reactive weeks of the RAP sets, the proactive week dropping off fairly sharply. The certification sets were handled well though, and a VB100 award is duly earned. Central Command now has five passes and one fail in the last six tests; 11 passes in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

The eScan product incorporates the BitDefender engine, and on Linux also includes a Clam component controlled by a separate init script. The install process involves applying several RPMs, but is fairly simple. The on-access component is again provided via a Samba VFS object, which requires several lines to be added to the Samba configuration file. Operation was fairly simple and logical, with clear documentation.

Scanning speeds were not bad on demand, a little heavy on access, but things seemed to operate stably under pressure. Detection scores were good, with the RAP sets particularly well handled. The WildList and clean sets threw up no surprises, and a VB100 award is comfortably earned, giving eScan six passes in the last six tests; things are a little rockier longer term, with ten passes and two fails in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

ESET’s product came as a single RPM file, making initial set-up fairly straightforward, and a flexible system allows either dazuko or Samba VFS approaches to on-access protection. At the suggestion of the developers we went for the Samba method, which involved adding a line to the Samba init script, thus covering all Samba shares rather than providing protection on a share-by-share basis as in previous products.

On-demand settings can be configured either by adjusting defaults in the configuration file or by passing in options on the command line; the latter method generally proved the simplest. The configuration file is lengthy and covers the full range of controls – including anti-spam protection – in a single, internally consistent file, making for simple operation. On-access controls included options to check archives fully, but these seemed to make no difference to the product’s operation, and archives seemed to go unexamined regardless of the settings.

Scanning speeds were thus fairly impressive, with overheads very light indeed, and detection was impressive too, with great scores in the Response sets and solid levels in the RAP sets too, not dropping off too sharply in the proactive week. The WildLists were handled well, and with just a few hacker-esque items alerted on in the clean sets a VB100 award is duly earned. ESET maintains its impeccable record with every test entered and passed for close to a decade.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Frisk’s product was one of the most basic on the test bench this month, with a simple install script which links init and configuration scripts to the real components, allowing installation and operation from wherever the user wishes. The product is another which can use either dazuko or Samba methods, and again Samba was chosen as the simplest. The Samba init file is tweaked to provide protection of all shares. The layout and operation are straightforward and unsurprising, and everything ran smoothly and stably.

Scanning speeds were pretty good, and overheads fairly light, but detection rates were rather disappointing – barely rising above 50% on some days in the Response sets. Nevertheless, the certification sets were dealt with properly, and a VB100 award is duly earned. Frisk’s test history shows five passes and a single fail in the last six tests; four fails and eight passes in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

As usual, Kaspersky entered two products for the test, representing server and desktop offerings. The differences between them seemed fairly minimal though. Packages downloaded for the submission were supplemented by an offline update system which involved installing a downloader tool and setting it off to fetch data to build a local update repository. Setting up both the products was fairly simple, with install scripts performing a comprehensive set-up. Given the few differences between the two products, we opted to make the server version use Samba protection only, and it was one of few products to perform all the necessary changes automatically.

Once up and running, making changes and running tasks is a rather less straightforward process; a lengthy PDF manual had to be consulted in depth before the design of the product became clear. A master control program is provided, but the syntax of its commands is complex and esoteric in the extreme. The manual advises passing in huge multi-line commands to perform the simplest of tasks, with the traditional Linux approach of holding configuration in a simple, human-readable text file eschewed for some reason. The easiest way we found of adjusting settings was to output the settings to a file, make changes there and read it back in again. We were baffled as to why the product couldn’t simply have a normal configuration file. After much practice however, it became fairly usable.

Scanning was very slow, and frequently used up large amounts of disk space – presumably due to archives being unpacked into temporary folders. On-access overheads were high too, particularly once the settings had been adjusted to cover all file types. Detection rates were good though, with solid showings across the Response sets and good scores in the RAP sets, dropping off fairly steeply into the proactive week.

The WildList sets were dealt with well, but in the clean sets a single sample was alerted on – a driver file for a popular gaming controller which was labelled as a FakeAV trojan. This was enough to deny Kaspersky a VB100 award for this product, and to spoil our hopes of a comparative with a 100% pass rate. Kaspersky’s corporate line has three passes and two fails, with one no-entry, in the last six tests, and seven passes and four fails from 11 entries in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 1

As mentioned, the desktop version of Kaspersky’s product is fairly similar to the server version in approach. The most noticeable difference was a desktop alert system, which seemed clear and responsive throughout testing. Again, the installation process was thorough and efficient, with the company’s own on-access system (based on the redirfs module) compiled and installed cleanly. Operation remains opaque and bizarre, with the control script hidden away and demanding lengthy and complex syntax. For non-Linux users, the approach is a little like having a Windows program that is run not by double-clicking an icon, but instead by right-clicking it and selecting the ‘Sproing!’ option, then pressing CTRL-ALT-F13 while licking the left side of the screen to access the controls.

Joking aside, the configuration system does become reasonably usable once it has been bullied into something approaching a normal set-up (by dumping the configuration to a file and adjusting it there), and things seemed generally stable and responsive. Scanning speeds were again sluggish, and overheads fairly heavy, with our set of media and documents the only area to be handled rapidly on demand. Detection was decent though, with a dependably high level throughout the Response sets and the earlier parts of the RAP sets, the proactive week dropping considerably. The WildList was dealt with without issues, but in the clean sets the same file was falsely accused, and Kaspersky’s desktop offering is also denied certification by a whisker. The desktop product’s test history shows four passes and one fail from five entries in the last six tests; nine passes and two fails in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 1

Norman’s product was unique in this month’s line-up in being provided without any way to update offline. The install and set-up process requires Internet access, and was thus run on the deadline day. It took some time, much of which was spent waiting for things to happen in the background – the script returns control with a warning that it won’t actually be finished for several minutes, and the product’s desktop interface (which seems to be the only way to monitor and control many of its activities) was unavailable for quite a while. When it finally reappeared, it continued to warn that updating and other tasks were ongoing.

Operating the on-demand scanner was at least fairly simple, but controls for the on-access component – which uses Norman’s own unique approach of mounting protected file systems in a special way – were limited and unreliable. As with the Windows products, we noted that despite setting the controls not to delete or remove infected items, some files disappeared after attempts to access them.

Scanning speeds were very slow, but overheads were not much worse than average, and detection rates were fairly reasonable – decent if not stellar in the Response sets and actually quite impressive in the earlier few weeks of the RAP sets. The WildList and clean sets were handled well, and Norman earns a VB100 award. The vendor’s test history shows a strong recovery from a rocky period, with five passes and a single fail in the last six tests; eight passes and four fails in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

The Sophos Linux product is apparently due to be connected to the company’s cloud look-up system in the next few months, but for now remains locally based only. The set-up process was one of the simplest this month, with the installer script compiling and installing the company’s proprietary on-access system without complaint and with no need for manual intervention. Operation is a little less transparent than some, with no simple configuration file, and we could find no way of enabling archive scanning on access. Testing proceeded well though, with good stability throughout.

Scanning speeds were very good indeed, with overheads very light, even in the sets containing no archives – hinting that the on-access system is more efficient than most. Detection rates were a little disappointing in the Response sets, and it looks like the product’s integration with the cloud is long overdue. Performance across the RAP sets was a little more impressive, with the older items handled noticeably better.

The core sets presented no surprises, and after a bad month last time Sophos returns to form, earning a VB100 award without trouble. Sophos now has five passes and one fail in the last six tests, and 11 passes in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Last up this month, VirusBuster’s product as usual provides few surprises thanks to its close similarity to the Central Command solution which is based on it. The set-up again revolves around an install script, and some simple tweaks to the Samba configuration are required, but we got things up and running fairly quickly with minimal effort. Syntax is clear and simple, and operation generally proved straightforward.

Scanning speeds were not bad, and overheads fairly light, with scores a little below par in most areas but far from disastrous. The core sets were handled well, and another VB100 is duly earned, giving VirusBuster a solid record of six passes in the last six tests; 12 in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

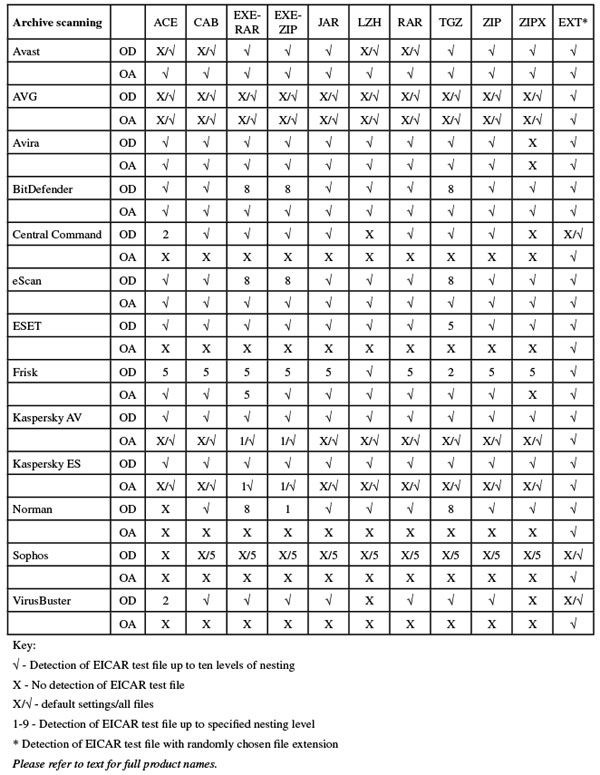


On-demand throughput graph

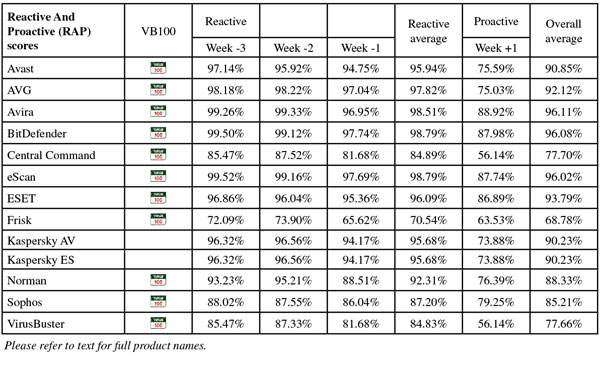


File access lag time graph


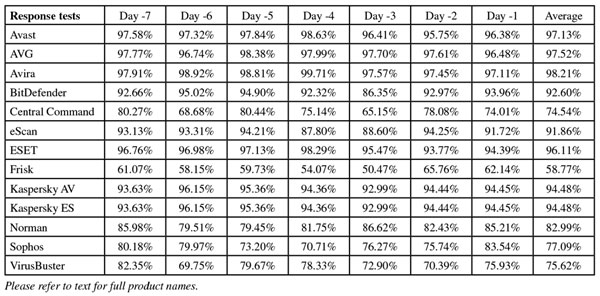

Response graph


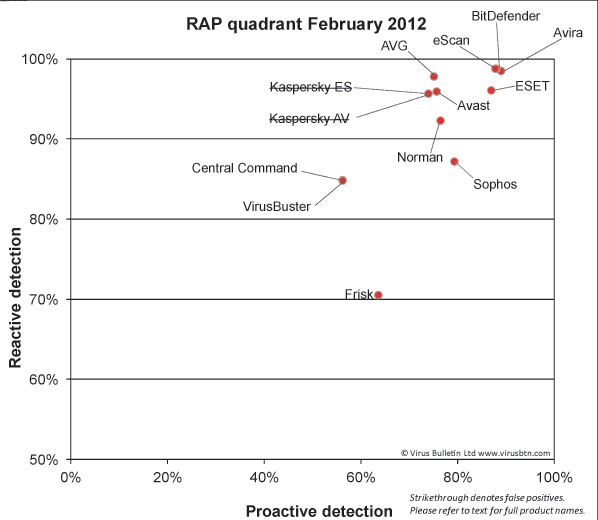


As we hoped, there were few major issues this month. The main surprise came in the speed sets, where sluggishness seemed to be the order of the day. This was not helped by our selection of samples for the Linux speed set, which contained many more complex archive files than we had expected and thus took considerably longer than hoped to get through. The use of only a single client system also slowed things down, as only one product could be put through the on-access tests at a time, and in most cases the test took longer than a full working day to complete.

In certification terms, only a single file prevented a clean sweep. The file affected two products from the same vendor, which can perhaps count itself rather unlucky on this occasion.
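The pass/fail pattern in the per-product figures can be summarised in a few lines of code. The sketch below is an illustrative reading of those figures rather than the official rule set (the full methodology is linked at the end of this report): it assumes an award needs 100% WildList detection both on demand and on access, plus zero false positives in the clean sets. Whether the Extended list counts towards certification is not stated here, so that is left as a flag; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CertificationResult:
    """The five figures listed for each product in this report."""
    itw_std: float        # ItW Std, on demand (%)
    itw_std_oa: float     # ItW Std, on access (%)
    itw_extd: float       # ItW Extd, on demand (%)
    itw_extd_oa: float    # ItW Extd, on access (%)
    false_positives: int  # alerts counted as false positives in the clean sets


def earns_award(result: CertificationResult, require_extended: bool = True) -> bool:
    """Assumed rule: full WildList detection and no false positives."""
    rates = [result.itw_std, result.itw_std_oa]
    if require_extended:
        # Assumption: treat the Extended list as part of the requirement.
        rates += [result.itw_extd, result.itw_extd_oa]
    return result.false_positives == 0 and all(rate == 100.0 for rate in rates)


if __name__ == "__main__":
    clean_sweep = CertificationResult(100.0, 100.0, 100.0, 100.0, 0)
    one_false_alarm = CertificationResult(100.0, 100.0, 100.0, 100.0, 1)
    print(earns_award(clean_sweep))      # True
    print(earns_award(one_false_alarm))  # False
```

Under this reading, a single misidentified clean file is enough to fail an otherwise perfect run, which is what happened to the two products that missed out this month.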

On the whole, products proved reasonably tractable, with some good, clear set-up processes and in general simple operating procedures. A few chose to break free from the standard Linux approach to software design, and in places this presented some serious issues, but most of these were overcome with careful reference to documentation. Whether busy server admins would be willing to spend time figuring out products which have wilfully ignored almost universal best practices is up for debate.

As we put the final touches to this report, the next comparative – on Windows XP – is already under way. It will be several times larger than this test and is likely to be a much more demanding task. Unfortunately, the unexpectedly long running time of this test has left little time for the maintenance work we had hoped to fit in, or the long-planned lab move, but we continue to welcome feedback, suggestions, comments and criticisms.

Test environment. All products were tested on identical machines with AMD Phenom II X2 550 processors, 4GB RAM, dual 80GB and 1TB hard drives, running Red Hat Enterprise Linux 6.2, AMD64 Server Edition. On-access tests were run from a client machine with the same hardware specification running Microsoft Windows XP SP3 Professional Edition, x86, connected via Samba 3.5.10. For the full testing methodology see http://www.virusbtn.com/vb100/about/methodology.xml.

Any developers interested in submitting products for VB's comparative reviews, or anyone with any comments or suggestions on the test methodology, should contact [email protected]. The current schedule for the publication of VB comparative reviews can be found at http://www.virusbtn.com/vb100/about/schedule.xml.