2012-05-01

Abstract

As expected, the annual VB100 test on Windows XP was an epic. A higher than usual pass rate was tempered by numerous stability issues with the products under test, prompting the unveiling of a new stability rating system. John Hawes has all the details.

Copyright © 2012 Virus Bulletin

The approach of spring has always been met with mixed emotions by the VB lab team. As the semi-rural surroundings of the VB office erupt in greenery and blossom, and the sun begins to nudge its way through the winter cloud, our joy at the environmental improvements is dampened by the knowledge that the annual XP test is upon us. The platform itself is as simple and comforting as a familiar old blanket, and its ongoing global popularity ensures solid and unquestioning support from every anti-malware vendor under the sun. As a result, this test is all but guaranteed to be the biggest of the year.

As the deadline day approached, all the evidence pointed to yet another monstrous haul of submissions. On the deadline day itself, the flood of submissions became a torrent and the numbers rose still further, with a selection of new faces appearing on top of our ever-growing clutch of regulars. In the final reckoning though, things did not look too bad, with a cunningly late deadline announcement helping fend off those with slower reactions and a handful of expected entries failing to materialize.

The initial total came in at 60 entries, plus a pair of new faces which were accepted as tentative submissions only, pending some experimental test runs to see if their design would fit in with our testing methodologies. So, not quite the behemoth we’d feared, but still comfortably inside the top five biggest hauls in the history of VB100 testing.

This month saw several companies submitting multiple products. This is something which we have been quite open to up until now – our policy has been to accept and test up to two products per company free of charge, and to impose a small fee for any additional products to help support the extra work involved. In the future, though, we will be tightening our policy to allow only one product free of charge per vendor.

A remarkable chunk of this month’s participants were based on the same underlying engine, with some 17 products making use of the ubiquitous VirusBuster engine in some form or another.

One change introduced this month was the intention to be stricter in dealing with highly unstable products. In the past, where submissions have proven difficult we have done our best to nurse them through the tests, but with time so tight under our new multi-part testing processes, we decided that more than a handful of crashes or failed scans would be enough to see a product excluded from further testing, freeing up the test bench for more reliable products. We also decided that we would not keep the names of the most troublesome products to ourselves – they will be called out at the end of this report as ‘untestable products’.

A final related development we had hoped to add this month was a table of data listing such details as install and update time. One of the core things we planned to include here was an indication of product stability, evolving from the comments which have been made in the written reviews for some time now. We worked out a simple system, splitting problems into levels of severity and rating them depending on whether they occurred in normal use or only in high-stress situations, ranging from ‘Solid’ for products with no issues at all, through ‘Stable’, ‘Fair’ and ‘Buggy’ for those with increasing numbers of minor issues, and ‘Flaky’ for the most unreliable. Once this system has settled in and become reasonably repeatable and scientific, we hope to be able to include stability as one of the VB100 certification requirements.

A more detailed breakdown of this system will be merged into our procedures once it is finalized – unfortunately, as this month’s testing went on, and it became clear how much time it would take to gather the existing fields of data, our hopes of having the new system fully operational this month fell by the wayside. Nevertheless, we will include some indication of how it would have looked as we go through the products – we hope to provide a full table as part of the next comparative.
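The rating scheme described above can be illustrated with a small sketch of how logged issues might be scored and mapped onto the five labels. This is not VB's published method. The severity weights, the doubling for problems seen in normal use, and the score thresholds are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    # Hypothetical weights for each level of severity
    MINOR = 1
    SERIOUS = 3


@dataclass(frozen=True)
class Issue:
    severity: Severity
    high_stress_only: bool  # True if the problem only appeared under high-stress scans


# Hypothetical assumption: problems hit in normal use count double
NORMAL_USE_WEIGHT = 2
HIGH_STRESS_WEIGHT = 1

# Hypothetical inclusive upper bounds, checked in order; anything above is 'Flaky'
THRESHOLDS = [(0, "Solid"), (2, "Stable"), (6, "Fair"), (12, "Buggy")]


def stability_score(issues):
    """Sum severity weights, scaled by whether the issue arose in normal use."""
    total = 0
    for issue in issues:
        weight = HIGH_STRESS_WEIGHT if issue.high_stress_only else NORMAL_USE_WEIGHT
        total += issue.severity.value * weight
    return total


def stability_rating(issues):
    """Map a list of observed issues onto the five-step stability scale."""
    score = stability_score(issues)
    for upper, label in THRESHOLDS:
        if score <= upper:
            return label
    return "Flaky"
```

Under these assumed weights, a product with no issues rates 'Solid', a single minor issue in normal use rates 'Stable', and a couple of minor normal-use bugs tip it into 'Fair'. Weighting normal-use problems more heavily reflects the distinction drawn above between everyday use and high-stress situations.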

Windows XP has been with us for well over a decade, and even now it is only just being supplanted as the most popular operating system worldwide. Most estimates put it on between 25% and 35% of end-user systems, with the much more modern Windows 7 on somewhere around 35%. The lab team have been through the process of setting up XP machines dozens, perhaps hundreds of times, so it presented few difficulties. In fact, for this comparative an old image was recycled, refreshed and updated with a few handy tools before the final test image was taken and spread to the suite of test machines.

Sample sets were then prepared and placed on all systems, with the bulk of the work going into the clean set – alongside the usual tidying up, a hefty bundle of end-user software was added to the set, mainly harvested from a large collection of DVDs given away with popular magazines. With much of the stuff on these disks being either somewhat obscure or (in some cases) clearly a little less than trustworthy, we limited it to only the most popular and mainstream items.

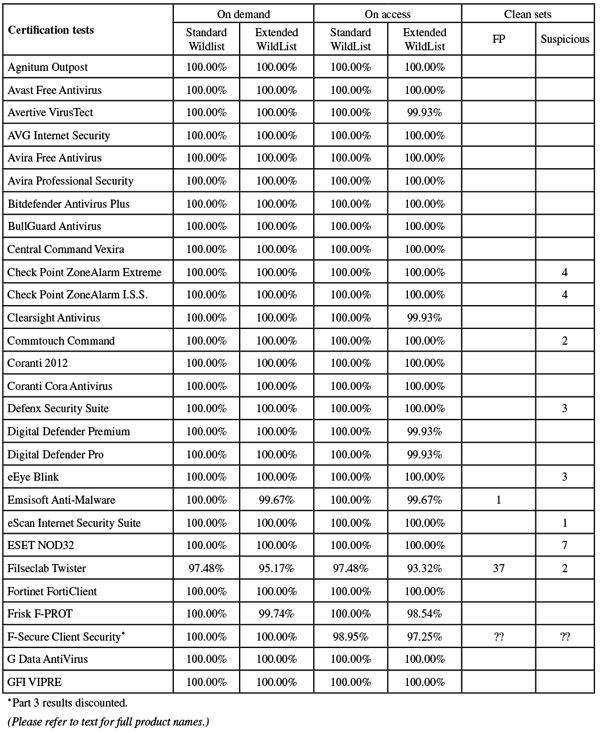

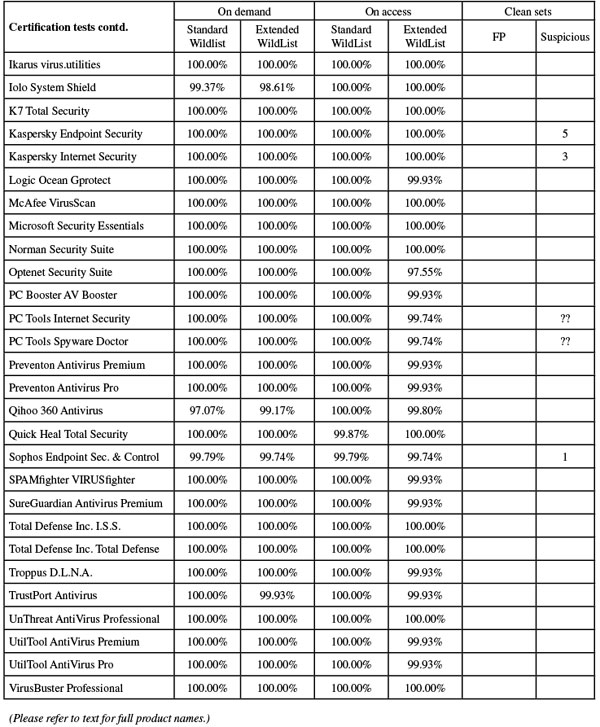

The WildList sets were built using the January 2012 lists, which were released just a few days before the deadline for this comparative (15 February). With the Extended WildList still going through a settling-in process, and many items still being removed from the list well after it has officially been finalized each month, we needed to be fairly flexible about what we included. Essentially, we accepted any challenge against any item in the list which our contacts felt to be inappropriate, excluding anything that did not fully satisfy our requirements to be labelled as malware. In the end, most of these decisions proved justified, with several post-release addenda confirming the removal of the items.

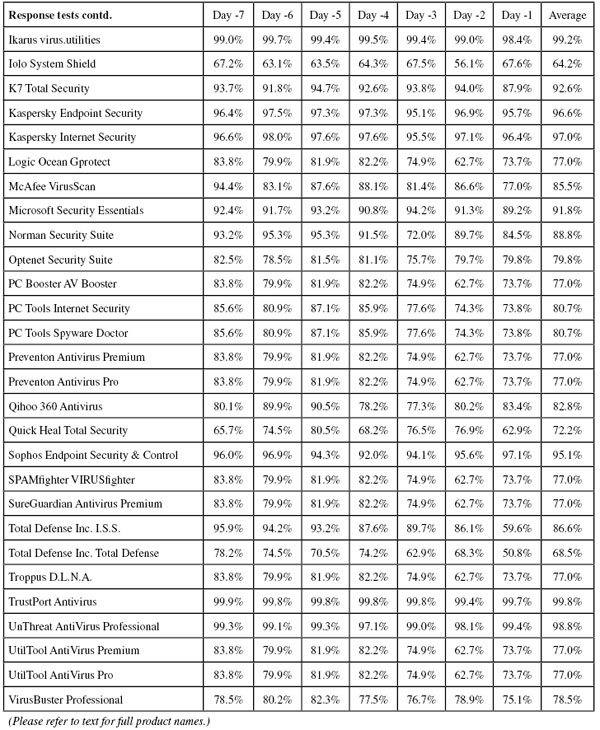

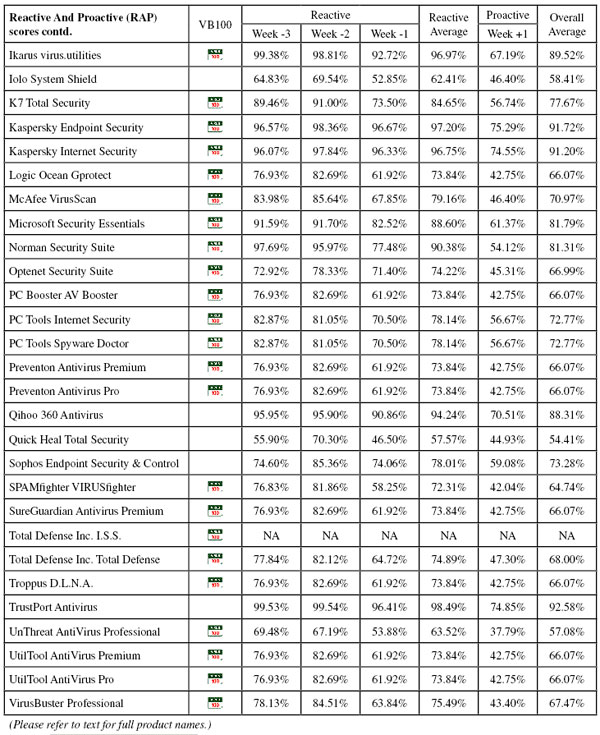

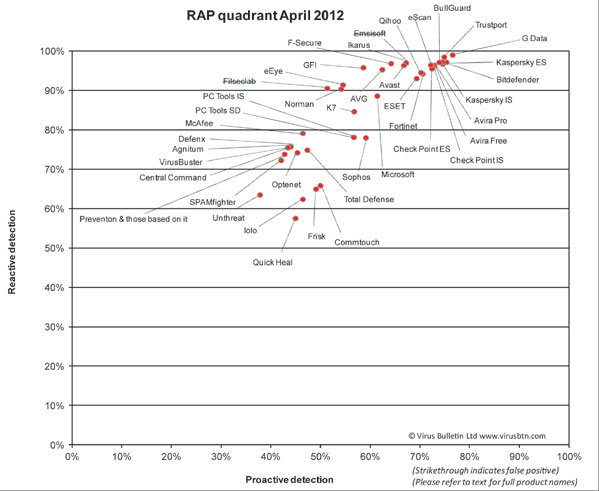

The RAP sets were built, as usual, in the weeks around the deadline, and the Response sets were compiled on a daily basis from the latest available samples. Some additional work has gone into honing the content of the Response sets. We have improved automation and fine-tuned the measures we take to ensure that no single provider swamps a set with unexpectedly large contributions, while at the same time aligning the contents more closely with prevalence information. This has had a knock-on effect for the RAP sets, which are put together using the same basic set-up – we hoped to see the impact of this in the form of a more rigorous exercise of the products’ detection capabilities, especially in the proactive set. In the end, the RAP sets averaged around 30,000 samples, with the daily sets used for the Response tests ranging from 2,000 to 6,000 samples, averaging around 4,000.
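The balancing of the Response sets described above can be illustrated with a simple per-provider cap combined with prevalence ordering. This sketch is an assumption, not the lab's actual tooling. The `Sample` fields, the `prevalence` count and the `max_share` cap are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    sha1: str
    provider: str
    prevalence: int  # hypothetical: number of sightings reported for this sample


def build_daily_set(samples, target_size, max_share=0.25):
    """Pick up to target_size samples, most prevalent first, capping each provider's share."""
    cap = max(1, int(target_size * max_share))
    per_provider = defaultdict(int)
    chosen = []
    seen = set()
    # Most prevalent samples first; sorted() is stable, so ties keep input order
    for s in sorted(samples, key=lambda s: s.prevalence, reverse=True):
        if len(chosen) >= target_size:
            break
        # Skip duplicates and providers that have already used up their share
        if s.sha1 in seen or per_provider[s.provider] >= cap:
            continue
        chosen.append(s)
        seen.add(s.sha1)
        per_provider[s.provider] += 1
    return chosen
```

In this sketch, a single provider contributing thousands of highly ranked samples still supplies no more than `max_share` of the day's set, and the remaining slots go to other providers' samples in order of prevalence.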

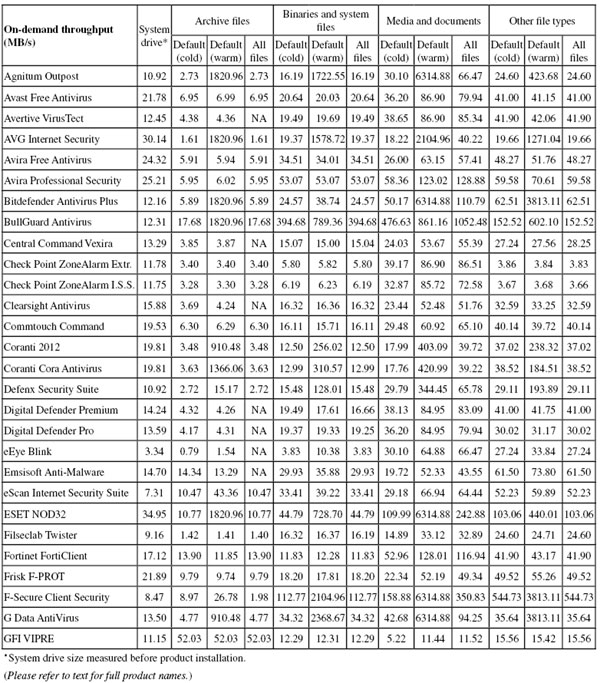

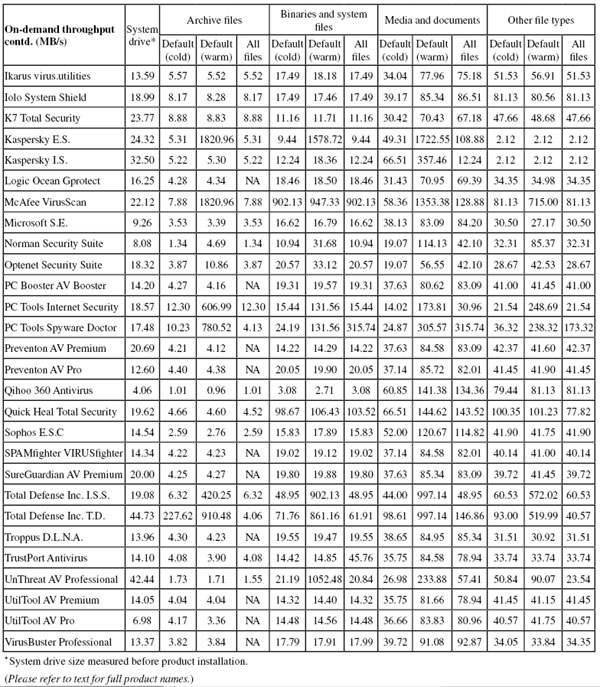

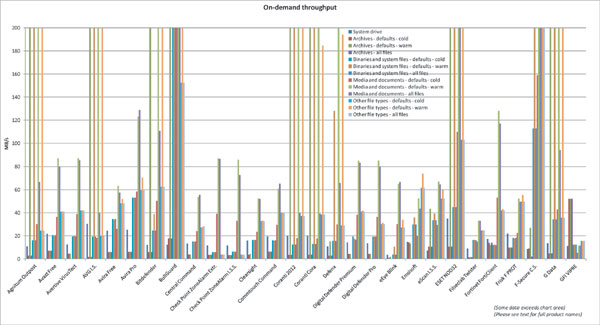

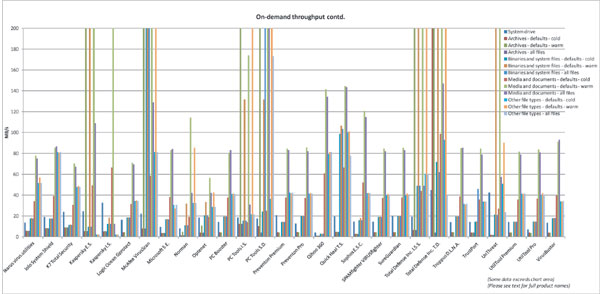

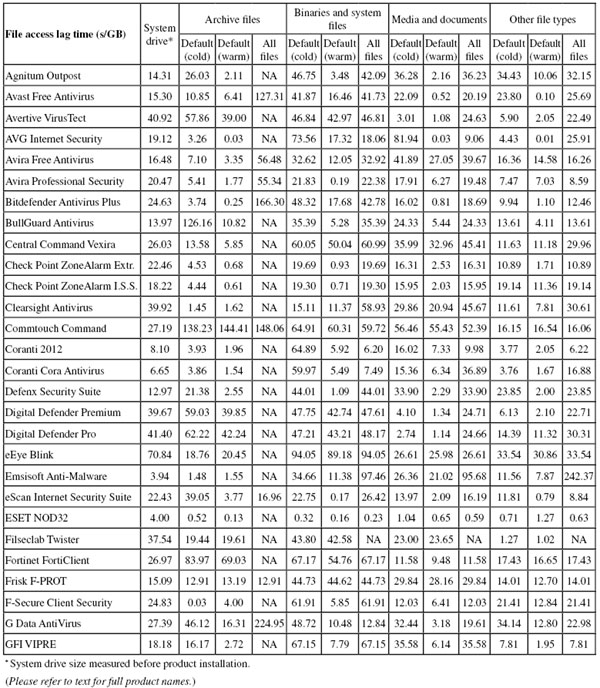

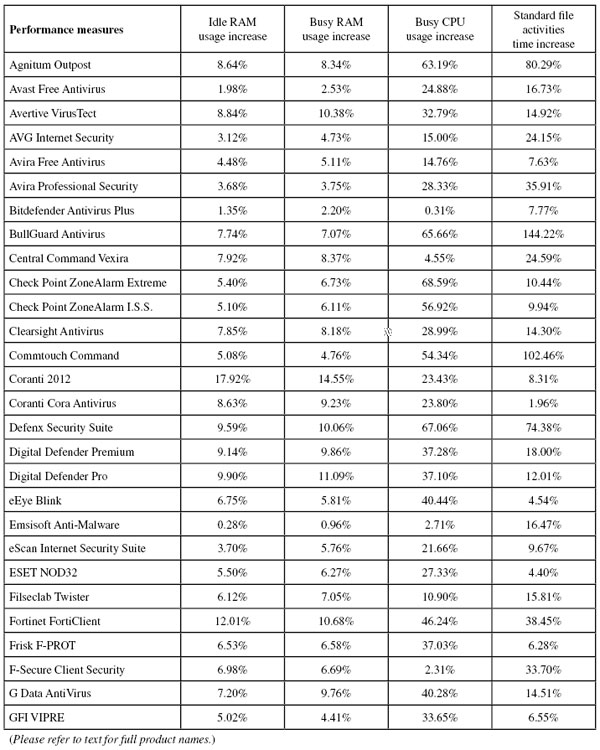

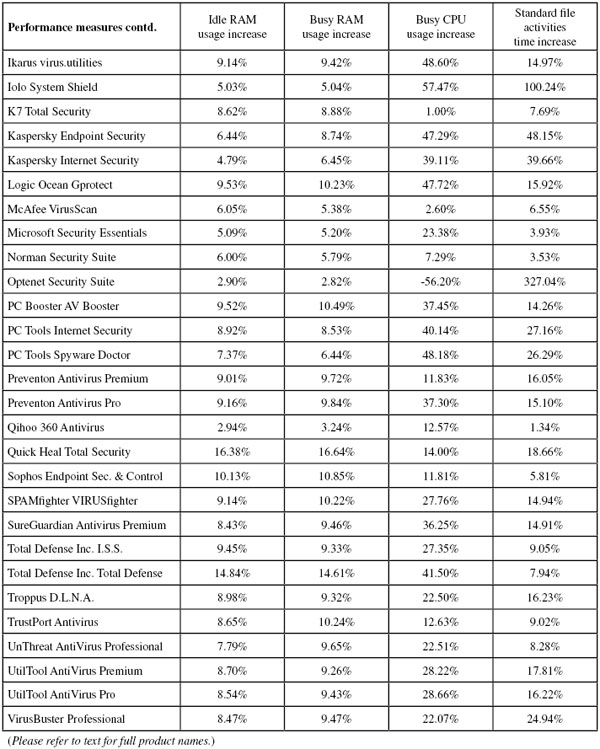

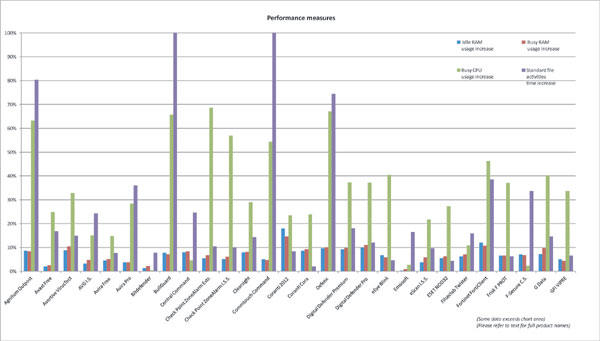

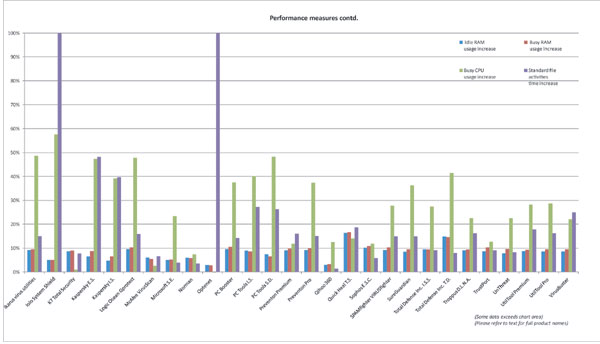

Speed and performance sets remained essentially unchanged, but we hope to find time to expand our selection of samples used in the speed measures, and to soup up our set of performance measures a little, in time for the next test.

With everything in place, we were ready to begin our epic trawl through the seemingly endless list of products.

Product version: 7.5.1 (3791.596.1681)

Update versions: 15/02/2012, 14/03/2012, 21/03/2012, 27/03/2012

First up this month is Agnitum’s Outpost suite, a very regular participant in our tests, and one which rarely gives us much trouble. The package provided measured 112MB, including an initial set of updates. The set-up process is fairly lengthy, much of the time being devoted to initiation of the firewall components. As in recent tests, we observed that the option to join a feedback scheme is rather sneakily hidden on the EULA acceptance page of the install process. Updates for the main part of the test – which were run between two and five weeks after the initial installer was sent to us – averaged around 14 minutes.

The interface is clear and sensible, providing a fairly decent set of controls for the anti-malware component, although most space is devoted to the firewall. Operation was smooth and stable, with no problems observed throughout the tests, and all jobs (including high-stress scans of infected sample sets) completed in good time. Scanning speed measures showed some decent initial rates, speeding up massively in the warm runs, but on-access lag times seemed a little on the high side. Resource use was also fairly high, and our set of tasks took noticeably longer than the baseline measures.

Detection rates were reasonable, dropping off somewhat in the last few days of the Response sets, and falling rather sharply in the proactive week of the RAP sets too, as expected. There were no problems in the certification sets though, with flawless coverage of the WildList sets and no false alarms in the clean sets, and Agnitum starts this month’s test off with an easy pass. Testing took no more than the 48 hours allotted to each product, and with no bugs or problems encountered, the product was rated as ‘Solid’ for stability. In the vendor’s recent test history, we see ten passes from ten attempts in the last two years.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 6.0.1367

Update versions: 120215-1, 120315-0, 120312-0, 120327-0

Avast released its latest upgrade, version 7, shortly after the deadline for this month’s test, so we were stuck with version 6 – which was no great pain for us, given its solid performance to date. For a change, the install package came separately from the latest updates, making for a slightly larger download than usual: 62MB for the main installer and 51MB for the updates. The set-up process is simple with no big surprises, completing in under a minute, and in general updates ran quickly too. In one of the three runs there was a bit of a snag though, when updating seemed not to be working at all. After leaving it sitting at 0% for quite some time, we finally gave up, and from there two reboots were required to get things moving. Once it had got over this temporary glitch, things got back on track and testing continued; ignoring this period, updates averaged only a couple of minutes.

The interface is beautifully designed, combining eye-pleasing visuals with mind-pleasing clarity of layout and an extremely thorough set of controls made easily usable and unthreatening to the novice. Testing was generally fairly straightforward, although at one point when setting up the on-access tests the system became unresponsive and required another reboot. Having learned from past experiences, we whitelisted some of the test scripts and tools, and the behavioural monitoring was partially disabled to enable tests to proceed unimpeded.

From here on things moved along very nicely indeed, although speeds were a little slower than expected. On-access overheads were also a little high, but our set of tasks ran through in good time and resource usage was on the low side. Detection rates were excellent, with some superb scores in the Response sets and the reactive parts of the RAP sets; the proactive week did show quite a decline compared to recent tests, but it is likely that this is mainly due to adjustments in how the sets were developed, and we expected to see similar if not steeper drops in other products.

The core certification sets were handled impeccably, with no problems achieving the required standard for certification. After a small hiccup a few months ago, things seem to be back on track for Avast, with a single fail and 11 passes in the last two years. A few fairly minor bugs were noted during testing, for which reboots were required. These occurred outside the high-stress parts of the test, so the product achieves only a ‘Fair’ rating for stability.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 1.1.81

Update versions: 14.1.218, 14.1.267, 14.1.278, 14.1.283

The first of a raft of products from the same root source, Avertive’s VirusTect is based on the VirusBuster engine via the Preventon SDK, and has become a fairly regular visitor to our test bench. The installer was provided with latest definitions included for the RAP tests, and weighed in at 85MB. The set-up process is smooth and simple with no surprises, and completes in good time. Updates were likewise simple, reliable and speedy, with total install time averaging under ten minutes.

The GUI sticks to the older format that is familiar from many previous tests, and proved simple to operate. Our only gripes were that old log data is dropped once the log reaches 20MB or so, and that by default the product logs every item it checks, producing a great deal of pointless logging. A registry tweak changed the former, while an interface option was provided for the latter, and tests proceeded smoothly from there on. The only real bug observed when using the product was that the on-demand scanner ignored a setting to ‘detect only’ when fired off via the context menu – there was no such issue when starting scans from the interface itself, and this is the most minor of bugs.

Scanning speeds were not bad, and overheads just a little on the high side, while resource use and impact on our set of tasks were both fairly average. Detection rates were reasonable if not especially impressive, with a downward trend towards the more recent days of the Response test and a fairly steep drop into the proactive week of the RAP sets. The certification sets were handled well though, with thorough coverage of both WildList sets, only a single item ignored on-access thanks to being a self-extracting archive – as this was in the Extended list, it presented no obstacle to certification and a VB100 is duly awarded.

Avertive has only entered three tests in the last six, but has passed each time; the two-year view shows four passes and two fails from six attempts. With only a single, very minor issue observed, our bugginess rating is ‘Stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.93%

False positives: 0

Product version: 2012.0.1913

Update versions: 2112/4809, 2114/4872, 2114/4884, 2114/4897

As usual, AVG provided its premium business suite for testing, with the installer weighing in at a fairly hefty 142MB and the set-up process running through in a fair number of steps, including offers to install a security toolbar and change the default search provider. The whole process was fairly speedy though, completing in just a few minutes, and later online updates were fast too, averaging only three minutes.

The interface is simple and unthreatening – a little angular, and with a sombre colour scheme to keep business folk from getting overexcited. The layout is clear and usable and a very thorough level of options is provided in very accessible fashion. Tests ran smoothly, with no issues of any kind.

Scanning speeds started off pretty decent and increased to lightning speeds in the warm runs, while overheads were mostly pretty light – a little above average in some sets to start with, but benefiting hugely from some smart caching in the warm measures. RAM usage was low and CPU use below average too, with a notable but not extreme effect on our suite of standard tasks.

Detection rates were splendid, with a very consistent showing through the Response sets and good scores in the RAP sets too – once again that rather sharper than usual drop-off into the proactive week reflected our adjustments to the compilation of the set. The WildList and clean sets were brushed aside without any problems, and a VB100 award is easily earned by AVG.

A momentary lapse just under a year ago means AVG now has five passes and a single fail in the last six tests; 11 passes from 12 attempts in the last two years. There were no stability issues, meaning that the product earns a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 12.0.0.849

Update versions: 7.11.23.32, 7.11.25.110, 7.11.25.198, 7.11.26.28

As usual in our desktop tests, Avira submitted both its free version and its paid-for version, with the free one up first simply due to alphabetical ordering.

The product was provided with an offline update bundle, the main installer being 80MB and the updater a further 58MB. The install process was pretty simple, enlivened only by the offer to install the Ask toolbar (versions of which are flagged by many products as ‘potentially unwanted’) – an option which, pleasingly, is unchecked by default. The remainder of the process is speedy and unchallenging, taking no more than two minutes, and updates ran through in five minutes on average.

The interface is much improved after a recent facelift, and is generally pretty usable and easy to navigate, with a pretty thorough set of controls available. Tests ran through without problems, with decent speeds and reasonable overheads, low resource use and a low impact on our set of tasks.

Detection rates were excellent, with reliably strong scores through the Response sets and a splendid start to the RAP sets, declining a little into the later weeks as expected. The certification sets presented no problems, and a VB100 award is well deserved. Our test history for this version shows three passes from three entries in the last six tests; five from five in the last two years. With no bugs or problems noted, a ‘Solid’ stability rating is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 12.0.0.1209

Update versions: 7.11.23.32, 7.11.25.112, 7.11.25.198, 7.11.26.28

The paid-for version of Avira is similar in many ways to its free sibling, with the install package measuring 83MB and the same 58MB initial update bundle shared by the two. The set-up process ran along similar lines, although without the offer of a third-party toolbar, and completed in two minutes. Later updates were surprisingly slow, however – on most occasions claiming that a full hour would be needed, but actually taking between 20 and 30 minutes.

The interface closely resembles that of the free version but does provide a few little extras in terms of configuration, including a more granular scanning system in the interface (although in both cases most scan jobs were run using the context menu option). The layout is clear and usable.

Scanning speeds were noticeably faster here than in the free version, and overheads distinctly lighter – this is something we have observed before, and the developers regularly query it as they insist that the two products should be more or less identical. However, we observed the same differences over several repeat runs on different test systems. In the performance measures, while RAM use was low, rather more CPU cycles seemed to be used, and impact on our set of tasks was a little on the high side. Again, on observing the differences between the two products, the tests were re-run several times, but the same distinction between the two was observed.

Detection rates were identical to those of the free product in the RAP sets, thanks to that shared update, but in the Response tests the Pro version had a slight edge in most sets – perhaps thanks to luck in the timing of the tests, which were run on the same day but a few hours apart in most cases. There were no differences in the certification sets though, and another VB100 award is easily earned by Avira. Our test history shows a very solid 12 passes in the last two years. With no bugs observed, stability was also ranked ‘Solid’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 15.0.31.1283

Update versions: 7.41018/7750778, 7.41495/6996989, 7.41561/6956643, 7.41654/6984626

Bitdefender’s flagship range has had a rather drastic makeover of late, with the latest version of its Antivirus Plus 2012 product provided as a hefty 212MB package. The set-up is fast and simple, adorned with the company’s new ‘Dragon Wolf’ styling, and takes not much more than a couple of minutes to complete, even including an initial ‘quick scan’ (which is very quick indeed). Online updates took four to five minutes, although in one instance as soon as this initial update was complete and the interface showing, a second update was requested, which took about another five minutes.

The GUI is dark and a little forbidding, but attractive and simple to operate, with an impeccable range of options provided. Speeds were OK to start with, showing some somewhat irregular speed-ups in the warm runs. On-access lag times also started fairly well and improved notably on repeat attempts. Resource use was extremely low, almost imperceptible in terms of CPU use, and our set of tasks blasted through in splendid time.

Detection rates were similarly impressive, with the Response sets showing only the slightest downward trend into the more recent days, and some solid figures in the RAP sets too – even the proactive week was pretty well covered, given the toughness of the challenge this month. The certification sets presented no issues, and Bitdefender comfortably earns a VB100 award.

The last six tests show a perfect six passes, with the longer-term view showing only a brief lapse, with a single fail and 11 passes in the last two years. No stability problems were noted, earning Bitdefender a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 12.0.213

Update versions: 12.0.215

BullGuard is based on the Bitdefender engine, but has its own distinct look and feel. The installer measured 148MB including updates, and the product installed with minimal fuss in a little over a minute. Updates were also speedy, averaging less than five minutes – although on one occasion a rather unassuming alert did suggest a reboot might be a good idea.

The interface is fairly minimalist and pared-down, with a warm orange bar along the top and a sparse, pale grey area below where simple messages and large buttons provide the bulk of the product’s feedback and control. Options are fairly basic in some areas, a little more complete in others, but usage is relatively simple and intuitive.

Scanning speeds were blisteringly fast, and overheads fairly light, except over the archive set. RAM use was around average, but CPU use was one of the highest observed this month and our set of tasks took much longer than necessary to complete – this could be related to some archive work in the activities set, with the sets of samples fetched and manipulated in zip format in several of the stages.

Detection rates closely mirrored those of Bitdefender, outstripping them slightly in the earlier days of the Response sets, but dropping slightly lower in the last few days. Once again, RAP scores were solid in the reactive weeks and still pretty impressive in the proactive week. With no issues in the certification sets, BullGuard also comfortably qualifies for VB100 certification this month.

The company’s history shows five passes from five attempts in the last six comparatives, only the annual Linux test having been skipped. Longer term entries are a little less regular, with seven passes from seven attempts in the last two years. With no stability problems, BullGuard earns a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 7.3.33

Update versions: 5.4.1/14.1.219, 5.4.1/14.1.263, 5.4.1/14.1.270, 5.4.1/14.1.282

Another of the 17 products based on the VirusBuster engine in this month’s test, Vexira is the most similar to the mother ship, with only the colour scheme differentiating it from VirusBuster’s own offering. The 69MB installer ran through fairly well, although the process of applying a licence key was a little odd, with the focus jumping to the ‘next’ button each time a key was pressed, making it rather fiddly to enter the full key code. The set-up was supplemented by a 75MB update bundle, which was applied quickly and easily offline; later online updates were a little more troublesome, however.

As noted last time we looked at this product, several of the update methods, including a few spots in the main interface and one of the options in the system tray, seemed to have problems initiating updates properly. Messages would suggest the job had started, but no progress could be observed and even after leaving it alone for several hours no change in status was apparent. In the past, leaving it overnight seemed to get the job done, but this month time was too tight for us to leave it that long. Fortunately, we discovered that one of the update options – which opens what appears to be a dedicated updating GUI – did work properly, running through a number of steps (an option to progress from one to the next was available, but given the earlier problems we chose to ignore it). With the download time a little slow, the total process of installing and updating using this method took 25 minutes on average.

The interface has had a minor polish recently, but remains rather bland and wordy, with a decent degree of fine control available, but much of it presented in a fiddly, clunky fashion. Thanks to much practice with the product we were able to get things moving along quickly though, and in the speed measures we saw some fairly mediocre scan times and somewhat high overheads on access. Resource use was not excessive though, and our set of tasks took only slightly longer than average to complete. Detection rates were similarly mid-range – fairly steady through the Response sets and reasonable in the reactive parts of the RAP sets, dropping quite sharply into the proactive week. The WildList and clean sets did not turn up any surprises though, with good coverage throughout, and a VB100 award is duly earned.

Central Command had a spot of bad luck a few months ago, with a rare false positive blemishing an otherwise solid record – the vendor currently stands on five passes from six attempts in the last six tests; 11 passes and a single fail in the last two years. The issues observed with the update system were the only problems encountered, and Vexira is thus rated as ‘Fair’ for stability.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 10.1.079.000

Update versions: 8.1.8.79/1078935552, 8.1.8.79/1081090624, 8.1.8.79/1081435200, 8.1.8.79/1081774656

Check Point tends to take part in our tests only once or twice a year, but generally does well thanks to the underlying Kaspersky engine. This month the vendor submitted two products, which for the most part seemed fairly similar.

The installer for the ‘Extreme’ suite came as a slimline 5MB downloader tool, which fetched the noticeably larger 360MB main installer. A bundle of updates was provided for offline application for the RAP tests, which needed to be dropped into place in safe mode; later updates took around 15 minutes on average. The set-up process itself was fairly straightforward, taking only three to four minutes once the main installer was fetched (and taking an additional 25 minutes on the first install). The option of a browser toolbar was once again noted. The process did need a reboot to complete, and on at least one occasion a second reboot was required after running the online update.

The interface is a little short on the glitz and glamour one expects of end-user products these days, looking a little old-fashioned and clunky, and although a reasonable degree of fine-tuning was available, it was occasionally tricky to find and lacking in consistency across the product. During testing we noted a number of minor issues and irritations, although for the most part these only occurred under high stress. We observed the interface freezing several times after large scan jobs, and occasionally saw other error messages which resulted in the GUI restarting. Logging also proved somewhat unreliable, with a couple of jobs requiring a re-run after no log information could be found at the end of the scan.

Scanning speeds were rather slow, except over our set of media and document samples, but overheads were not too high. RAM use was reasonable, but CPU use was very high. Our set of activities showed a fairly low impact in terms of runtime, however.

Detection rates were excellent, with a dependably high rate throughout our Response sets and solid levels in the RAP sets too, not dropping off too sharply in the proactive week. The core sets were handled well, with only a few items in the clean sets labelled as being of a hacker-ish bent, and Check Point earns a VB100 award for its efforts. This is the vendor’s only entry in the last six tests, but the two-year view shows three passes from three attempts. With a few issues observed, mainly under high stress, stability was rated ‘Fair’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 10.1.079.000

Update versions: 8.1.8.79/1078935552, 8.1.8.79/1081097984, 8.1.8.79/1081424032

Similar in most respects to its ‘Extreme’ sibling, the ZoneAlarm suite held few surprises, with the small downloader fetching the full 343MB main package. The set-up process ran through the same set of steps, although at one point an error message appeared (complete with entirely unhelpful error code). Updates took around 15 minutes on average.

The interface is fairly angular and wordy, prone to occasional wobbliness, and again some high-stress work required multiple attempts after freezing up or failing to complete properly. Scanning speeds were also rather slow, although noticeably faster in the media and documents set. Overheads were fairly average, and low RAM use was countered by high consumption of CPU cycles, while our set of tasks completed in good time. Detection rates were very similar indeed, showing some splendid scores just about everywhere. This solidity of detection (if not of interface) extended to the core certification sets, with just a few warnings of possible hacker tools in the clean sets, and a VB100 award is duly earned.

With no history of entering multiple products, we can only share the single track previously reported – one pass in the last six tests; three passes from three attempts in the last two years. Stability was hit by a few issues, the more significant ones at least only occurring under unusually high stress, but we would rate it as no more than ‘Fair’, verging on ‘Buggy’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 3.0.70

Update versions: 14.1.218, 14.1.267, 14.1.278, 14.1.283

Another of this month’s glut of Preventon/VirusBuster offerings, Clearsight has gone for a slightly newer iteration of the product with a number of additions. The submission only provided us a ‘Pro’ version however, which meant that many of the extras, such as behavioural monitoring and web filtering, were unavailable. There was little change in the set-up process, with the 89MB installer running through standard steps and completing in good time; updates averaged only six minutes or so.

Opening the interface showed us an initial bug, with the desktop icon not responding. Opening via the system tray did work though, and from then on the desktop icon seemed to come alive as well. On making the usual registry changes to fix the log capping, a reboot was attempted to apply the changes, but the only effect of clicking the restart button was to shut down the product interface. A second reboot attempt restarted the machine.

Speed tests showed similar results to others in the range, with decent scanning speeds and reasonable overheads, average resource use and average impact on our set of tasks. Detection rates were a little harder to gather, with an initial run through the first part of the clean sets and the initial version of the Response sets apparently zipping through in record time. A closer look at the logs showed that a nasty file had tripped something up somewhere, and all subsequent files were flagged with an engine error. Checking the system, it appeared that the on-access protection was disabled, although the interface insisted it was operational. A similar issue has been noted with this product line in previous tests, where a file apparently locked up the engine in such a way that it failed to ‘open’. A reboot was needed to fix things, and the Response set was re-run from where it had fallen over.

Eventually, we could see detection rates just as expected – fairly reasonable in most areas, dropping off sharply into the proactive part of the RAP sets. The core sets were handled well though, and a VB100 award is earned. The product has notched up five passes from five entries in the last six tests; six passes and one fail in the last two years. This month’s performance rates as distinctly ‘Buggy’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.93%

False positives: 0

Product version: 5.1.15

Update versions: 5.3.9/201202151549, 5.3.9/201203191034, 5.3.9/201203221633, 5.3.9/201203261124

Commtouch has been having something of a rocky time in our comparatives of late, with bad luck following it from test to test – presumably its developers will be hoping for a change of fortune on this occasion. The current version came as a compact 13MB installer, with an offline update bundle of 28MB. The set-up process was very fast and simple, taking not much more than a minute with no need to reboot, and initial online updates averaged 20 minutes.

The product interface is fairly simplistic and minimal, with little opportunity for getting lost or making mistakes. Fine-tuning opportunities are rather limited, but the options that are provided are clear, sensible and responsive. In the past we’ve had some issues with exporting of the jumbo-sized logs we often generate, but these seem to have been fixed, and even under the heaviest of stress there was no sign of wobbliness.

Scanning speeds were not bad, but our on-access runs over the same sample sets took an enormous amount of time – in some cases more than double the baseline measures. The same effect showed in our set of standard tasks too, which took an age, with fairly high CPU use throughout, although RAM use was not excessive.

Detection rates were quite impressive in the Response sets, dropping off only slightly in the last day, but were fairly mediocre in the RAPs, tailing off steeply into Week +1 – implying that stronger detection is in place when cloud access is available. The fact that the RAP set scan took an epic 4,475 minutes (a little over three full days – some products completed it in under two hours this month) supports this theory, with the extra time put down to failed look-ups.

The core sets were handled well, with no signs of false alarms or misses in the WildList sets, and a VB100 award is well deserved. This hopefully marks the dawn of a new era for Commtouch, which now has two passes and three fails from five attempts in the last six tests; four passes and four fails in the last two years. Stability was rated ‘Solid’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 1.005.0006

Update versions: 21105, 21132, 21240

Once again, multi-engine behemoth Coranti submitted a brace of products, the first being the vendor’s mainstream offering straight from its Japanese HQ. Thanks to a mix-up at submission time, the lab was not informed that it would need to be installed and updated on the deadline day (indeed, the submission arrived too late to fit this work in anyway). After much head-scratching, we concluded that the smallish 53MB install package contained no detection data and would need to be excluded from the RAP tests. Attempts to install without online access led to some distinctly odd behaviour, including the product at one point insisting that the system clock was inaccurate and needed changing. The time was changed by a few hours, and initially we assumed this was to sync the systems with the company’s Japanese base – it was not until much later that we noticed the date had been set, quite bizarrely, to 1928.

Later installs ran through a fairly simple process, but once installed, online updates ran for some time, averaging close to two hours. This is doubtless thanks to the many engines contained within the product, each of which needs its own set of data, and perhaps in part due to the distance between our test lab and the company’s home market region.

The interface is wordy, but pleasantly laid out and simple to operate, with a good level of controls in most areas. It seemed to run solidly and reliably through the bulk of our testing, with some decent scanning speeds helped by smart caching in the warm runs. On-access and performance measures proved more difficult to gather, however, as the standard set of scripts we use (most of which are fairly rudimentary batch files) threw up clusters of errors and failed to produce data for chunks of the tests. No explanation could be found for this, as in most cases identical jobs which should have run multiple times were blocked on some occasions, but not on others. Re-running the tests on several fresh installs, on different hardware with fresh copies of both scripts and sample sets, produced similar, if not identical results. Thus, both on-access overheads and performance scores were estimated based on what data was available, and may be less accurate than we would like.

Detection tests (RAPs excluded) were much less fraught with difficulty though, and some splendid scores were seen in the Response sets, tipping only slightly downward in the later days. The WildList sets caused no problems and with no mistakes in the clean sets either, Coranti’s mainline product earns itself a VB100 award, having given us a few minor headaches.

The product’s history shows three passes and one fail from four entries in the last six tests; five passes and three fails in the last two years. Stability in the product itself seemed good, but the issues with our testing scripts – which were clearly caused by something the product was doing, with no information given as to why the system was not working as expected – must be counted as a bug, giving it only a ‘Fair’ rating for stability.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 2.003.00013

Update versions: 21105, 21132, 21240

Coranti’s second offering, Cora, comes from its Ukrainian office, and some of the splash screens and other displays contain Cyrillic characters alongside Roman ones. Other than that, it seemed pretty similar in most regards. The installer was even smaller this time, measuring just 26MB, and the set-up process was once again unable to operate properly without running an online update (again we noted an issue with resetting the date, this time picking the year 2057). The update took an age to complete, downloading around 250MB of data and taking an average of over two hours to do so.

The interface is again wordy but usable, and scanning speeds were decent, becoming super-fast in the warm runs. A difference between the sibling products emerged in the on-access and performance tests though – on this occasion they all ran through unimpeded, producing a full set of results without complaint. Some pretty light overheads were seen, and reasonable resource consumption, with a good rate getting through our set of tasks. In fact, results fairly closely mirrored the figures we had pulled together for the 2012 product, so perhaps filtering out the chunks of failed results did not cause too much inaccuracy for the 2012 version after all.

Detection rates were pretty similar: very solid throughout the Response sets with only a slight downturn in the later days, and the core sets were dealt with well, earning Cora another VB100 award. That makes it three passes from three attempts in the last six tests, the product’s first appearance coming last autumn. With no issues to report other than the extreme download time and the oddities when installing offline, the product is rated ‘Stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 2012 (3734.575.1669)

Update versions: 15/02/2012, 18/03/2012, 21/03/2012, 27/03/2012

Number five of 17, Defenx is an evolution of Agnitum’s Outpost suite, providing Agnitum’s firewall alongside VirusBuster’s malware engine, with a few additions of its own. The user experience closely mirrors that of Outpost, with the set-up process from the 114MB installer (provided pre-updated) running through a fair number of stages and taking quite some time. Updates ran smoothly and reliably, but again were fairly slow, averaging 20 minutes for the initial runs.

The interface is clear and welcoming without being overly fluffy, and is clearly laid out with a good basic set of controls that are easy to find and adjust. Operation was stable and reliable, with no noticeable problems, and tests proceeded nicely. Scanning speeds were reasonable, aided by speed-ups in the warm runs, and on-access overheads likewise sped up nicely from a decent starting point. RAM use was a little high, and as with Agnitum’s product, CPU use and impact on our set of tasks were both very high.

Detection rates were no more than reasonable in the Response sets, and tailed off quite steeply in the RAPs, but the certification sets were properly dealt with and Defenx earns a VB100 award. The product’s history is solid, with five passes from five entries in the last six tests; ten from ten in the last two years. In terms of stability, the product is rated ‘Solid’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 3.0.70

Update versions: 14.1.218, 14.1.267, 14.1.278, 14.1.283

Another from the Preventon/VirusBuster stable, Digital Defender is one of the older names on the list, and in this test it appears in both Premium and Pro versions. Differences between the two are minimal though: they use the same installer, and the additional components provided in the Premium edition are activated by the application of a licence key. The set-up, from the 89MB install package, ran through quickly and smoothly, and updates seemed reliable, taking around seven minutes on average.

As with previous products using the newer variant of Preventon’s GUI, some oddities were noticed when opening the interface for the first time, and also when trying to reboot the system. In this case, with several extra defensive layers enabled, we noticed some further issues, with several initial scan attempts freezing up completely. Carefully picking through the changes revealed that the ‘Safety Guard’ component was at fault – its main purpose seems to be to check detections in the cloud to minimize false positives, but something was clearly not right with it. Once this component was disabled, things seemed to run fine – apart from the scan engine being snarled up nastily by a single file in the first round of Response sets, leaving it incapable of scanning any further files until the system was rebooted.

We also noted, when running on-demand speed tests, that scans of the C: partition frequently froze up even without the ‘Safety Guard’ enabled. Attempts to scan other areas occasionally resulted in the dead C: scan reviving, snagging up as before in the same spot. To complicate matters further, the disabled component also frequently reactivated after reboot.

We eventually managed to complete all our tests, the results showing scanning speeds around average and overheads perhaps a little on the high side. Resource use and impact on our set of tasks were similarly standard. Detection rates were mediocre in the RAP sets, with a sharp decline in the proactive week. They were a little better in the Response sets, but again declined slightly towards the more recent sets. The certification sets were handled properly though, and a VB100 award is granted.

Digital Defender’s recent test history is decent, with five passes from five entries in the last six tests (previous ones all being for the Pro product only). Longer term, things are less impressive, with six passes and four fails in the last two years. This month’s performance showed a number of stability issues, some of them fairly worrying, putting the product on the outer edge of ‘Buggy’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.93%

False positives: 0

Product version: 3.0.70

Update versions: 14.1.218, 14.1.267, 14.1.278, 14.1.283

This is the same product with a different licence key and some features disabled, and the set-up process was unsurprisingly much the same, with updates again taking seven minutes or so on average. The main feature expected to make a difference between the two in static detection tests had been disabled in the Premium version for practical purposes, and is not included in this version as it falls outside the Pro protection level. Everything else was as similar as might be expected, with slightly fewer bugs thanks to the problem feature being switched off from the start.

The product showed mid-range speeds, overheads and resource drains, and mid-range detection rates. The VB100 requirements were met once more, but like its sibling, the product earned a stability rating in the ‘Buggy’ range.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.93%

False positives: 0

Product version: 5.0.1, Rule version 1630

Update versions: 1.1.2000, 1.1.2043, 1.1.2054, 1.1.2058

Blink is a bit out of the ordinary for our tests, with its creator, eEye, specializing in vulnerability monitoring and management. Indeed, the product is a little non-standard for the vendor too, and is listed on the company’s website under ‘Additional products’. It has become fairly familiar to us over several years of testing, though. The latest version came as a 220MB install package with updates included. The set-up process was reasonably simple, requiring online access to check licensing details – for which a lot of personal information is requested, including a postal address. As we have noted before with this product, updates were rather lengthy – routinely taking more than two hours, and fetching close to 200MB of data on each install.

The interface is clean and follows a fairly standard template, but is occasionally a little confusing to navigate, providing a limited set of controls. It seemed generally fairly stable and responsive though. Scanning speeds were slow in some areas, but reasonable in others, while overheads proved pretty heavy across the board. Our performance measures showed some good results though, with memory use below average, CPU use just a little higher, and our set of tasks running through in good time.

Detection was pretty good too, with decent scores in the Response sets – a little lower in the second half than the first – and a very impressive starting week in the RAP sets, curving fairly sharply downwards in the latter two weeks. The WildList and clean sets presented no difficulties, and a VB100 award is earned by eEye. The vendor has been doing fairly well of late, with four passes and a single fail in the last six tests; six passes and two fails in the last two years. Stability was good throughout, earning the product a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 6.0.0.57

Update versions: 5,288,487; 5,506,826; 5,565,809; 5,561,228

The product formerly known as ‘A-Squared’ returns once more, hoping to end its run of bad luck in our tests. The install package came fully updated, at 115MB, and set-up was very fast, completing in under a minute. Later online updates took an average of 14 minutes.

The interface is a little quirky, leaving one searching for ‘back’ or ‘home’ buttons quite regularly, and some of the language is a little unusual too, but in general it makes a reasonable degree of sense, providing a limited set of controls. There were a few issues with larger scans once again, with jobs freezing near the end and on one occasion simply disappearing without trace, but these only occurred under heavy stress.

Scanning speeds were not bad, and overheads a little heavy in some runs, but better in others. RAM use barely registered, with CPU use also fairly low, but our set of tasks did take a little while longer than usual to complete. Detection scores were excellent as usual, with good scores throughout the Response sets and RAP scores which started very strongly and didn’t fade away too sharply. However, a handful of items in the Extended WildList set were not picked up, and in the clean set a single item – a component of some reporting software from Microsoft – was labelled as a Lolbot trojan. As a result, Emsisoft once again misses out on certification by a whisker.

Recent tests haven’t been kind to Emsisoft, with five fails from five attempts in the last six tests; in the last two years, the vendor has managed two passes, alongside seven fails. Stability seemed reasonable, and fine for use under everyday circumstances, earning the product a ‘Stable’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 99.67%

ItW Extd (o/a): 99.67%

False positives: 1

Product version: 11.0.1139.1146

Update versions: NA

India’s eScan is one of our most regular participants, rarely missing a test, and it appears here once again with its latest suite version. The install package was a large 186MB, with the installation process running along standard lines – although it did point out, after running an initial ‘quick scan’, that some erroneous registry entries had been spotted and fixed. The whole process took around three minutes, with initial updates taking an extra 15 minutes on average.

No problems were spotted during testing, and speed measures were reasonable, speeding up a little in the warm runs. Overheads were pretty light, with resource use on the lighter side of average, and there was a fairly low impact on our set of tasks. Detection rates, aided by the Bitdefender engine included in the product, were excellent, showing only the slightest downward trend in the Response sets and not too steep a decline in the RAPs. With the core sets also dealt with easily, eScan earns another VB100 award.

The company has a strong record, with a perfect six in the last six tests; ten passes and two fails in the last two years. Stability this month was sound, earning the product a comfortable ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 5.0.95.5

Update versions: 6886, 6886, 6986, 6886

Another product almost guaranteed to appear in every comparative, and equally likely to perform well, ESET’s NOD32 was provided this month as a slimline 55MB installer, including updates. It set up in a little over a minute, the process enlivened as always by the company’s unusual approach to the thorny ‘Potentially Unwanted’ issue – forcing users to make a clear decision as to whether or not to point out such items. Online updates were impressively speedy, taking less than five minutes, but activation proved a little fiddly on occasion, with one attempt to start a trial simply disappearing part-way through, without an error message or any other explanation.

The interface is attractive and stylish, providing a broad range of controls in a generally usable style, although in places it does seem a little repetitive. As usual, we were unable to fathom the settings to open archives on access, and despite our best efforts they went unexamined.

During testing, we also observed a brace of blue-screens – the only ones observed during the whole testing period. These both occurred at seemingly innocuous moments: the first when attempting to paste a screenshot of the product interface into Paint for our records. The second, on a different install, happened after running the initial on-access check of our archive set – 100 or so archives containing the EICAR test file – with only the unarchived samples detected. Having run the test, we tried to open the opener tool’s csv log file in Notepad, and there we were again, rebooting unexpectedly.

Scanning speeds were very impressive though – almost getting back to their old form of five years ago – and overheads were featherlight too, barely registering in some areas. RAM and CPU use were fairly average, but our set of tasks blasted through, taking not much longer than our baseline measures.

Detection rates were oddly disappointing in the Response sets, but pretty decent in the RAPs, tailing off not too steeply. The core sets were handled splendidly, with a cluster of adware and toolbar warnings in the clean sets, all of which appeared to be justified. ESET thus earns a VB100 award to add to its ongoing epic record: 12 out of 12 in the last two years and stretching back way further than that.

On putting this report together and noting down the version information for each run from screenshots taken at the time, it became clear that some of the updates had not completed properly – although the interface reported a successful update (even recording the time of the last update run as just moments earlier), the actual data in use remained old. This happened on two of the three main test runs, and explains the unexpectedly low scores in the Response sets. On top of the two blue screens, this tips the product’s stability rating into ‘Buggy’ territory.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: V7 r3

Update versions: 14.251.43669, 15.38.49880, 15.44.15917, 15.50.54570

Filseclab is the perennial battler, bravely entering our tests month after month with little reward, but edging ever closer to certification standard. The current version came as a 130MB main installer with 112MB of updates, in an executable bundle openly available on the company’s website. Main set-up stages seemed minimal and ran smoothly, but later update attempts were less reliable, with several attempts failing and needing to be re-run. The actual download time for successful updates was only five to six minutes, but some extra must be added to account for only one in three attempts actually succeeding.

The interface is little changed since we first saw it – slightly out of the ordinary, but fairly simple to figure out and navigate, with a decent if not very thorough set of controls. These were not necessarily reliable: options were provided to extend the depth of archive scanning on access, but they appeared to have no effect. We were also unable to make the product scan non-standard file extensions on access, thus limiting our set of speed measures in this mode.

Scanning speeds were a little slow on demand, and overheads fairly high on access, in the areas in which they could be properly measured. Performance measures showed nothing too extraordinary though, with resource use unexceptional, and impact on our set of activities fairly reasonable. Detection rates were pretty decent, with good levels in the Response sets and a good start to the RAPs too, tailing off fairly sharply in the latter weeks. Results in the certification sets proved disappointing once again however, with a number of misses in the WildList sets and quite a few false alarms in the clean sets, including items from major players such as IBM (one of whose packages apparently contained the EICAR test file), SAP and Sun, alongside popular tools such as WinZip and VLC.

No VB100 award can thus be granted to Filseclab, which now has two fails in the last six tests; five fails in the last two years. Stability was generally good though, with issues in the updating process the only ones noted, thus earning the product a ‘Stable’ rating.

ItW Std: 97.48%

ItW Std (o/a): 97.48%

ItW Extd: 95.17%

ItW Extd (o/a): 93.32%

False positives: 37

Product version: 4.1.3.145

Update versions: 4.3.392/15.215, 4.3.392/15.320, 4.3.392/15.344, 4.3.392/15.357

Fortinet routinely competes for the title of smallest main install package, and must be well up there this month with a tiny 10MB offering. Offline updates were fairly hefty though, at 135MB, and after a very rapid, simple install (which took less than a minute with no need to reboot), online updates were fairly lengthy. In most cases the initial download apparently took only seven or eight minutes, but after this, an additional period of up to half an hour was needed to ‘process’ the update.

The product interface is efficient and businesslike, providing an excellent set of controls in a lucid and logical manner, and testing was a pleasant process, free from shocks or surprises. Scanning speeds were decent, pretty good over some types of files, while overheads were a little high. Resource use was on the high side – particularly CPU consumption – and our set of activities was impacted fairly heavily. Detection rates were impressive though, with some good scores in the Response sets and a good showing in the RAP sets too, dropping away only a little into the latter weeks.

The core test sets presented no problems, and VB100 certification is comfortably earned, leaving Fortinet with five passes in the last six tests (the annual Linux test being skipped); nine passes and a single fail in the last two years. Stability was excellent, comfortably earning a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 6.0.9.6

Update versions: 4.6.5

Frisk’s F-PROT seems not to have changed at all in many years, and the current build promised few surprises. The 36MB installer ran through very speedily, and the 29MB update bundle was dropped manually into place. A reboot is demanded to complete the set-up process. Online updates seemed reliable and simple, averaging just under six minutes, and the interface is basic and minimalist, with only a handful of options and nothing to confuse even the most inexpert user.

Most tests ran without issues, showing some reasonable scanning speeds but fairly hefty on-access lag times, with RAM use on the low side, CPU use around average, and our set of tasks running through surprisingly quickly. Detection rates were pretty mediocre, but respectable in most areas.

Running large scans was, as ever, fraught with minor issues, with most jobs crashing out at least once, but restarting from where things had left off was simple enough and tests did not take too long to get finished. The core certification sets were handled well, and with no false alarms or important misses F-PROT earns a VB100 award. It now has five passes and one fail in the last six tests; nine passes and three fails in the last two years. The only problems noted were large scans of infected sets crashing out, with no issues in more usual ‘everyday’ use, thus a ‘Stable’ rating seems appropriate.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 99.74%

ItW Extd (o/a): 98.54%

False positives: 0

Product version: 9.20 build 274

Update versions: NA

F-Secure often submits two fairly similar products for our tests, resulting in a rather complex history on our website, but this month only one was entered. It came as a 64MB installer with a 145MB updater executable. The set-up was fairly fast and simple, needing a reboot to complete. Online updates were mostly reliable, but took up to 20 minutes for initial runs to complete. On occasion it seemed to be stuck in a loop, with progress bars hitting 100% multiple times and the process apparently restarting immediately.

Most tests ran smoothly, with speeds impressive to start with and powering through in the warm runs, while lag times were mostly decent, resource use low and some impact noted on our set of tasks. The first two parts of the test programme ran smoothly, although one large scan did impose a heavy weight on the system, using up 555MB of RAM and 86% of CPU time, but it completed without issues and the machine remained reasonably responsive throughout.

On the third run, however, everything went completely haywire. When running through the WildList sets on access, protection seemed to switch on and off at random, and even with several runs, no consensus could be reached on which files should be blocked and which ignored. On-demand work was similarly troublesome, with scans freezing, vanishing without trace, or claiming completion but producing no log data and reporting far fewer items scanned than were actually present. Multiple reinstalls, on almost all of our test systems over a period of more than two weeks, repeatedly brought similar experiences, and eventually we had no choice but to abandon the entire job.

All detection results reported thus only cover the first two of the usual three runs; there at least we did see some solid work, with high rates in the Response sets declining very gently through the days and the RAP sets looking excellent, although dropping off considerably in the proactive week. In the WildList sets, even ignoring the disastrous final run, a few items appeared to be missed in both sets, and while no false alarms were noted in the first two-thirds of the clean set, no results could be obtained for the final portion which contained most of the most recent additions. No VB100 award can be granted this month, but we have been informed that F-Secure’s developers have investigated and fixed the issues we reported. Until this month, the product had managed to record a pass with one or other of its products in every test (except those on Linux platforms) over the last two years. Although most problems occurred in high-stress work involving multiple malware samples, this month’s showing could only be rated as ‘Flaky’.

ItW Std: 100.00%

ItW Std (o/a): 98.95%

ItW Extd: 100.00%

ItW Extd (o/a): 97.25%

False positives: ??

Product version: 23.0.0.19

Update versions: NA

G Data’s product routinely vies for the title of biggest installer, and things looked promising this month with a jumbo 353MB install package submitted. Set-up is uncomplicated though, taking little more than a minute to run through the standard steps, requesting a reboot at the end. Online updates averaged 20 minutes.

Scanning speeds started off fairly decent, and most jobs sped up to under a second in the warm runs, while lag times on access were a little on the high side but showed some signs of improvement in warm measures too. Resource use was a little above average but far from excessive, and our set of tasks completed in good time. Detection rates, as ever, were remarkable, with very little missed in the Response sets or the reactive weeks of the RAP sets – even the proactive week handled pretty impressively. The core sets were dealt with admirably too, and a VB100 award is easily earned by G Data, whose history shows a stable pattern of four passes and one fail in the last six tests; eight passes and two fails in the last two years, with only the Linux tests not entered. No problems were noted during testing, and a ‘Solid’ rating is duly earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 5.0.5134

Update versions: 11549, 11671, 11693, 11717

GFI’s VIPRE has a history of annoying the lab team with odd quirks and stability problems, but has been making some great strides of late, just in time to be rated under our new system. The current version has a tiny 11MB installer, and we also fetched 80MB of updates for use offline in the RAP tests. Set-up was very quick and easy, accompanied by an information slide show, and updates seemed effective too, initial runs averaging around eight minutes.

The interface is a little different from most, for some reason ignoring standard approaches and going its own way. For the most part only minimal configuration is possible, and some of it is less than clear at first glance. Operation seemed fairly stable though, with none of the issues under high stress noted in past comparatives, although our cautious approach – instinctively running each job in small chunks rather than single large runs – may have helped with this.

Scanning speeds were a little slow in most areas, but overheads were fairly acceptable, and RAM use was low. CPU use was around average, and impact on our set of tasks was pretty low. Detection rates proved excellent, rivalling the very best in the bulk of the Response sets and only dropping off a little in the most recent day, with a similar pattern in the RAP sets: excellent in the older weeks and still decent in the latter ones. The core sets presented no issues, and a VB100 award is comfortably earned.

GFI’s history shows four passes and one fail in the last six tests; seven passes and one fail in the last two years. With no stability issues to report (somewhat to our surprise), a ‘Solid’ stability rating is merited.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 2.0.125

Update versions: 2.0.127

Ikarus is another victim of a recent run of bad luck. Its current product was, as usual, provided as an ISO image of a full install CD, measuring 209MB but including much else besides the basic product installer (such as a redistributable version of Microsoft’s .NET framework, which needed to be installed before the main product could be set up). This was all done automatically, but added several minutes to the total runtime, which was around five minutes in the end. Updates were performed offline from a 72MB bundle for the RAP sets, and online for the other parts of the test, initial runs averaging 18 minutes.

The interface leans heavily on .NET, and is thus rather ugly and clunky, but these days at least it is generally responsive and usable. Options are pretty limited, but those that are provided seemed usable and reasonably easy to find.

Scanning speeds were a little slow, and on-access overheads very heavy indeed, with RAM use a little above average and CPU use pretty high; our set of tasks didn’t take too long to complete though.

Detection rates were pretty good – very high indeed in the Response sets and similarly stellar in the first half of the RAP sets, dropping a little into the proactive week, as we would expect. The WildList sets were well covered, and in the clean sets for once no false alarms emerged, earning Ikarus a VB100 award after a lengthy spell of failures. The vendor now stands on one pass and three fails in the last six tests; three passes and four fails in the last two years. We noticed a little wobbliness in the interface when running large scans, but no issues in everyday use, thus rating it ‘Stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 4.2.4

Update versions: NA

Iolo continues to submit to our tests, but despite over a year of pleading, its developers have so far been unable to provide us with any details on how to decrypt its awkward log format – which can be displayed well in the product interface, but is all but impossible to handle offline. The product itself came as a 450KB download tool, which as usual was set up and updated online on the deadline day, no facility being in place for offline updating. Fetching the initial installer took around six minutes, and from there on standard steps were run through fairly speedily, taking another two minutes or so before requesting a reboot to complete. Online updates were then needed, taking six minutes on average.

The product interface is slick and professional, and provides a reasonable level of controls in a fairly usable manner, although one common item – the option to run scans from the Explorer context menu – was notably absent. It seemed fairly stable, with no issues observed running through the tests. Scanning speeds were reasonable, but on-access overheads were very heavy, and despite low RAM use, CPU consumption was very high and there was a significant impact on our set of standard activities.

Detection rates were fairly mediocre across the board, as far as we could ascertain from the gnarly logs. On access, our own logging system recorded a 100% block rate through the WildList sets, and this was confirmed from our reading of log data, but either on-demand logging was less easily deciphered or there were a fair number of misses. Thus, despite no apparent false alarms in the clean sets, Iolo cannot be granted a VB100 award. For mostly the same reasons, the vendor’s test history now shows one pass and three fails in the last six tests; two passes and four fails in the last two years. Stability was ‘Solid’ though.

ItW Std: 99.37%

ItW Std (o/a): 100.00%

ItW Extd: 98.61%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 11.1.0072

Update versions: 9.130.6177, 9.134.6437, 9.135.6532

K7 has been enjoying something of a golden spell of late, despite rather sporadic entries, and its latest version was submitted as an 81MB installer with 85MB of updates. It zipped through the set-up process in very good time, the whole thing completing with just a couple of clicks in a little over 30 seconds, with no need for a reboot. Updates were similarly speedy, averaging only two minutes for the initial runs.

The interface is bright to the point of gaudiness, and a little on the wordy side, but it is reasonably easy to find one’s way around and does provide a thorough level of controls. It behaved well throughout testing, remaining responsive even under heavy pressure. Scanning speeds were not the fastest, but were decent nevertheless, and overheads were very light indeed, at least in the warm runs. RAM use was around average, while CPU use was barely noticeable, and our set of tasks ran through in good time.

Detection results were decent – not challenging the leaders this month, but more than respectable, and the core certification sets were handled well, earning K7 another VB100 award. That puts the vendor on two passes from two attempts in the last six tests; five from five in the last two years. The product showed no stability issues, earning a ‘Solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 8.1.0.646

Update versions: NA

Kaspersky once again submitted both business and consumer products for testing, with the corporate version up first. It came as a fairly hefty 248MB installer, with offline updates fetched using a special tool which builds a local mirror of update server content. The set-up process ran through quite a few steps, including building a list of trusted applications on the local system, but took no more than two minutes. Initial online updates averaged around 12 minutes.

The interface is glossy and glitzy, with several unusual touches regarding how it operates, but with a little practice and exploration it soon becomes highly usable, and provides an impeccable range of fine-tuning options. It ran through the tests well, with only one issue noted: in the on-demand speed tests, most sets were dealt with fairly slowly at first, speeding up massively for the warm runs, but in the set of miscellaneous files something seemed to snag somewhere, and each attempt to run the job was aborted after the maximum permitted time of 30 minutes (most others this month took no more than two minutes to complete this job).

Other tests were problem-free though, and on-access lag times were low, with average RAM use, CPU use a little high and a fairly big impact on our set of activities. Detection rates were splendid, extremely thorough just about everywhere, with even the proactive week of the RAP sets showing a very respectable score. The WildList sets were dealt with flawlessly, and in the clean sets we only saw a few, entirely accurate alerts on potential hacker tools. A VB100 award is thus earned without trouble.

The test history for Kaspersky’s business line shows a rather rocky road of late, with three passes, two fails and a rather historic no-entry in the last six tests; eight passes and three fails in the last two years. The only issue was the freezing speed scan, and as this occurred on normal, clean files it is judged more significant than similar problems under high stress; still, a ‘Stable’ rating is granted.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 12.0.0.374

Update versions: NA

The second product from Kaspersky this month is pretty similar in most respects, using the same mirror of updates for the RAP tests, but the installer was noticeably smaller at just 78MB. After 30 seconds or so preparing to run, the actual process only required a couple of clicks (an option to display more advanced set-up controls was provided), and the whole thing took not much more than a minute, with no need to reboot. Updates took around 15 minutes on average for the initial run.

The interface looks much like the business product, with a little more colour. Once again, some of the buttons and controls are a little funky, giving us a few surprises and a little confusion at first, but after we’d settled in it all proved usable and fairly intuitive, with a splendid range of controls available.

Scanning speeds started fairly slow, with less sign of improvement in the warm runs than the business product showed, but the on-access lags, which were not too heavy from the off, did show considerable speed-ups. Resource use closely mirrored the business product, with low RAM use, fairly high CPU use and a fairly heavy impact on our set of tasks. Detection rates were again superb just about everywhere, with an excellent showing in the RAP sets. The core sets proved no problem, with just a few alerts on suspect items in the clean sets. A second VB100 award is thus earned by Kaspersky this month.

The consumer product line’s test history shows four passes and one fail from five entries in the last six tests; nine passes and two fails in the last two years. With one of the speed sets again causing repeated freezes during on-demand scans, the stability rating is no higher than ‘Stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Product version: 1.1.81

Update versions: 14.1.218, 14.1.267, 14.1.278, 14.1.283

Another from the Preventon/VirusBuster gang, Logic Ocean has a few entries under its belt already. The submission weighed in at just under 85MB – smaller than most of the others and hinting, encouragingly, that it was still using the older, simpler, and this month much more stable version of the interface. The smooth and speedy set-up was completed in good time, and updates took around nine minutes on average for the first runs. The GUI was indeed pleasingly familiar with no additional bells and whistles, providing a good basic set of controls in a clear and usable format, and it maintained reasonable stability throughout.

Scanning speeds were not bad, while on-access overheads were slightly high, and both resource use and impact on our set of tasks were fairly high. One oddity we noticed (on top of the usual disregard for action settings when running scans from the context menu) was a tendency to kick off unrequested scans of our set of archives (the first job done during our speed tests) each time a setting was adjusted.

Detection rates were rather dreary, as expected, but the core sets were handled well and a VB100 award is earned. Logic Ocean now has two passes from two entries in the last six tests; three from three entries in the last two years. With just a few minor bugs spotted this month, the product earns a ‘Stable’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.93%

False positives: 0

Product version: 5400.1158

Update versions: 6620.0000, 6649.0000, 6656.0000, 6662.0000

McAfee’s enterprise offering is one of a small band to have seen very few drastic changes in the last five years, and with a solid record of performance and reliability, it is always a welcome sight on the test bench. The installer is on the small side at 38MB, with 119MB of updates provided for offline use. The installation process is clear and straightforward, completing in little more than a minute. Updates ran smoothly, taking an average of eight minutes for the initial runs. The familiar interface is unflashy and plain, but provides a comprehensive set of controls which are simple to find and operate.