2012-08-01

Abstract

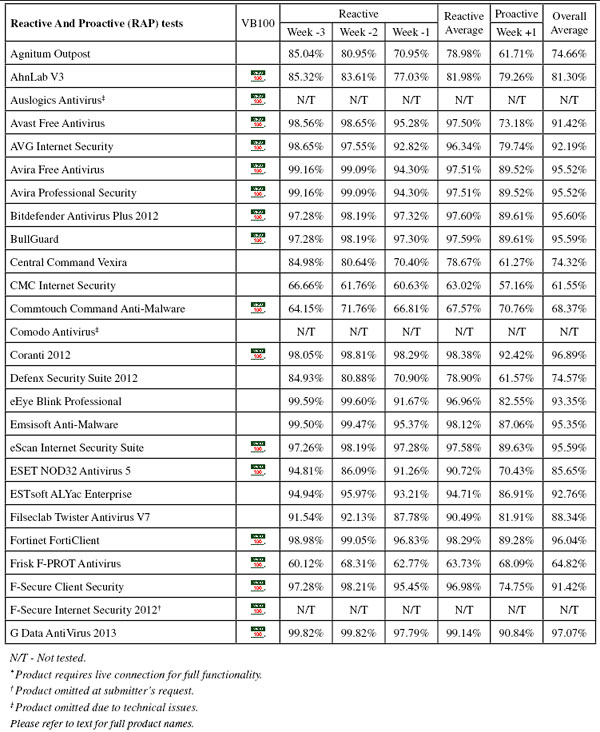

The latest VB100 test on Windows 7 generally saw better product stability than expected, although with a lower pass rate than the last test – one third of products failed to achieve VB100 certification this time. John Hawes has all the details.

Copyright © 2012 Virus Bulletin

A glance through the list of entrants for this desktop test shows a fairly familiar line-up. However, between the commencement of the test and the completion of this report there have been a number of significant happenings in the industry, with companies being bought out and taken over, and products that have lengthy pedigrees ceasing to exist. Many people have long predicted this sort of streamlining in the industry, but the general trend of late seems to have been towards diversification, with an ever wider range of products coming onto the market.

The resulting steady increase in the number of products participating in our comparatives continues to put a strain on our resources, and as of this test we have been forced to impose further limits on the number of products that can participate without charge. From now on, each vendor will be entitled to one place in the test at no cost, and additional products will only be included for a fee (which will be waived for those companies that contribute to our costs through the VB100 logo licensing scheme).

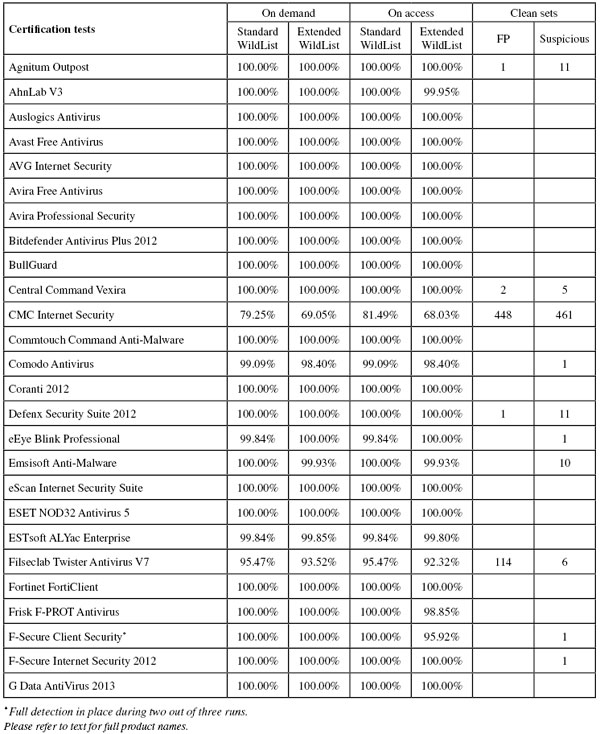

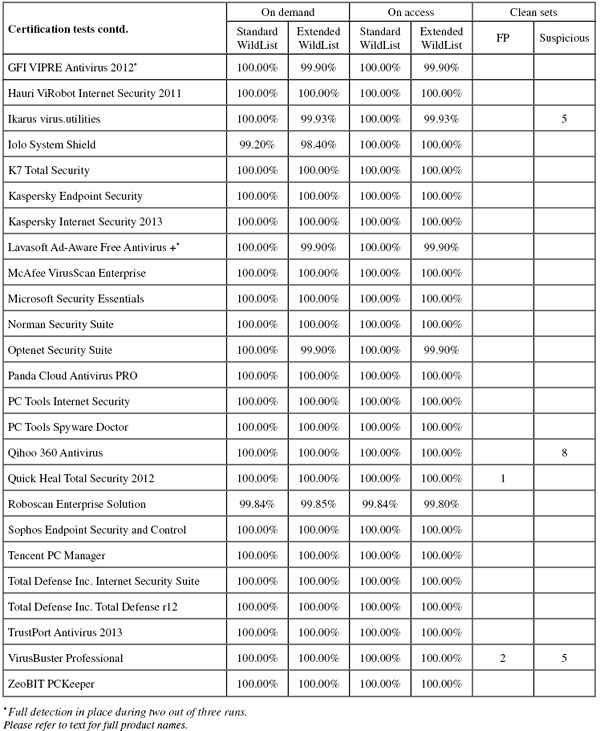

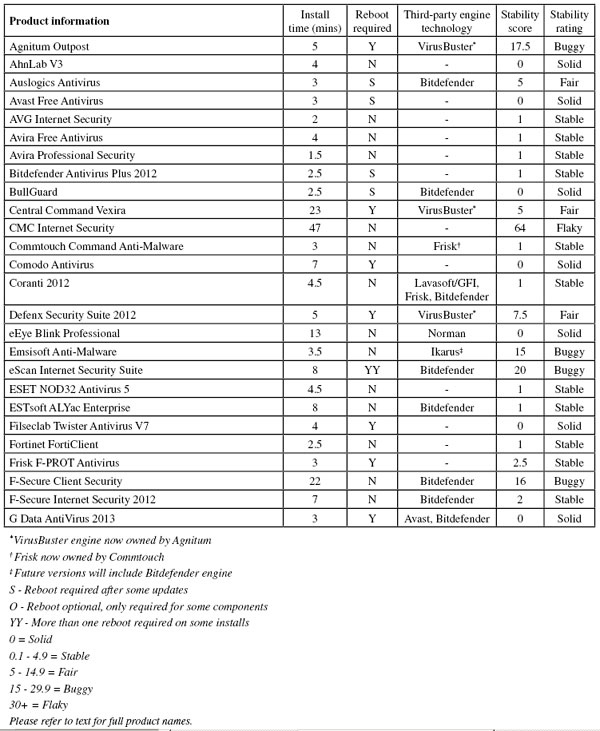

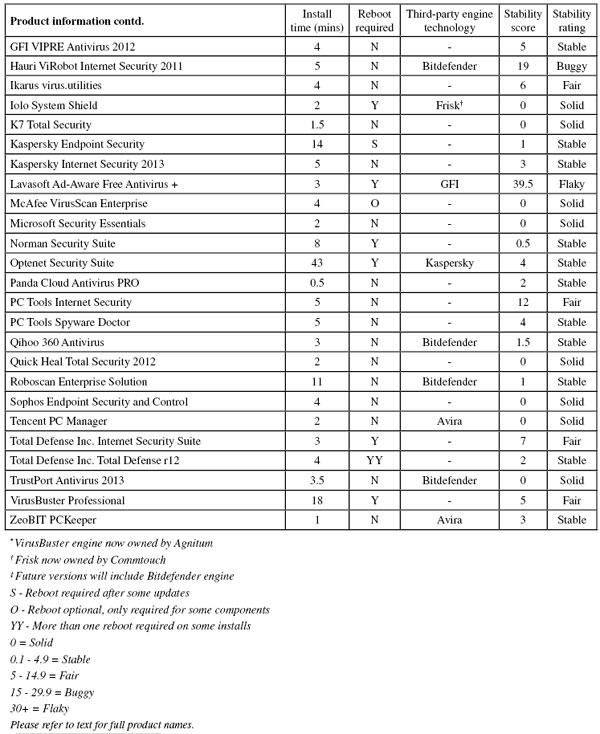

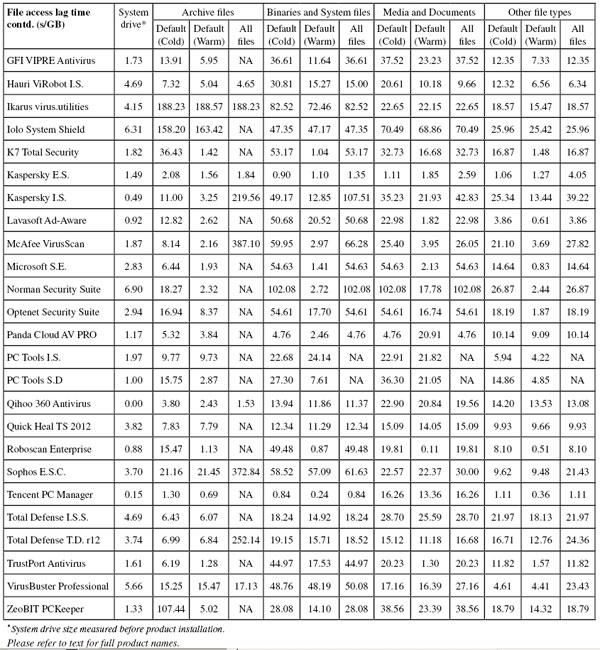

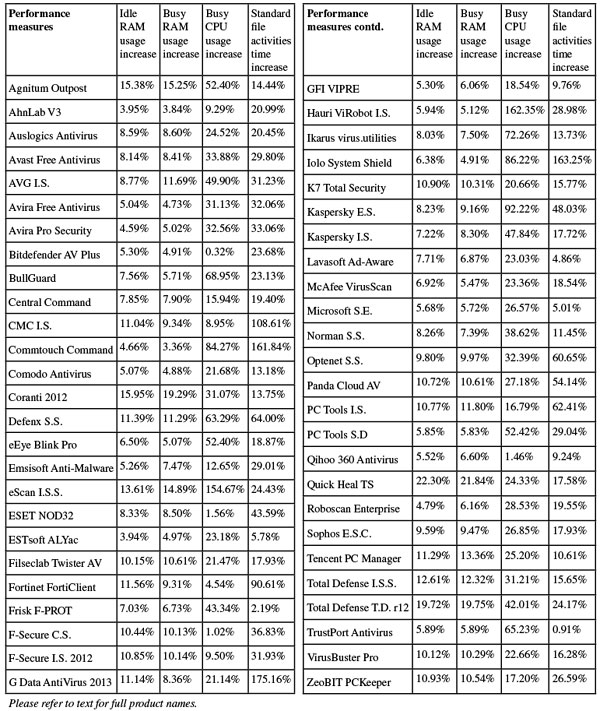

This report also sees the introduction of a formalized version of the stability measures which have been mentioned in recent tests. They are now covered in more detail in a new table – eventually we hope to include a basic level of reliability as part of the requirements for VB100 certification. The new table, with results of the stability scoring system, is supplemented by some additional details such as the time taken to install products, and whether the technology involved is ‘home-grown’ or licensed from a third party. We hope to expand further on this data in future tests; for full details, particularly of the stability rating system, please refer to the appendix to this article.

We return to Windows 7 for this test with the release of its intended successor, Windows 8, just around the corner. Replacing the rather poorly received Vista just three years ago, Windows 7 has done rather better and has just about managed to supplant Windows XP as the desktop of choice for most PC users. The platform has appeared in several tests already, and has generally generated very high levels of participation, although this month’s numbers were not quite as high as we initially anticipated. This was in large part due to the absence of a cluster of products based on a single implementation of one of the more popular engines, but once again we saw a wide range of OEM solutions. Continuing a trend observed last time, Bitdefender took the reins as the most widely deployed engine, featuring in at least 13 of the 50 or so products taking part.

Preparing the test systems proved fairly straightforward, with test images used in the last Windows 7 test unearthed and given a quick dusting down. The first and so far only service pack for Windows 7 was released over a year ago; no further updates were added to systems unless specifically requested by product installers.

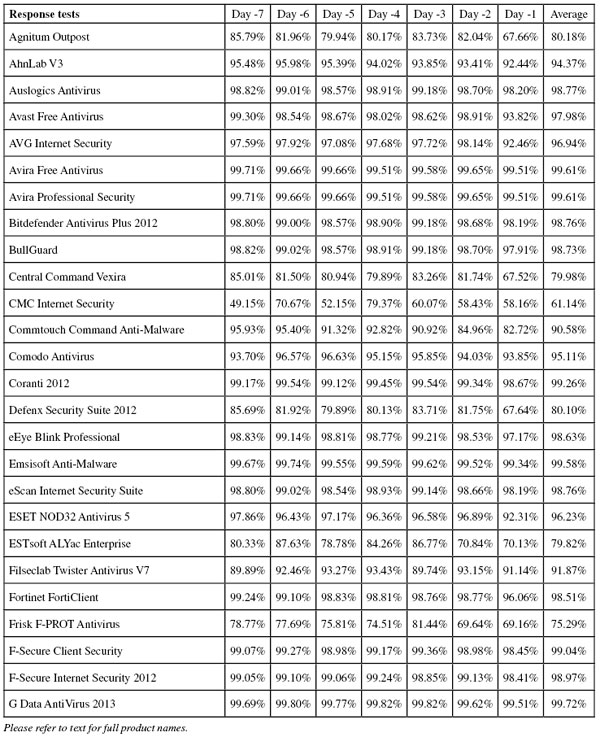

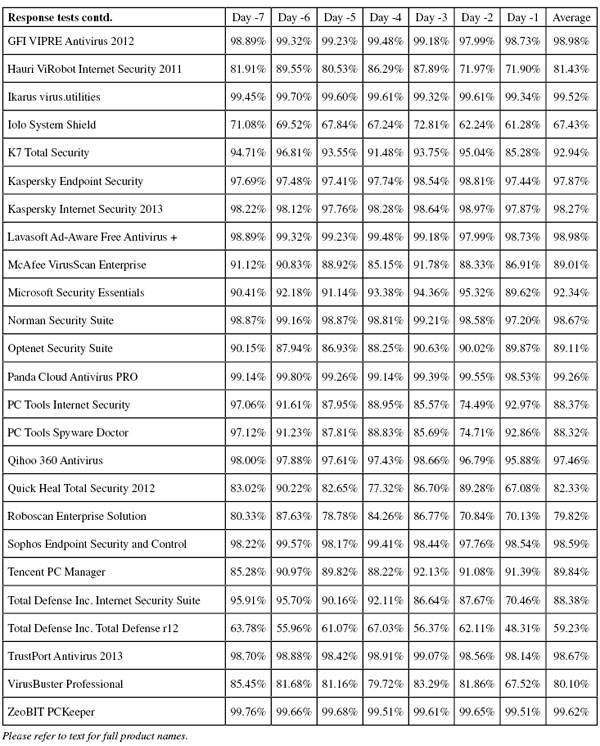

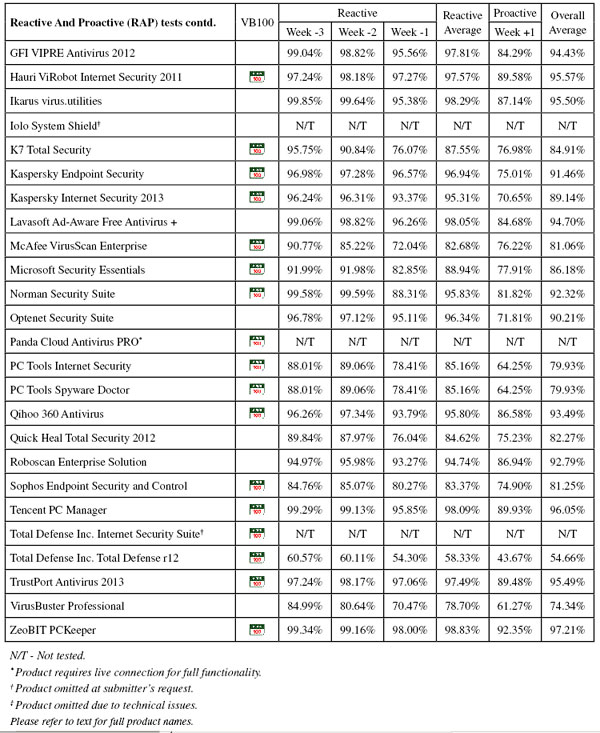

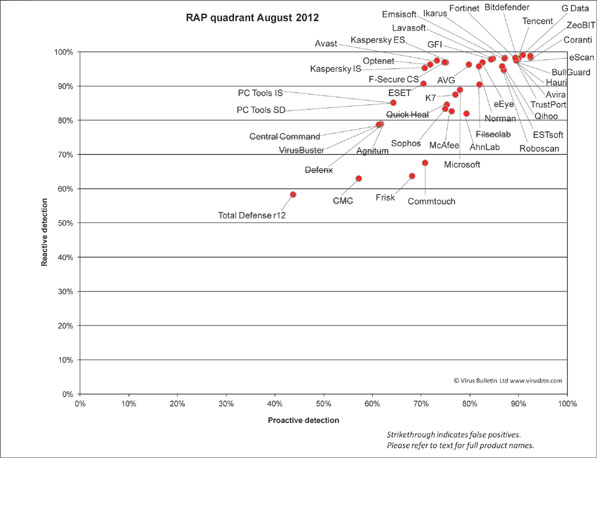

Test sets were deployed in the usual manner, with additions to the clean sets focusing on consumer software – several of the major download sites were scoured for the most popular and widely used items, and more items were added from magazine cover CDs and so on. So as not to neglect business users, the latest versions and updates of a number of common business productivity solutions were also added. The WildList sets were frozen on the test deadline of 20 June, which meant using the May list (released in early June). As usual, a number of revisions were made to the Extended WildList as items contained therein were challenged by participants, and further items were excluded if our own processes deemed them to be unsuitable. The RAP sets and Response sets were compiled using the same formula, from a selection of samples gathered daily; after final rounds of validation and analysis, the RAP sets ended up containing around 20,000 samples per week, while the Response sets averaged around 2,500 samples per day. Testing commenced on 1 July and, with a few interruptions for staff holidays and further work on the expansion of our lab space, was finished (as far as the certification components were concerned) by the end of the month, with the remaining performance measures and so on continuing into late August.

Main version: 7.5.2 (3939.603.1810)

Update versions: 20/6/2012, 10/7/2012, 18/7/2012, 23/7/2012

First up on this month’s list is one of the companies at the heart of one of the big industry shake-ups in recent months. Having long enjoyed a close relationship with VirusBuster, including using the company’s engine in the Outpost product, a month or so after submitting for this test Agnitum announced its plans to take over development of the VirusBuster engine, inheriting the source code and all intellectual property from the now defunct company. Thus the first of our tables detailing the source of third-party technology is rendered even more complicated than we had anticipated, as those products currently marked as containing the VirusBuster engine (including Agnitum’s own) will in future be marked as containing the Agnitum engine. For now, however, we will stick to the names of the products and technology as they were at the start of this test.

Outpost has been a regular in our tests for a while and has achieved a solid record, managing a streak of 12 consecutive passes. The product itself is well designed and usually pleasant to operate. The install package measured 115MB, including all required updates for the RAP tests, and took fewer than six clicks to install, although the process did take some time, much of it taken up with initialization processes for the firewall components. Updates were integrated into the overall process so it was hard to judge just how long the updating took, but the average install time was a reasonable six minutes, with a reboot required to complete the process on each run.

On one occasion there was a major problem with the installation, when the machine failed to return to life properly after the reboot. After getting past the login prompt to the ‘Welcome’ screen, no further progress could be made, with the cursor responding to mouse actions but no other functionality available; even control-alt-delete had no effect. A hard reset didn’t help, and with time pressing we opted to simply re-image the test system and try the install again. Fortunately, the problem did not recur, either then or during any subsequent installs. Nevertheless, as the issue appeared in normal use and caused major inconvenience, it is classed as a major bug and counts heavily against Agnitum’s stability score.

Another issue was observed during the on-access testing against malware samples, when the machine seemed to enter some kind of lock-down mode, meaning that we couldn’t check progress by opening log files in Notepad – error messages did not come from the product itself, but seemed to imply some problem with the operating system. Once the test job was complete, the system remained more or less unusable for around 15 minutes. This was a recurrent problem with all test runs, and although it only occurred during non-standard operations, when dealing with fairly large sets of malware samples, it adds further to the product’s stability woes.

Otherwise all ran fairly smoothly though, the product interface being clear and well laid-out with a basic level of fine-tuning available, logging to easily readable plain text, with a size cap in place which is adjustable within limits. Scanning speeds were a little sluggish initially but rapid in the warm runs; overheads were likewise on the high side at first but much lighter once the files had initially been checked out. Resource use was on the high side, but our set of tasks ran through in decent time. Detection scores were a little mediocre, as usual, with a fairly steep drop through the weeks of the RAP sets, and we can only hope that the changes in the ownership and operation of the engine will bring some improvements.

The changeover period may have had some impact on the clean set coverage too, with a rare false positive observed: an item from IBM was flagged as the Psyme worm. This means that Agnitum’s run of success in the VB100 tests comes to an end just as the vendor prepares for a new era, leaving it on four passes and one fail in the last six tests, with no entries in our annual Linux tests; nine passes and one fail in the last two years. The serious issue with one of the installs, plus the lock-ups during the on-access tests, places the product just inside the ‘buggy’ category in our stability rating system.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 1

Main version: 8.0.6.6 (Build 1197)

Update versions: 2012.06.20.01, 8.0.6.13 (Build 1239)/2012.07.10.00, 2012.07.16.05, 2012.07.21.00

AhnLab has been rather inconsistent in its entries of late, with its last appearance having been in the previous Windows 7 test in late 2011. The vendor returns in this test with much the same product as we remember from way back then. The current installer measures 208MB with latest updates included and runs through its business fairly rapidly, after a slightly worrying few moments of complete silence before it starts moving. Only a few clicks are required, with no need for a reboot at the end, and the entire process including online updates took under four minutes on average.

The interface is clean and clear, providing a reasonable degree of control, and it seemed responsive throughout testing, even under heavy pressure. Logs are displayed in a special viewer tool, which provides the option to save to text.

Scanning speeds were not too bad, and overheads perhaps just a little above average, with low use of resources; the impact on our set of tasks was noticeable, but not extravagant. Detection rates were reasonable, showing a relatively gentle decline through the RAP sets, and a similar slope in the Response sets from a decent starting point. With no problems in the core certification areas, a VB100 award is earned, giving AhnLab two passes and one fail from three attempts in the last six tests; four passes and three fails in the last two years. There were no bugs or stability issues noted, giving the product a ‘solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 99.95%

False positives: 0

Main version: 15.0.36

Update versions: 7.42872/7365118, 7.42915/7359988, 7.42961/7364417

Auslogics has a single previous entry under its belt, again in the previous Windows 7 test late last year. The product is a straight clone of Bitdefender’s current offering, with a few tweaks including the ‘Dragon Wolf’ that adorns Bitdefender’s install process having been changed to a simple ‘Black Dragon’ here. The set-up was run from a small downloader program, which fetched the latest build of the main installer. This initial download was very speedy, taking less than 15 seconds, and a standard set-up process then followed, including a zippy scan of vital areas and further downloading of components. The whole thing was done in under half a minute. Rather surprisingly, given the multiple download stages already witnessed, more updates were then required, but again these were very quick, taking only 20 seconds or so to fetch the 15MB of data requested.

On the initial installation, a quick check showed things were not quite right; no protection was in place and scanning appeared non-responsive. On digging further, we found a rather well-hidden message saying a reboot was needed to complete a stage, but even after several restarts we were unable to get things moving. An error message indicated that a key service was not running, but the Windows Event Viewer system claimed it was, and we were blocked from trying to restart it. Re-running the installer brought an offer to repair the installation, which ran through and claimed to have made some fixes, but still the product refused to work. Having wasted enough time on our busy deadline day, we aborted the job hoping to be able to fix it later, but sadly had no luck, meaning that the RAP tests could not be performed; we would assume that, had things worked out properly, scores would closely match those of the Bitdefender solution.

When we came back a few weeks later and retried the install from scratch on a different system, everything went smoothly, with no repeat of these problems over multiple reinstalls – it looks like on our initial attempts we were hit by a very unlucky one-off bug. For the rest of testing everything went very nicely indeed, with blazing fast scan times especially in the warm runs, very light overheads until the settings were turned up, fairly low resource use and a light impact on our set of tasks. The design is clear and pleasant, with a good level of configuration available, and responsiveness was good throughout. Logging is kept in a fairly readable XML format, with no apparent option to export to more human-friendly plain text.

Detection rates were really quite excellent, with consistently high scores throughout the Response sets, no issues at all in the WildList sets and no false positives either. Auslogics thus comfortably earns its second VB100 award. That gives the vendor two passes from two attempts, both in the last six tests. With the problems encountered in the first install not recurring, stability was rated as ‘fair’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 7.0.1445

Update versions: 120620-1, 7.0.1458/120709-0, 120716-0, 120719-2

The only blemish on Avast’s recent history is a fail in the last Windows 7 comparative. The product routinely elicits warm, affectionate smiles from the test team, with this month’s submission promising more of the same. The install package measured 86MB and included all data required for the RAP tests. Later, online updates averaged around 45 seconds, including the additional time taken to update the full product on some occasions; at these times a reboot was needed to complete things, but usually this was not necessary. The install process itself is speedy and smooth, with the offer to install Google’s Chrome browser and some advertising for the company’s upcoming Android offering the only reminders that this is a free solution.

The interface itself remains one of the most satisfyingly clear and usable out there, packaging an impressive range of functions into a slick and at times quite beautiful GUI. Configuration options are provided in unimpeachable depth and for the most part maintain splendid levels of clarity and simplicity, although a few spots do seem to rely on existing knowledge of the product’s few quirky concepts. Logging is clear and simple to use, although it seems to be switched off by default in most areas. In the past we have seen some wobbliness in the sandboxing components, but this was not evident this month – hopefully due to fixes rather than simply good luck.

Scanning speeds were pretty decent and our overhead measures fairly light, although the product only does limited on-read scanning by default; with the settings turned up things did slow down a little, but not terribly. Resource use was around average, with impact on our set of tasks perhaps slightly higher than some.

Detection rates were very decent, with some superb scores in the reactive parts of the RAP test but a fairly sharp drop into the proactive week. The Response sets also showed high numbers in the earlier days and a bit of a downturn in the last day or two. The core sets were properly dealt with though, and Avast earns another VB100 award fairly easily; that gives the vendor five passes and just one fail in the last six tests; 11 passes out of 12 entries in the last two years. With no issues to report, stability was rated a firm ‘solid’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 2012.0.2180

Update versions: 2437/5079, 2437/5121, 2012.0.2195/2437/5135, 2012.0.2197/2437/5142

In contrast to its compatriot Avast, AVG tends to submit its paid-for business suites rather than its wildly popular free offerings. This month is no different, with a fairly large 148MB installer provided, including required updates for the RAP tests. Set-up was very speedy and simple, with an ‘Express’ install option meaning that only a couple of clicks were needed. Updates were also very rapid, the whole process taking less than two minutes to complete with no reboots required.

The interface is cleaner and leaner these days, providing a decent if not quite exhaustive set of controls, and logging was fairly clear and usable too. Responsiveness was generally good, but on one of the installs things seemed to get a little snarled up – scanning refused to start, with no error message or explanation for its silence, and a reboot was needed to get things moving again. After that all seemed fine though, and there was no sign that the issue had affected protection.

Speeds were pretty good, with serious improvements in the warm runs. Overheads were extremely light, although resource use was a little high and our set of tasks took a fair time to get through. Detection was good though, with pretty decent scores across the board, and with no issues in the core sets a VB100 award is duly earned. AVG now has five passes and one fail in the last six tests; ten passes and two fails in the last two years. With a single, non-reproducible issue noted, the product earns a ‘stable’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 12.0.0.1125

Update versions: 7.11.33.62, 7.11.35.136, 7.11.36.94, 7.11.36.252

Another seriously popular free product, Avira’s basic offering was provided as a mid-range 96MB installer, with an additional 68MB of updates for offline use. Installation was via another ‘Express’ system involving little more than a single click, plus acceptance of a EULA, and completed very rapidly. Updates were mostly very speedy, generally taking less than 30 seconds, but on one of the runs for some reason this was dragged out to over 10 minutes. This brought the total install average to just over four minutes.

The interface is a little crisper and friendlier these days, and provides a good level of control, although this is hampered in places, for example by the absence of an option to scan a specific area from the main GUI (a context-menu scan option is of course provided). Logging is in plain text and laid out in a human-friendly format. Things seemed to work OK most of the time, but on one occasion the scanner component crashed out with a rather ungrammatical error message, claiming that ‘the exception unknown software exception occurred’. Restarting the scan was not a problem, and we didn’t manage to get this behaviour to recur. As it happened during an on-demand scan of a large malware set, it did not dent the product’s stability rating too heavily.

Scanning speeds were very good, as ever, and lag times fairly light, with RAM use low and CPU use a little below average, as was impact on our set of tasks. Detection rates were very solid indeed, with high scores everywhere, and the core sets caused no problems either, earning Avira a VB100 award for its free solution. Participating only in desktop comparatives, it now has three passes from three attempts in the last six tests; six from six in the last two years. With just a single minor crash noted, the product is rated as ‘stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 12.0.0.1466

Update versions: 7.11.33.62, 7.11.35.136, 7.11.36.94, 7.11.36.252

Very similar in most respects to the free edition, Avira’s Pro version has a few little extras which are usually most noticeable to us in the additional configuration available in some areas. The set-up was similarly simple and speedy, with no issues encountered zipping through the updates this time and an average install time of less than two minutes.

With a few additional areas that are not found in the free edition, such as a more complete scanner interface, the controls are highly rated for thoroughness, and logging is clear, lucid and reliable. Once again we noted an issue in the RAP tests, with the scanner crashing out unexpectedly, but again the task was re-run with no repeat of the problem.

Scanning speeds were good and overheads not bad, with low memory consumption, reasonable use of CPU cycles and our set of tasks completing in good time. Detection scores were excellent, with the drop-off in the proactive week of the RAP sets especially slight, and with no issues in the WildList or clean sets a second VB100 is easily earned by Avira this month. That keeps the Pro offering’s flawless run of success going: 12 passes in the last 12 tests. Stability was hit by a single scanner crash, which again occurred only while scanning a large set of samples; the rating is thus ‘stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 15.0.31.1283

Update versions: 7.42671/7313010, 7.42867/7363718, 7.42915/7359988, 7.42961/7364417

Having become the most popular engine for inclusion in other products, Bitdefender’s solution has already appeared this month with only the most minor of alterations. A wider range of implementations is yet to come, and things already look promising. The initial installer provided here included all updates required for the RAP test, weighing in at a fairly hefty 212MB; on running it, it immediately offered to download a fresh version – when this was required, it rarely took more than 30 seconds, presumably only fetching a fairly recent version of the main installer, as further updates would still be required later on. The main set-up involves only a couple of clicks and completes in good time, with definition updates adding no more than half a minute or so in most cases.

The recently refreshed interface is a little dark and brooding, but fairly attractive and easy to navigate. Options are provided in great depth, with good clarity; logs are stored in an XML format which is fairly simple to read with the naked eye, and seem quite reliable since some issues with excessively large logs were resolved several tests ago.

Scanning speeds were reasonable from the off, with huge improvements in the warm runs. On a couple of occasions these scan jobs (of clean files only) did seem to freeze up after a few seconds and had to be restarted, but this was not a reliably reproducible problem. File access times were perhaps a little sluggish, especially with settings turned up to the maximum thoroughness, but not too intrusive. Resource use was barely noticeable, and our set of tasks didn’t take too much longer than normal to complete.

Detection rates were extremely impressive, with excellent figures in both the RAP and Response sets. The core sets proved no problem either, and Bitdefender easily earns a VB100 award; it too joins the elite club of vendors celebrating 12 successive VB100 passes. With only a single very minor issue noted, a ‘stable’ rating is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 12.0.224

Update versions: 12.0.0.29/12.0.0.27/12.0.0.58/12.0.0.50

As one of the longest-serving Bitdefender-based products in our tests, BullGuard was also expected to do well. The initial installer was mid-sized at 147MB and installed very speedily with only a few clicks, although reboots were required on some updates. Updating took between ten seconds and a couple of minutes, much of which was ‘implementing’ rather than downloading in the longer runs.

The interface is a little quirky but reasonably simple to operate, providing just a little more than the bare minimum set of controls. Logs can be saved in a ‘.bglog’ XML format, which is clearly displayed in the product’s own viewer tool but less friendly for separate processing.

Speeds were splendid, with more great improvement in the warm runs. Overheads were fairly light, RAM use was low but CPU use rather high, while our set of tasks got through in average time. Detection was again really quite excellent, with very little overlooked in any of the sets. The core sets were perfectly handled and BullGuard also earns a VB100 award, giving it five passes from five entries in the last six tests; nine from nine in the last two years. No issues were noted, earning it a ‘solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 7.4.68

Update versions: 5.5.1.3/15.0.62.1, 5.5.2.10/15.0.91.0, 5.5.2.11/15.0.102.0, 5.5.2.13/15.0.111.0

Another product based on the engine formerly known as VirusBuster, Central Command’s Vexira is a regular in our tests and usually causes few major problems. The set-up process follows the usual lines, with the option to join a feedback scheme rather deviously hidden on the EULA acceptance page; it completed in around two minutes on average. Updating was less speedy – on several occasions initial attempts failed with little or no information, while trying to re-run the process seemed to do nothing. Leaving it alone to do its thing seemed the best if not only way to achieve a proper update, and getting this to occur took anywhere from five to 90 minutes, for no discernible reason – our average install time is thus a rather rough estimate of the average user’s chances.

The interface is a little clunky and old-fashioned, but reasonably usable with a little practice, and provides a decent level of configuration. Logging is capped for size but adjustable within limits, and produces reliable and pleasantly readable text logs. Scanning was not the fastest, and overheads were a little heavy in some areas, but resource use was low and the impact on our set of tasks fairly light.

Detection rates were a little mediocre in most areas, but the WildList was handled well. However, with all looking good, a couple of false positives turned up in the clean sets, including the IBM item noted earlier, thus denying Vexira a VB100 award on this occasion. The product now has four passes and two fails from the last six tests; eight passes and two fails in the last two years. With the updating problems the only issue noted, but a fairly serious one, stability is rated only ‘fair’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 2

Main version: 1635

Update versions: 1637

A newcomer of sorts, CMC makes its first appearance in a set of results here, but the vendor has submitted products several times before. The fact that previous attempts to coax the product through the testing process have invariably failed did not bode well. With problems a little more manageable this month, we bravely soldiered through to gather a reasonably complete set of figures. The installer provided measured 67MB, and initial set-up seemed fairly smooth, with the standard set of steps to click through, completing in around a minute with no need to reboot.

The interface is fairly clear and simple to navigate, providing a basic set of controls, and while not as slick and glossy as some, it looks businesslike and professional. Problems hit us immediately though, with updates routinely seizing up. The update process fetches a fair number of distinct items, and on some occasions the number remaining would go down with each attempt, hinting that at least some progress was being made, while on others restarting the job set things back to the very beginning. On occasion a message would warn that the ‘CMCcore’ had stopped working, and nothing could be done to get it back online; in these cases (around one in three installs) we had no option but to wipe the machine and start again from scratch.

We generally managed to get a functioning and apparently at least mostly updated product to work with though, and plodded on through the tests. Speed tests were reasonably problem-free, with some fairly sluggish times, especially over the set of executables and other binaries; it was difficult to tell if archives were being properly examined, as there seemed to be no detection of the EICAR test file, rendering our archive depth test useless. Although convention suggests that failing to spot this file means the product is not set up properly, we did manage to get some detection of other items, both on access and on demand, so hopefully we had done nothing too wrong. On-access figures suggest some smart caching of previously scanned files, taking overheads from a little above average to quite decent in the warm runs, while resource use shows fairly average figures for RAM and CPU. Impact on our set of tasks was very high indeed, with a long time taken to get through the set of jobs.

On-demand tests were rather trying, with scans frequently crashing out with little data to report, but by carefully splitting the test sets into small chunks and running many small scans, we managed to get as close to a full set of figures as could be hoped for in the time available. Looking through the logs, it was clear that a large number of items were marked as variations of ‘Heur:UNPACKED’ followed by a common packer type such as UPX. While we would normally class such alerts as suspicious only (rather than full detections), given the large numbers of them evident in the WildList sets they were initially counted as full detections, but even with this concession, both the standard and Extended WildLists were not very well covered, with large numbers of misses in each. Ignoring the packed alerts reduced the figures by around a further 10%, but to be generous we allowed the alerts to stand.

We were also generous in the other direction in the clean sets, separating the alerts and counting the packer warnings as suspicious only, but even so false positives were rife. Even in our speed sets, specially compiled to minimize the chances of false alarms, a number of items were flagged, and when scanning the system’s C: partition we even noted that components of the product itself were flagged as packed with UPX and thus potentially dangerous. It would seem fairly basic logic that if one is going to warn about packers as a suspicious thing for a legitimate developer to be using, one should avoid using such packers oneself.
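The alert handling described above can be sketched in a few lines of Python. This is purely an illustration and not the lab's actual tooling: the log format (one tab-separated `path` and `alert` pair per line), the function names, the sample entries and the `.UPX` suffix are all hypothetical; only the ‘Heur:UNPACKED’ prefix comes from the logs as described. The same log is tallied twice, once treating packer warnings as full detections (as was done for the WildList sets) and once treating them as suspicious only (as was done for the clean sets).

```python
from collections import Counter

# Prefix seen in the product's logs, per the text; everything after it
# (e.g. ".UPX") is an assumed format used only for this illustration.
PACKER_PREFIX = "Heur:UNPACKED"


def classify(alert, packer_counts_as_detection):
    """Return 'detection', 'suspicious' or 'clean' for one alert string."""
    if not alert:
        return "clean"
    if alert.startswith(PACKER_PREFIX):
        return "detection" if packer_counts_as_detection else "suspicious"
    return "detection"


def tally(log_lines, packer_counts_as_detection):
    """Count outcomes over hypothetical 'path<TAB>alert' log lines."""
    counts = Counter()
    for line in log_lines:
        _path, _, alert = line.partition("\t")
        counts[classify(alert.strip(), packer_counts_as_detection)] += 1
    return counts


# Hypothetical log entries, invented for the example.
sample_log = [
    "a.exe\tHeur:UNPACKED.UPX",
    "b.exe\tTrojan.Example",
    "c.exe\t",
    "d.exe\tHeur:UNPACKED.UPX",
]

# Malware sets: packer warnings allowed to stand as full detections.
malware_view = tally(sample_log, packer_counts_as_detection=True)
# Clean sets: packer warnings counted as suspicious only.
clean_view = tally(sample_log, packer_counts_as_detection=False)
```

Keeping the policy as a single flag makes it easy to see how much of a score depends on the packer warnings alone, which is the comparison made in the text when those alerts were ignored.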

So, there is no VB100 award for CMC on its first full appearance in our tests, and it looks like quite some work is required for it to reach the required standard. With problems rife, the product stands very firmly in the ‘flaky’ category.

ItW Std: 79.25%

ItW Std (o/a): 81.49%

ItW Extd: 96.05%

ItW Extd (o/a): 68.03%

False positives: 448

Main version: 5.1.16

Update versions: 5.3.14/201206201558, 201207111323, 201207171158, 201207231225

Our new table shows Commtouch as incorporating the Frisk engine, but in another acquisition that took place during the testing period it seems the roles may have to be reversed. Frisk now forms part of Commtouch and so perhaps in future Frisk will be described as using the Commtouch engine in its products (which seem set to continue as a separate line). Commtouch itself has had a rather rocky time in our tests of late.

The product was provided as a very compact 12.6MB installer, with an update package of 28MB for use in the RAP tests. Installing is fairly straightforward, involving only a couple of clicks and a few minutes’ wait, while updates were very rapid indeed, taking no more than 20 seconds. No reboots were required.

The product interface is fairly rudimentary, but provides a basic set of controls which were mostly fairly responsive. Logs can be exported to readable plain text, which took quite some time when large amounts of data were being handled but never failed to complete. On one occasion the interface froze up on the completion of a scan, but forcing it to close cleared things up without difficulties.

Scanning speeds were not bad but lag times were very high, and while RAM use was low, CPU use was very heavy indeed and our set of activities took an extremely long time to get through. Detection rates were not very good at all in the RAP sets but much better in the Response sets, implying some effective use of cloud-based technology that is not available when offline.

The WildList and clean sets were handled well, and Commtouch can celebrate a VB100 award this month, putting it on two passes and three fails in the last six tests; five passes and five fails in the last two years, with false positives the usual cause of trouble. With only a single, very minor issue noted, while scanning large infected sets, a ‘stable’ rating is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 5.9.221665.2197

Update versions: 12889, 12973, 13031

Comodo has been quiet for a while as far as our test bench is concerned, the vendor’s last entry having been late last year. Although the company has managed a single pass with its suite product, the standalone AV solution has been unlucky not to have made the grade yet. With the new fee structure in place, Comodo chose to enter only a single product (the standalone version), but the suite solution runs from the same installer, and provides the same malware detection alongside additional functions such as firewalling.

Set-up from the 84MB executable provided runs through a few steps, including the option to set the system’s DNS to use Comodo’s servers. Online updating pulled down around 120MB of additional data on average, and took quite a while to do so – around five minutes in most runs. A reboot was required after updating, and in some cases this was rather hard to spot – it was mentioned in passing in the product’s interface but not pointed out more forcefully. The GUI itself is colourful and attractive, appearing to provide a wealth of functions and options without becoming cluttered, and it seemed responsive throughout testing. Logging can be exported to a clear plain text format.

The company was unable to provide the product with updates from the deadline date, so for the RAP test an update was required on the day itself. Unfortunately, due to an administrative error, the image taken at this time was destroyed, so sadly no RAP figures can be provided. Other tests ran smoothly though, with fairly decent scanning speeds and rather heavy overheads; resource use was light however, and our set of activities didn’t take too long.

On-access detection rates took rather a long time to gather, each run lasting more than an hour where most other products got through the same job in less than a minute. On-demand scanning was much speedier. Response scores were not far behind the leaders, but in the WildList sets a few items were not detected, thus denying Comodo a VB100 award this time around. This product has no passes so far, from three entries in the last six tests and five entries in the last two years. There were no issues with stability, earning the product a ‘solid’ rating.

ItW Std: 99.09%

ItW Std (o/a): 99.09%

ItW Extd: 98.40%

ItW Extd (o/a): 98.40%

False positives: 0

Main version: 1.005.00006

Update versions: 22318, 22537, 22593, 22649

Another vendor that requires us to install and update the product on the deadline day for the RAP tests, and another that would normally have submitted a pair of products, until the recent change in the number of products that can be submitted free of charge. Coranti opted to submit its mainline product rather than the ‘Cora’ offshoot, and provided it as a svelte 53MB installer. This ran through after just a few clicks in less than half a minute, updates taking a little longer as over 230MB of data had to be fetched to feed the multiple engines included. This generally took no more than a few minutes though, and things were soon up and running.

The interface is fairly simple – wordy, but not too hard to find one’s way around; configuration is decent but not exhaustive. One extremely minor issue was noted, in that while the interface insists the default setting for archive scanning is two levels of nesting, this was clearly not the case, with some types only opened to a single layer and others to at least ten. Scanning was initially rather sluggish, but picked up a lot in the warm runs, while on-access lag times were not too heavy. A lot of memory was used, but CPU usage was on the low side and our set of tasks was completed in good order.

As expected from the multi-engine approach, detection rates were very good indeed, with only the last day of the Response sets dropping below 99% (and even then only very slightly), and the RAP sets covered excellently, scoring over 90% even in the proactive week. The WildList caused no problems at all, and with no false alarms either Coranti picks up another VB100 award with some ease. The vendor now stands at three passes from three entries in the last six tests for this product; six passes from eight entries in the last two years. With a single very minor defect noted, a ‘stable’ rating is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 3735.603.1669

Update versions: 20/06/2012 (9119179), 10/07/2012 (9225417), 18/07/2012 (9237307), 23/07/2012 (9246767)

Incorporating what should now be referred to as exclusively Agnitum technology, Defenx has become a fixture on our test bench in the last few years, and has built up an impressive record of success. The current version came as a 111MB installer, which ran through the usual stages, including the hidden option to join a feedback programme tucked away on the EULA page. A number of tasks are then performed, including getting the firewall components settled in before running an initial update. This generally took around three minutes, bringing the total install time to just under five minutes in all. The product interface is similar to that of Agnitum’s own, with a fairly clear and simple layout, configuration for the anti-malware component more than basic but less than thorough. Logging is capped by default but this can be extended, and information is stored in a clear, readable text format.

The speed tests passed without incident, showing some slowish times initially, but much better in the warm runs, while on-access overheads were very low indeed. RAM use was fairly low, CPU use decidedly high, and our set of activities ran through rather slowly too. The on-access measures over infected sets once again brought the machine to something of a standstill, with the opening of text files in Notepad and many other basic functions blocked and everything very slow for quite some time afterwards, as the deluge of detections was processed.

Detection rates, when finally worked out, were not terrible, but far from impressive, and although the WildList sets were properly dealt with, in the clean sets, alongside a selection of warnings about suspicious and packed files, there was the same single FP on a file produced by IBM. This denies Defenx a VB100 award for the first time in a while – it stands on four passes and one fail in the last six tests; nine passes and one fail in the last two years with just the Linux tests not entered. The odd behaviour and slowdowns during the on-access measures were fairly intrusive but only occurred under heavy malware bombardment. No other issues were noted, so Defenx merits a ‘fair’ rating for stability.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 1

Main version: 5.0.2, Rule version 1634

Update versions: 1.1.2173, 1.1.2204, 1.1.2215, 1.1.2222

Blink is another product incorporating a third-party engine alongside in-house specialism (in this case the Norman engine with eEye’s vulnerability expertise), and eEye is another company involved in the spate of recent mergers and acquisitions, having been taken over by BeyondTrust at around the time of submissions for this comparative.

The product provided for testing was fairly sizeable at 242MB, and took some time to do its business, starting with a lengthy period of unpacking, preparation and installation of dependencies. After a few steps to click through, the process then ran for a couple of minutes before presenting a brief set-up wizard, finally opening its interface and offering to run an update. This final stage pulled down over 200MB of data on each install and took on average eight minutes to complete, making it one of the slowest this month, but no reboots were required.

The product interface is unflashy but clear and well presented, with a set of controls slightly more detailed than the most basic. Operation seemed smooth with no stability issues, and speed tests proceeded without hindrance. Apart, that is, from the product’s inherent sluggishness – the scanning of binaries and particularly of archives taking an age to complete, even though it seemed only a single layer of nesting was being analysed. Overheads were pretty heavy, but RAM use was low, CPU use not too high, and our set of tasks at least managed to get through in good time.

Detection rates were impressive, continuing a steady improvement observed in recent months, and well up with the leading cluster in the RAP and Response tests. There were no issues in the clean sets, but in the WildList sets a single item was ignored both on demand and on access in all three test runs, thus denying eEye a VB100 award this month despite a generally decent showing. The vendor now has four passes and one fail from the last six tests, with the Linux test missed out; seven passes and two fails from nine attempts in the last two years. With no bugs or problems to report, the product earns a ‘solid’ stability rating.

ItW Std: 99.84%

ItW Std (o/a): 99.84%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 6.6.0.1

Update versions: 5,913,562; 5,952,590; 5,976,533; 6,001,549

There are more changes to report here, with Emsisoft announcing towards the end of testing that it would be changing the core engine in its future products from long-time partner Ikarus to the ever more prevalent Bitdefender; the switch will be accompanied by a major new release of the product. For one last look at it in its old form, we used a 134MB executable downloaded from the company’s website, which included the latest updates available when fetched. Installation involves very few clicks, and zips through in a minute or so. At the end there are a few more steps to click through, including licensing and joining a community feedback scheme. Updates follow, pulling down close to 130MB of additional data but completing in only a couple of minutes.

The product interface is slightly quirky but reasonably clear once you’ve got used to the odd behaviour of its menu panel, and it provides some basic configuration options. Logging is in a clear text format.

Operation was interrupted several times – in fact, just about every time a scan of malicious files was run – with error messages usually warning of a ‘major problem’. In most cases this meant no further scans could be run and protection was disabled. Sometimes, simply rebooting solved the problem (although this on its own was rather frustrating with lots of jobs to do), but in a number of instances the interface still refused to open after reboot and protection could not be restarted – so we had no choice but to wipe the test machine and start again as best we could.

With this extra work delaying things, it was fortunate that scanning speeds were zippy enough to recoup some lost time. Overheads were a little on the heavy side though, with low RAM use but fairly high CPU use and a lowish impact on our set of tasks. Detection rates were excellent in the RAP and Response sets – very close to the top of the field – but in the WildList sets a single item went undetected throughout the testing period, denying Emsisoft a VB100 award this month. The vendor’s history shows one pass and four fails in the last six tests; two passes and eight fails in the last two years. Although the severity of the issues noted is mitigated by their having occurred only during scans of large malware sets, their sheer frequency nevertheless merits a rating of ‘buggy’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 99.93%

ItW Extd (o/a): 99.93%

False positives: 0

Main version: 11.0.1139.1230

Update versions: NA

Another product using a third-party engine, eScan is part of this month’s crowded raft of Bitdefender-based solutions, with some additional technology of its own on top. The installer measured 189MB, and did nothing too surprising during the set-up process, which took about a minute and ended with a reboot. Initial update times ranged from four to eight minutes, depending how far we were from the original submission date.

The interface has curvy corners and cute jumbo icons, but underneath there is a serious degree of fine-tuning available. Logging is clear, readable and reliable. Scanning speeds were very fast indeed, and overheads pretty light, but use of RAM was a little high and CPU use was through the roof; our set of tasks was completed in reasonable time though.

Moving onto the malware tests, initial attempts at on-access testing proved ineffective – nothing seemed to be picked up at all. On rebooting the system, suddenly all was fine again. It appeared that for some reason two reboots were required, one after the initial install and a second after the first update, but this was not made clear by the product and users could easily think they were protected when they were not at all. This occurred on two of the three main test runs.

We also had some problems with the RAP tests taking rather a long time, which we suspected may have been due to failed attempts at cloud look-ups; the job ran through without errors, but took 60 hours (whereas the fastest products – including some using the same base engine – took less than two hours to do the same thing, with almost identical results). It was worth the wait though, as scores were splendid, putting eScan right up in the top corner of our RAP chart with its many relatives in the Bitdefender family. Response scores were splendid too, and with the WildList and clean sets handled well, a VB100 award is earned. That puts eScan on six passes in the last six tests; 11 passes and a single fail in the last two years. The product’s stability was problematic on this occasion though, with some serious problems leaving the system unprotected. As a result, it earns a ‘buggy’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 5.2.9.1

Update versions: 7235, 7284, 7303, 7314

Still maintaining an epic run of success in VB100 testing, ESET’s current product line has grown pretty familiar over the last few years and rarely gives us much trouble. The build submitted this month measured a compact 50MB including all required data for the RAP tests. Installation was fairly simple if a little on the slow side, completing in around four minutes on average, but with very zippy update times adding only around 30 seconds. The interface is very slick and good-looking, and provides a wealth of configuration; logging is clear and reliable, with plenty of ways to export to text files.

Speed measures showed fast scanning speeds and light overheads, with resource use very low – especially CPU use – but our set of tasks took a little longer than average. Detection rates were pretty good across the board, and with no issues in the certification sets ESET comfortably earns another VB100 award. The vendor remains on its extended run of success, with 12 passes in the last two years. A single GUI crash did occur during one of the RAP tests, but nothing was lost and no lasting damage was done; nevertheless, it brought the product’s rating down to just ‘stable’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 2.5.0.18

Update versions: 12.4.26.1/433901.2012061916/7.42659/7309216.20120619, 12.4.26.1/436785.2012071117/7.43864/7363079.20120710, 12.4.26.1/437480.2012071714/7.42897/7363560.20120715, 12.4.26.1/438143.2012072313/7.42918/7360237.20120718

ESTsoft’s product is another based on the Bitdefender engine, and its performances in our tests have been rather unpredictable so far, with some scores closely matching those of related solutions and others differing wildly. This month’s installer measured 142MB, ran through the standard process and took around two minutes to do its actual work. No reboot was required to complete, and updates seemed more stable than we have observed in the past, although their duration was hard to predict, ranging from three to 10 minutes in various runs.

The interface is reasonably clear and usable, providing a limited set of controls; logging is decent, if rather slow to export and to save at the end of a scan. Scanning speeds were impressive though, and overheads fairly light, with low use of resources and a very low impact on our set of tasks.

Detection rates were superb in the RAP sets, but the Response scores were some way lower than expected, hinting that updating had not perhaps been as successful as it appeared. The clean sets were handled well, but in the WildList sets a small number of items were consistently ignored, which we could only ascribe to some additional whitelisting having gone awry. This denies ESTsoft a VB100 award this month, its first fail from four entries, all in the last six tests. A minor issue was noted, in that options to adjust archive scanning appeared non-functional. This was counted as a trivial bug, but was enough to put the product in the ‘stable’ category.

ItW Std: 99.84%

ItW Std (o/a): 99.84%

ItW Extd: 99.85%

ItW Extd (o/a): 99.80%

False positives: 0

Main version: 7.3.4.9985

Update versions: 15.160.27442, 15.188.60694, 15.198.24291, 15.206.2179

Still striving to reach its first VB100 award, Filseclab continues to enter our tests regularly. The product was downloaded from the vendor’s public website, coming as a main installer and an update bundle each measuring just over 130MB. The install process only requires a couple of clicks and completes in under a minute; online updates added another few minutes to the total, and a reboot was required to complete the job.

The interface is unusual but reasonably well presented, providing a little more than the bare minimum of controls, once they have been found amongst the rather wordy layout. Things seemed to run fairly smoothly throughout, with no bugs or issues observed. Speeds were pretty sluggish though, and lag times fairly high too, although our set of tasks completed in reasonable time and resource use was within normal limits.

Detection was pretty reasonable in the RAP and Response sets, although in the WildList sets there were still a few misses, with gradual improvement over the course of the three main test runs. False positives also remain a problem, with the large numbers this month mostly down to separate detection of numerous items in the Cygwin package, included on a Netgear drivers CD. Other packages alerted on included several from Adobe, with Microsoft, Google, IBM, Sun/Oracle and SAP among other providers whose files were labelled as malicious.

So, there can be no question of Filseclab winning a VB100 award this month, leaving the vendor with no passes from three tries in the last six tests, and five fails in the last two years. Stability at least was good, earning the product a ‘solid’ rating.

ItW Std: 95.47%

ItW Std (o/a): 95.47%

ItW Extd: 93.52%

ItW Extd (o/a): 92.32%

False positives: 114

Main version: 4.1.3.149

Update versions: 5.0.21/15.724, 15.809, 15.866, 15.886

Another product which has shown strong improvement in our RAP tests of late, Fortinet returns doubtless hoping for more of the same. The installer is very small at less than 10MB, with an update bundle measuring 143MB provided for offline use. Set-up runs through rapidly with minimal fuss and no need to reboot, and updates are speedy, the whole process taking little more than a couple of minutes.

The interface is plain and businesslike, providing a very thorough set of controls, and logging is clear, reliable and easy to read. Scanning speeds were a little below average, overheads a little heavy on access; RAM use was around average, CPU use very low, but our set of tasks took a long time to complete. Detection rates were very impressive though, continuing the recent upward trend, and with no issues in the certification sets a VB100 award is comfortably earned.

With no participation in our Linux tests, Fortinet now has five passes from five entries in the last six tests; nine passes and a single fail in the last two years. A single issue was noted: one of the Response test scans bailed out with a message that it had been ‘interrupted’. There was no apparent loss of protection though, and the same job completed without any problems on a second attempt, meaning a ‘stable’ rating is merited.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 6.0.9.6

Update versions: 4.6.5/19/06/2012, 23:32; 11/07/2012, 14:11; 16/07/2012, 23:37; 23/07/2012, 13:25

Frisk has been involved in one of the big industry events of recent months with its acquisition by Commtouch. However, it seems the Frisk product lines will continue to operate as usual (for the time being at least), with a major new version due out fairly soon. The current product remains familiar from many years of testing, with the installer a compact 35MB and updates measuring 58MB. Set-up is quick and easy, completing in around a minute with a reboot required; updates add another couple of minutes to the total time taken.

The interface is plain and simple, providing basic controls only, but logging is clear and dependable and operation was generally smooth. After part of the RAP tests the interface refused to run any more scans, the browse-for-folder dialog simply disappearing as soon as it opened, but a reboot resolved this without too much pain. Scanning speeds were decent, overheads perhaps a little above average in some areas (mainly the set of binaries), but our set of tasks ran through rapidly, with low memory consumption and CPU use just a little above average.

Detection rates were not too impressive, but at least fairly consistent, and the core sets were properly dealt with, earning a VB100 award for Frisk; the vendor can boast a clean sheet of six passes in the last six tests; ten passes and two fails in the last two years. With a single GUI issue occurring under heavy pressure, a ‘stable’ rating is also earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 98.85%

False positives: 0

Main version: 9.31 build 118

Update versions: 9.30 build 17464

A brace of products were submitted by F-Secure as usual, adding further to the lengthy list of solutions that include the Bitdefender engine, although in this case a little more in-house work is incorporated than in most. Set-up was fairly straightforward, with options related to management systems indicating that this is F-Secure’s corporate product line. The process took a couple of minutes with no need to reboot; update times were harder to measure, as the process would start and claim it had run, while in fact downloading and installation of new data continued in the background for some time. In some cases we couldn’t be sure the product was fully ready for testing for around 20 minutes.

Speed measures ran smoothly, showing some good times to start with and huge speed-ups in the warm runs; overheads were also light, with RAM and particularly CPU use quite low; our set of activities did take a while to complete, but not excessively so.

Moving onto the malware tests, things got a little more problematic. In the RAP runs, on several occasions logs were truncated, missing vital detail; repeating the runs several times was necessary to ensure we gathered a full set of figures. Even so, with the score for the proactive week lower than expected, it remains possible that some data was lost. Moving onto the Response and certification tests, the first run hit a nasty snag. An initial attempt to run an on-access job over the WildList sets froze up completely around 2% of the way through. After rebooting and retrying, it seemed to run better, but looking at the logs it was clear that all was still not right. Chunks of files were ignored, hinting that detection was shutting down for periods before being re-enabled. The test was re-run multiple times, but comparing results showed identical patterns each time, so it would seem that some samples were tripping the scanner up, causing it to stop working until it could fix itself. On later runs these problems were not apparent, and much work providing debug information to the developers has yet to pin down the cause of the issue.
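The comparison of repeated runs described above, checking whether the same chunks of files were ignored each time, can be sketched as follows. This is only an illustration of the reasoning, not the lab's actual log-analysis tooling; the function names and the representation of each run as a collection of detected sample names are assumptions made for the example.

```python
from typing import Iterable, List, Sequence, Set, Tuple


def missed_ranges(
    ordered_samples: Sequence[str], detected: Set[str]
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, inclusive, for each contiguous
    block of samples that does not appear in `detected`."""
    ranges: List[Tuple[int, int]] = []
    start = None
    for i, sample in enumerate(ordered_samples):
        if sample not in detected:
            if start is None:
                start = i  # a new block of misses begins here
        elif start is not None:
            ranges.append((start, i - 1))
            start = None
    if start is not None:
        ranges.append((start, len(ordered_samples) - 1))
    return ranges


def same_gaps_every_run(
    ordered_samples: Sequence[str], runs: Iterable[Iterable[str]]
) -> bool:
    """True if every run shows the same blocks of missed samples."""
    patterns = [missed_ranges(ordered_samples, set(run)) for run in runs]
    return all(pattern == patterns[0] for pattern in patterns)
```

Identical blocks of misses across runs point towards specific samples tripping the scanner, as concluded above, whereas gaps that move between runs would suggest a timing or load-related fault instead.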

In the end, detection rates were very good, especially in the Response sets, with RAP scores possibly hit by logging issues. The WildLists were well covered in most runs, and in the one instance where things went horribly wrong, only the Extended list was affected; as we currently only require on-demand coverage of this set, which was well provided, F-Secure earns a VB100 award for this product by the very skin of its teeth. The test history for the company’s mainline product now shows three passes and one fail from four entries in the last six tests; eight passes and one fail in the last two years. The problems noted in one of the test runs did not recur in later runs, but were repeatable at the time and resulted in temporary loss of protection; on top of the logging issues, this pushes the product into the ‘buggy’ category.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 95.92%

False positives: 0

Main version: 12.56 build 100

Update versions: 10.00 build 18110

The second product from F-Secure was a little different this month. Due to its install method, and some delay with the developers’ initial decision to submit it, it was not possible to include it in the RAP tests. The package sent to us was a tiny 1MB downloader tool, which when run fetched the main component, installing a ‘launch pad’ utility which would then be used to control other components, including the ‘computer security’ item of which the anti-malware product was a sub-component. Initial attempts to follow this process proved difficult though, as once the launch pad was in place, attempting to install the security part from it repeatedly hit a wall; licence details were requested, but once provided a message abruptly stated that the service was unavailable and that we should try again later. After several attempts with a number of licence keys, with the help of the developers we finally managed to get to the bottom of the problem, which involved certification keys that had not been updated as the installation of Windows was new and had not yet been online very much. As we would recommend people install security solutions as early as possible in their computer’s lifecycle, this seems less than ideal (as indeed does the idea of a product which can only be installed from the web).

Eventually, after several days of trying, we finally managed to get a working product, and from there things moved along much more smoothly. The issues observed with the other F-Secure solution were not encountered, but it is of course possible that this may have something to do with the delay in getting under way; we try to ensure that similar products are run as closely together as possible, but if the issues affecting the other product were caused by some faulty updates or engine problems, it’s possible that this product would also have been affected, had we tested it on the same day (as intended).

As it was, speed tests were zippy, especially in the warm runs, and overheads light, with medium RAM use, low CPU use and a fairly high impact on our set of activities. Detection rates were excellent in the Response sets, with no RAP scores for comparison, and the core sets were well handled, earning another VB100 award for F-Secure, this time a lot more comfortably. We haven’t seen a second product from F-Secure in a while, the company’s submission patterns playing havoc with our system of categorizing products, but with this one in the I.S. line we have only seen this single entry in the last six tests; three passes from three entries in the last two years. The initial install issues were intentional but still a minor annoyance, and with a single instance of inaccurate logging too, the product falls into the ‘stable’ category.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 23.0.3.2

Update versions: NA

Yet another product incorporating Bitdefender’s hugely popular engine, this time with additional contributions from Avast, G Data is one of our most consistently strong performers. The build entered this month measured 235MB including its updates, but installation was speedy and simple with just a handful of clicks, and a reboot was needed at the end. Updates were fairly zippy too, rarely taking more than two minutes to complete.

The interface looks good and provides excellent configuration; logging is also clear and easy to use. Scanning speeds were a little sluggish initially but powered through the warm runs in no time; file access lags were rather high, again improving greatly after an initial familiarization period, and resource use was low, although our set of activities took a very long time to complete.

Detection rates were extremely impressive, close to perfect in some areas – this was one of very few products to break the 90% barrier in the proactive week of the RAP sets. The certification sets were brushed aside effortlessly, comfortably earning the product a VB100 award, and G Data now stands at five passes from five attempts in the last six tests; nine passes and a single fail from ten entries in the last two years. Stability was rated ‘solid’, verging on the ‘indestructible’.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 5.2.5162

Update versions: 12083, 3.9.2540.2/12215, 3.9.2542.2/12260, 3.9.2542.2/12338

GFI has put in some consistently decent performances of late, but we still occasionally see issues handling large sets of malware, caused by an inefficient logging system which keeps all data in memory rather than the more standard approach of using a log file. The current version comes as a 116MB installer with 85MB of data provided for the RAP tests. It was set up with a minimum of interaction, completing in a couple of minutes with no need to reboot. Updates were fairly zippy, the longest taking just over three minutes, and the interface is bright and shiny, providing not much beyond the basic controls required. Logging is rather unfriendly for the human reader, but parsing tools provided by the vendor have proved very helpful.

Scanning speeds were pretty sluggish, overheads perhaps a little on the high side, but resource use was low and our set of tasks completed in good time. Detection rates were mostly excellent, as long as we didn’t try to scan too much at once, in which case scans routinely stopped abruptly, leaving no usable data behind. This would be unlikely to affect the normal user though, and it was not too difficult to gather full data for the RAP and Response tests. The core sets were again fiddly thanks to a lack of usable logging of on-access activities and a rather quirky approach to some samples – to which access is initially allowed, but which are later removed once some background scanning has completed.

The clean sets were handled OK, but on looking over the WildList results we saw that a handful of items in the Extended List had been missed on the third and final run, both on demand and on access. Further investigation and consultation with the developers revealed that this was due to an error with a set of updates – which had been fixed promptly. The timing of our test was a little unfortunate, as running it a few hours earlier or later would probably have avoided the issue. Nevertheless, no VB100 award can be granted this month, despite a clean sheet in two of the three runs. GFI now has three passes and two fails in the last six tests; seven passes and three fails in the last two years. A few scans falling over when overloaded with detections were the only issues noted, earning the product a ‘stable’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 99.90%

ItW Extd (o/a): 99.90%

False positives: 0

Main version: 6.0.0.0

Update versions: NA

Back to another Bitdefender-based product, Hauri’s ViRobot has been around for a long time and has seen something of a resurgence in our tests of late thanks to the vendor’s partnership with external engine developers. The product was submitted as a 182MB installer including required updates, and installation ran through the standard set of steps with no reboot required. Updates were a little baffling at times, with the actual process running for no more than a couple of minutes, but on most occasions no apparent change was made to the information displayed on the product GUI; despite reassuring messages about the update having completed successfully, the version dates on the main interface rarely changed. However, after completing our standard process of attempting updates before and after a reboot, we had no choice but to continue with what we had.

The interface is fairly pleasant and easy to operate, with a decent level of controls, and logging is usable too, with a viewer utility and an easy method of exporting to file. Scanning speeds were slow, but overheads not too high; RAM use was low, but CPU use way up, and our set of activities took a little longer than average to complete.

Detection rates were superb in the RAP sets as expected, but in the Response sets they were rather lower than we might have predicted, hinting that perhaps the update process was not as successful as it claimed. There were no issues in the core sets though, so a VB100 award is earned, putting Hauri on two passes and one fail from three entries in the last six tests; two passes and four fails in the last two years. With updates clearly not fully reliable, and some issues noted starting up the on-access component, the product falls into the ‘buggy’ category this month.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 2.0.125

Update versions: 1.1.118/81544, 2.0.134/1.1.122/81704, 2.0.134/1.1.122/81777, 2.0.134/1.1.122/81825

Ikarus tends to have rather uneven luck in our comparatives, and another appearance from its engine in this test hints that a recent spell of good fortune may have come to an end. The product submitted came as an ISO image of an install CD with many additional components, the whole thing measuring 204MB, while updates came as a separate bundle of 82MB. Set-up is simple and direct, with the standard path followed, although options to join a feedback scheme appeared to be hidden, greyed-out by default, alongside the normal update settings. The product interface can be a little slow to open, especially on first run, but updates were mostly fairly zippy, taking a couple of minutes to complete. On one occasion an update failed, with an error message displayed in German. However, the GUI informed us that the last update had been a minute or so previously – which one might be forgiven for thinking meant last successful update, rather than merely the last update attempt. Rebooting and retrying resolved the issue easily though.

The GUI is pretty perfunctory, providing the bare minimum of controls, but it seems to have become much more stable of late, running well for most of the tests. After some of the speed tests it did get a little tangled up in itself though, refusing to start any more scans, and once again a reboot was needed to return things to normal. Scanning speeds were pretty slow for the most part, a little better over our sets of media and miscellaneous files than over executables and archives. On-access lag times were very heavy indeed. RAM use was low, but CPU use rather high, and our set of tasks ran through very quickly. Logging was clear and reliable throughout.

Detection rates were excellent in the RAP and Response sets, with a handful of items in the clean sets marked as suspect thanks to embedded scripts. The WildList sets were mostly well dealt with, but a couple of items in the Extended set were ignored, meaning no VB100 award for Ikarus, as expected. The vendor now has two passes and two fails in the last six tests; three passes and five fails in the last two years. A few issues with the updater and scanner mean only a ‘fair’ rating for stability.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 99.93%

ItW Extd (o/a): 99.93%

False positives: 0

Main version: 4.2.11.0

Update versions: NA

In something of a surprise move, Iolo’s developers informed us this month that they would like their product to be omitted from the RAP test. We had made participation in the RAP tests optional so as not to unfairly represent products that require access to cloud data to function properly; this was the first time a product had been withdrawn from the test for other reasons (the developers cited poor performances in previous tests), and it has made us question whether such a move should be permitted. It is likely that the rules will be tightened when we complete a redraft of the official procedures documents, currently under way.

As far as testing goes it made things much easier of course, as Iolo’s product has never been the easiest to work with. The installer submitted was a tiny 500KB downloader, which on first operation fetched the main installer, measuring 55MB. An option is usefully provided to hang on to a copy of this file in case it is needed for later reinstallations. The set-up process then proceeds, following standard lines. The process takes not much more than a minute in all, including the time taken to download the initial file, and completes with a reboot required. On restarting the machine for the first time after the install, a noticeable pause was observed before the system came fully to life – perhaps 30 seconds longer than the normal start-up time – but this did not happen on subsequent restarts. Updates were very speedy though, taking no more than 20 seconds to complete the full process even when using a fairly old copy of the main installer.

The interface is clean and professional-looking, providing a little more than the basic set of controls. Logging is clearly displayed in the product GUI, but handling large amounts of data is something of a nightmare; with no option to export to text, data has to be decrypted from a bizarre and esoteric database format, which despite repeated pleas to the developers we are still forced to rip apart as best we can.

Operation of the product itself is less troublesome though, and speed tests proceeded without problems. Scan times were not bad, overheads rather heavy, and although RAM use was low, CPU use was pretty high and our set of tasks took a very long time indeed to get through. Detection, as far as we could judge from our rather brutal unpicking of log data, was rather uninspiring in the Response sets, with no RAP scores for comparison. Although the WildList sets were properly dealt with on access, the on-demand jobs required data from the logs, which appeared to show a number of items not covered, despite careful picking through them for any trace of the missing data.

We are thus forced to deny Iolo a VB100 award once again, the vendor’s record now showing four fails from four attempts in the last six tests; two passes and five fails in the last two years, although it seems probable that some of these are entirely due to the lack of usable log data to process; we repeat our request to the developers to provide some more reliable way of accessing the information their product stores. Stability was good throughout though, meriting a ‘solid’ rating.

ItW Std: 99.20%

ItW Std (o/a): 100.00%

ItW Extd: 98.40%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 12.1.0119

Update versions: 9.144.7068, 12.1.0124/9.145.7253, 9.145.7298, 9.145.7329

Coming into this test we were told that K7’s product had had a bit of a refresh. The 84MB installer ran very simply with just a single click required, and completed very speedily, which was all the more impressive given that initial updates were included in the process. No reboot was required to get at the new GUI, which certainly looks very different. Gone is the wordy layout and gaudy colour scheme of the previous incarnation, replaced with a much simpler and clearer layout, featuring a brushed-metal-effect colour scheme and military fonts, giving it a robust and solid feel. Configuration is fairly thorough and easy to navigate, and logging is clear and reliable.

Scanning speeds were reasonable if not super fast, file access lags fairly light, with average resource use and a good time in our set of tasks. Detection was pretty good too – perhaps not quite up with the very best this month, but not far behind. The core sets presented no difficulties, comfortably earning the product a VB100 award and continuing a good run of success which, thanks to a sporadic pattern of entries, amounts to only three passes in the last six tests, from three attempts; five passes from five entries in the last two years. Stability was not a problem, earning a ‘solid’ rating.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 8.1.0.831

Update versions: NA

A pair of products came in from Kaspersky as usual, with both enterprise and consumer product lines represented. First up is the business product, which was provided as a 318MB package with updates for the RAP tests rolled in. The set-up process runs through a number of steps but doesn’t take too long, completing in not much more than a minute. The initial install did not require a reboot, but some later updates did. In some cases this was not made very clear at all, with a small message in the product’s logging interface informing the user that the machine must be restarted for the product to function fully.

The GUI itself is sharp and stylish, a little quirky but reasonably simple to operate and providing an impeccable range of controls. Logging is also comprehensive and lucid with plenty of options to export to file. Scanning speeds were mostly a little slow to start with, but much faster in the warm runs. In the miscellaneous set, however, we once again saw scans lasting an age, in some cases appearing to stop altogether. This was finally traced to a single rather large archive dropped as a temporary file by some installer, which was not being dealt with very well. On access it appears to have caused no problems though, with incredibly low overheads across the board. RAM use was average but CPU use a little high, with a fairly notable impact on our set of tasks too.

Detection rates were very solid though, a little behind the leading pack in the proactive week of the RAP sets but pretty impressive everywhere else, and with no issues in the core sets a VB100 award is well earned, putting Kaspersky’s business line on four passes and one fail in the last six tests; eight passes and three fails in the last two years with a single test skipped. With the only issue noted being the poor handling of a deeply nested archive, a ‘stable’ rating is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Main version: 13.0.0.3370

Update versions: NA

The consumer offering from Kaspersky is a little glitzier than its business counterpart but is usually found to be fairly similar under the covers. This month it was provided as a set of install files plus update data, the whole thing totalling a whopping 764MB but including a lot of additional data for the updating of other products. Set-up was very simple with an express install method requiring only a couple of clicks, completing in around two minutes with no reboot needed; updates were a little speedier here, averaging around four minutes.

The interface is shiny and sparkly but does not skimp on the fine-tuning controls, providing ample configuration. Logging is again comprehensive, clear and easy to export. Scanning speeds were rather sluggish, with only some runs showing improvements in the warm scans, and again the set of ‘other file types’ took a long time to get through. On-access speed tests hit a problem at some point, the job freezing up part way through and still not having completed after being left alone for a full weekend. It seemed fine when we re-ran it though, although overheads were rather higher than expected. RAM use was a low average, CPU a little high, and our set of tasks got through in good order.