2012-09-21

Abstract

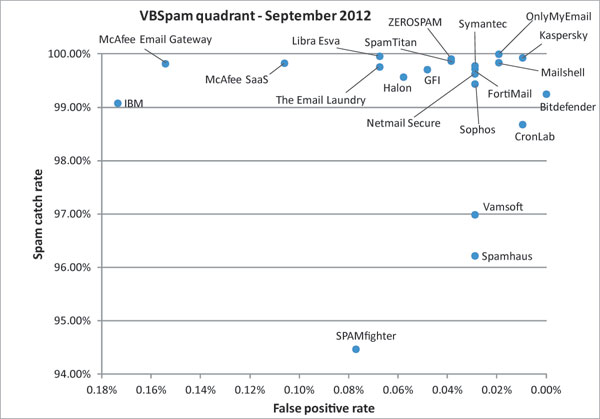

In this month's VBSpam tests, catch rates improved a little across the board, but products had problems with the introduction of more Asian-language spam. Martijn Grooten has the details.

Copyright © 2012 Virus Bulletin

One of the properties of spam is that it is sent indiscriminately. As a result, English-language Viagra spam – the most archetypical of all spam, whose subject is aimed heavily towards men – is sent to many young women in non-English-speaking parts of the world.

It’s not that spammers don’t care about who receives their messages. They might not care about the ethics of sending such messages, but their resources, though huge, are ultimately limited (especially if you consider ‘success rates’ in the order of one-thousandth of a per cent). If they could exclude large groups of non-potential ‘customers’, they would certainly do so. In fact, in some cases, they already do so by sending certain campaigns only to users on specific top-level domains.

This doesn’t mean that two email addresses get similar spam, even if they use the same top-level domain. For instance, on my work email address I get a disproportionate amount of spam in Farsi, whereas my personal email account (another .com address) is popular with Chinese spammers. I speak neither language, nor do I have regular correspondence with people in Iran or China.

This is even true at an organizational level: there are significant qualitative and quantitative differences between the spam received by different organizations, just as there are huge differences between the legitimate emails (ham) received by different organizations.

In the VBSpam tests, we attempt to use corpora of emails that are representative of the ham and spam sent globally. And while these corpora may still have a slight bias towards English-language emails, we spend a lot of time and effort making sure the emails have global coverage.

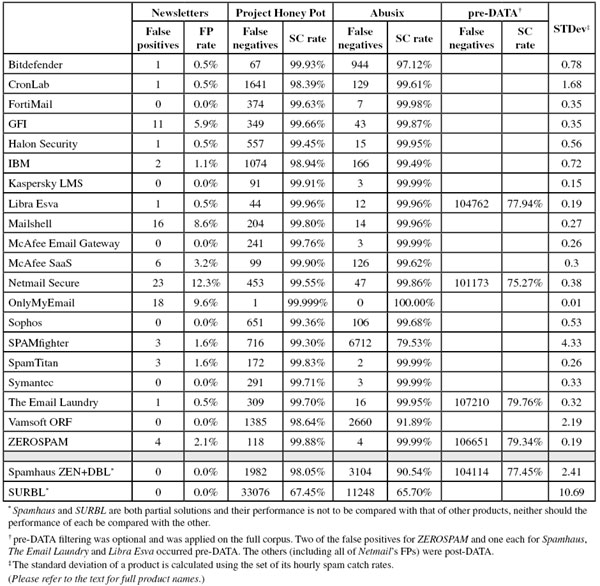

This test saw the re-introduction of the Spamfeed.me feed from Abusix, which was absent from the previous test. This feed now contains a lot of spam in Asian languages, particularly Japanese. For some participants, these emails were a real struggle. And while the vendors that found filtering such emails difficult may claim that, for many of their customers, spam in these languages is not a problem, I speak from personal experience when I say that it could be – even for someone who doesn’t speak a word of any non-European language.

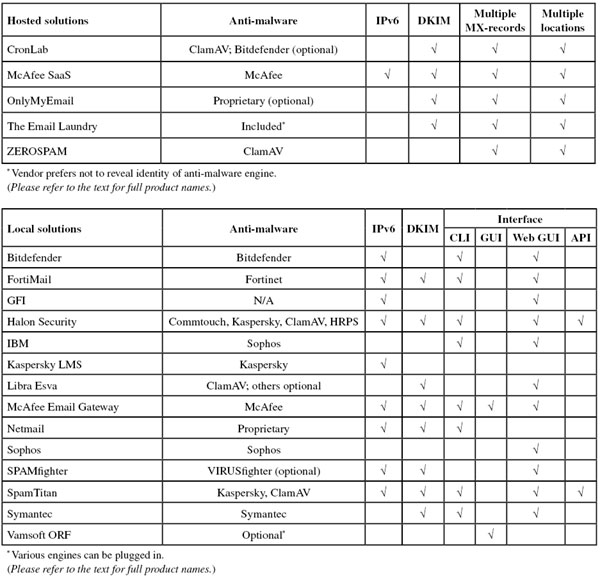

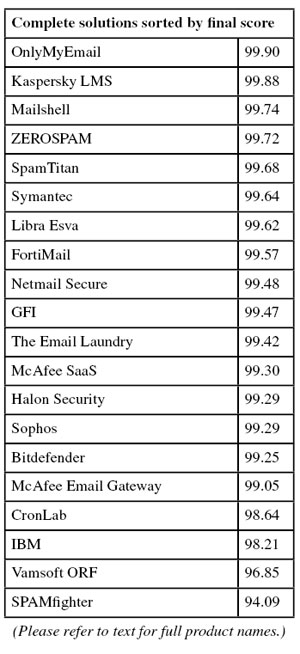

This month, 20 complete anti-spam solutions participated, as did two partial solutions (DNS blacklists). Eighteen full solutions won a VBSpam award, but none reached the required standard to earn a VBSpam+ award.

The VBSpam test methodology can be found at http://www.virusbtn.com/vbspam/methodology/. As usual, email was sent to the products in parallel and in real time, and products were given the option to block email pre-DATA. Five products chose to make use of this option.

As in previous tests, the products that needed to be installed on a server were installed on a Dell PowerEdge R200, with a 3.0GHz dual core processor and 4GB of RAM. The Linux products ran on SuSE Linux Enterprise Server 11 (Bitdefender) or Ubuntu 11 (Kaspersky); the Windows Server products ran on either the 2003 or the 2008 version, depending on which was recommended by the vendor.

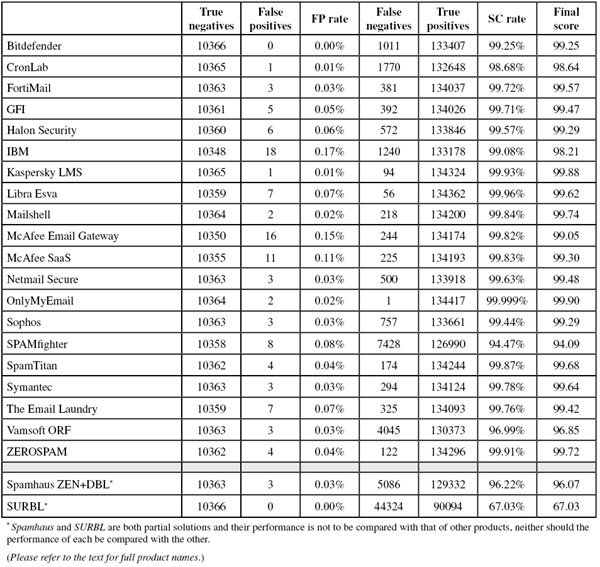

To compare the products, we calculate a ‘final score’, which is defined as the spam catch (SC) rate minus five times the false positive (FP) rate. Products earn VBSpam certification if this value is at least 97:

SC − (5 × FP) ≥ 97

Those products that combine a spam catch rate of 99.5% or higher with no false positives earn a VBSpam+ award.
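The two award criteria above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the rates are assumed to be given in per cent. Recomputing a final score from the rounded rates listed per product may differ from the listed score by a hundredth or two, presumably because the listed scores were derived from unrounded rates.

```python
def final_score(sc_rate: float, fp_rate: float) -> float:
    """Spam catch rate minus five times the false positive rate (both in %)."""
    return sc_rate - 5 * fp_rate


def award(sc_rate: float, fp_rate: float) -> str:
    """Return the award implied by the thresholds described in the text."""
    # VBSpam+: catch rate of 99.5% or higher combined with no false positives.
    if sc_rate >= 99.5 and fp_rate == 0:
        return "VBSpam+"
    # VBSpam: final score of at least 97.
    if final_score(sc_rate, fp_rate) >= 97:
        return "VBSpam"
    return "none"


# Rounded rates taken from two of the per-product listings in this report.
print(award(99.25, 0.00))  # zero false positives, but catch rate below 99.5%
print(award(94.47, 0.08))  # final score falls below the threshold of 97
```

Because a false positive is weighted five times as heavily as a missed spam message, a product with a very high catch rate can still drop down the rankings after a handful of blocked legitimate emails.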

The test ran for 16 consecutive days, from 12am GMT on Saturday 18 August 2012 until 12am GMT on Monday 3 September 2012. On the afternoon of 24 August, a hardware failure occurred. Although it did not technically affect the test set-up, we chose to err on the side of caution and suspended the test for a few hours while fixing the issue.

The corpus contained 144,971 emails, 134,418 of which were spam. Of these, 101,621 were provided by Project Honey Pot, and 32,797 were provided by Spamfeed.me from Abusix. They were all relayed in real time, as were the 10,366 legitimate emails (‘ham’) and the remaining 187 emails, which were all legitimate newsletters.

Figure 1 shows the spam catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour.
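The hourly averaging described above amounts to a simple trimmed mean. The sketch below assumes that exactly one highest and one lowest value are dropped per hour; the function name and the sample figures are hypothetical and are not taken from the test data.

```python
def trimmed_hourly_average(rates: list[float]) -> float:
    """Average the catch rates for one hour, ignoring the highest and lowest."""
    if len(rates) < 3:
        raise ValueError("need at least three products to trim both extremes")
    # Sort, then drop the first (lowest) and last (highest) value.
    kept = sorted(rates)[1:-1]
    return sum(kept) / len(kept)


# Hypothetical catch rates (in %) of four products during a single hour.
hour = [90.0, 99.0, 99.5, 100.0]
print(trimmed_hourly_average(hour))  # the outlier of 90.0 no longer drags the average down
```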

In previous reports, we noticed a drop in the products’ spam catch rates and we were thus keen to see if this trend had continued. While it may be tempting to conclude that it had – as the average complete solution had a 0.16% lower catch rate this time than in the July test – this is skewed by some products having significant difficulty with the Abusix feed. Comparing the performance solely on the Project Honey Pot feed (which was all that was used in July) we noticed that, on average, the products’ catch rates increased by 0.19%.
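To see how much a weak result on one feed pulls down the overall figure, the overall catch rate can be approximated as an average of the two per-feed rates, weighted by the size of each feed. The sketch below uses the feed sizes given earlier in this report; the function name is hypothetical, and because the inputs are rounded per-feed rates, the result only approximates the listed overall rate.

```python
# Feed sizes as stated in the corpus description.
PROJECT_HONEY_POT_SPAM = 101_621
ABUSIX_SPAM = 32_797


def overall_catch_rate(honey_pot_rate: float, abusix_rate: float) -> float:
    """Combine two per-feed catch rates (in %) into one overall rate (in %)."""
    caught = (honey_pot_rate / 100 * PROJECT_HONEY_POT_SPAM
              + abusix_rate / 100 * ABUSIX_SPAM)
    return 100 * caught / (PROJECT_HONEY_POT_SPAM + ABUSIX_SPAM)


# Per-feed rates of one product listed later in this report:
# 99.30% on Project Honey Pot and 79.53% on Abusix.
print(round(overall_catch_rate(99.30, 79.53), 2))
```

Since the Abusix feed makes up roughly a quarter of the spam corpus, a shortfall of 20 percentage points on that feed costs about five points overall.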

The graph in Figure 1 confirms this: at no point during the test did the average catch rate drop below 99%, and most of the time it was above 99.5%. Comparing this with the graph in the previous test, we can see that the overall situation has improved.

Of course, in the cat-and-mouse game between spam filters and spammers it may be that spam filters have worked hard to catch up with the spammers, but it may also be the case that the latter are taking it easy for the moment. Even so, it is worth noting that the catch rates are still well below those we recorded at the beginning of the year.

This test also saw an increase in non-English-language ham and newsletters. Products had some difficulties in both these categories. False positive counts in double digits were not an exception this time, and only one full solution managed to avoid false positives altogether.

In the text that follows, unless otherwise specified, ‘ham’ or ‘legitimate email’ refers to email in the ham corpus – which excludes the newsletters – and a ‘false positive’ is a message in that corpus that has been erroneously marked by a product as spam.

The ‘false negative rate’, mentioned several times in the text that follows, is the complement of the spam catch rate. It can be computed by subtracting the SC rate from 100%.

Because the size of the newsletter corpus is significantly smaller than that of the ham corpus, a missed newsletter will have a much greater effect on the newsletter false positive rate than a missed legitimate email will have on the false positive rate for the ham corpus (e.g. one missed email in the ham corpus results in an FP rate of slightly less than 0.01%, while one missed email in the newsletter corpus results in an FP rate of just over 0.5%).
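The arithmetic behind these rates is straightforward. The following sketch uses the corpus sizes given earlier in this report; the function names are hypothetical.

```python
# Corpus sizes as stated in the corpus description.
HAM_TOTAL = 10_366
NEWSLETTER_TOTAL = 187


def rate_percent(count: int, total: int) -> float:
    """Express a number of misclassified emails as a percentage of a corpus."""
    return 100 * count / total


def false_negative_rate(sc_rate: float) -> float:
    """The complement of the spam catch rate (both in %)."""
    return 100 - sc_rate


# A single blocked email weighs very differently in the two corpora.
print(f"{rate_percent(1, HAM_TOTAL):.4f}%")         # one false positive in the ham corpus
print(f"{rate_percent(1, NEWSLETTER_TOTAL):.2f}%")  # one false positive among the newsletters
print(f"{false_negative_rate(99.25):.2f}%")         # false negative rate for a 99.25% catch rate
```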

SC rate: 99.25%

FP rate: 0.00%

Final score: 99.25

Project Honey Pot SC rate: 99.93%

Abusix SC rate: 97.12%

Newsletters FP rate: 0.5%

In 21 VBSpam tests, Bitdefender’s anti-spam solution has never been absent, yet even after three-and-a-half years, the product’s developers are always eager to see if things can be improved. I am sure they will investigate the product’s performance on the Abusix corpus, which caused the overall spam catch rate to drop a little this month. On the flip side, Bitdefender was the only complete solution not to miss a single legitimate email in this test, which is something to be celebrated, as is its 21st VBSpam award.

SC rate: 98.68%

FP rate: 0.01%

Final score: 98.64

Project Honey Pot SC rate: 98.39%

Abusix SC rate: 99.61%

Newsletters FP rate: 0.5%

This was CronLab’s third VBSpam test and the first time the product has missed a legitimate email. Compared to previous tests, the Swedish hosted solution achieved a significantly lower spam catch rate, though interestingly, it was mostly the Project Honey Pot spam that caused problems for the product. Nevertheless, CronLab easily earns its third VBSpam award.

SC rate: 99.72%

FP rate: 0.03%

Final score: 99.57

Project Honey Pot SC rate: 99.63%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.0%

There was a drop in FortiMail’s spam catch rate in the last test, so it was nice to see its performance bounce back this time around. With three false positives, the FP rate remained constant and the product did not miss a single newsletter. FortiMail earns its 20th VBSpam award.

SC rate: 99.71%

FP rate: 0.05%

Final score: 99.47

Project Honey Pot SC rate: 99.66%

Abusix SC rate: 99.87%

Newsletters FP rate: 5.9%

GFI MailEssentials achieved a higher spam catch rate in this test than in any of the previous eight tests in which it has participated, which is a nice achievement. The false positive rate increased a little on this occasion though – with five false positives it was about average. Despite the rise in false positives, GFI managed a decent final score and earns its ninth consecutive VBSpam award.

SC rate: 99.57%

FP rate: 0.06%

Final score: 99.29

Project Honey Pot SC rate: 99.45%

Abusix SC rate: 99.95%

Newsletters FP rate: 0.5%

This was Halon Security’s tenth VBSpam test and it was nice to see the product achieve its highest spam catch rate of the year. There were six false positives, but despite that the product’s final score increased a little and another VBSpam award is added to Halon’s tally, taking it to a total of ten.

SC rate: 99.08%

FP rate: 0.17%

Final score: 98.21

Project Honey Pot SC rate: 98.94%

Abusix SC rate: 99.49%

Newsletters FP rate: 1.1%

Last month, IBM just about scraped a VBSpam award, coming dangerously close to the threshold final score of 97. Things look a little better this month, although that is only thanks to a significantly increased spam catch rate: the product missed 18 legitimate emails – more than any other product in this test. The product earns its seventh VBSpam award, but its developers still have plenty of work to do.

SC rate: 99.93%

FP rate: 0.01%

Final score: 99.88

Project Honey Pot SC rate: 99.91%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

Kaspersky’s Linux Mail Security made an impressive debut in the last test, yet it managed to improve its performance even further this month. The 99.93% catch rate was the third highest of all complete solutions, while for the second time running, the product missed only a single legitimate email. It was only that email that stood between Kaspersky and a VBSpam+ award, but with the second highest final score this month, the developers can still be very pleased with their product’s performance.

SC rate: 99.96%

FP rate: 0.07%

Final score: 99.62

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.96%

SC rate pre-DATA: 77.94%

Newsletters FP rate: 0.5%

Libra Esva has always tended to achieve high spam catch rates in our tests, but 99.96% is impressive even by its own standards, and was the second highest catch rate in this test. There were seven false positives this time – more than we have seen from the product for a long time – which did cause it to drop down the tables a little, but Esva still easily earned its 15th VBSpam award in as many tests.

SC rate: 99.84%

FP rate: 0.02%

Final score: 99.74

Project Honey Pot SC rate: 99.80%

Abusix SC rate: 99.96%

Newsletters FP rate: 8.6%

It was nice to see Mailshell’s Anti-Spam SDK return to the test bench and even better to see the product – which is used by other anti-spam solutions – do so with a decent spam catch rate. This was combined with just two false positives, resulting in the third highest final score. The performance wins Mailshell its third VBSpam award in as many tests.

SC rate: 99.82%

FP rate: 0.15%

Final score: 99.05

Project Honey Pot SC rate: 99.76%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

Since joining the tests in March this year, McAfee’s Email Gateway appliance has, somewhat against the grain, constantly improved its spam catch rate. This test was no exception, but against that stands a spike in false positives, of which there were 16 this time (though, interestingly, none among the newsletters). This was not enough to prevent the product from winning a VBSpam award, but hopefully the developers will work hard to bring the FP rate back down for the next test.

SC rate: 99.83%

FP rate: 0.11%

Final score: 99.30

Project Honey Pot SC rate: 99.90%

Abusix SC rate: 99.62%

Newsletters FP rate: 3.2%

The spam catch rate for McAfee’s SaaS product dropped a little this time (a consequence of the re-introduction of the Abusix feed), while there was a small increase in the false positive rate. Neither is a good thing, of course, but such small differences were not enough to stand in the way of the product winning its eighth VBSpam award.

SC rate: 99.63%

FP rate: 0.03%

Final score: 99.48

Project Honey Pot SC rate: 99.55%

Abusix SC rate: 99.86%

SC rate pre-DATA: 75.27%

Newsletters FP rate: 12.3%

Netmail is the new name for VBSpam regular M+Guardian. Much as we care about aesthetics in the VB lab, ultimately it is about performance – and Netmail’s was good. The spam catch rate saw a huge increase, with the false negative rate reducing to less than one third of what it was, although the number of false positives increased. While a false positive score of 12.3% on the newsletter corpus is a little high, Netmail wins its first VBSpam award, the tenth for Messaging Architects.

SC rate: 99.999%

FP rate: 0.02%

Final score: 99.90

Project Honey Pot SC rate: 99.999%

Abusix SC rate: 100.00%

Newsletters FP rate: 9.6%

After 12 tests, it is hard to find appropriate adjectives to describe OnlyMyEmail’s catch rate, but as the product missed only a single spam message in a corpus of well over 134,000 emails, ‘impressive’ is an understatement. And yet again, with just two false positives, the product has one of the lowest false positive rates. If there is something that can be improved, it is the performance on newsletters, where the product blocked a number of mostly Swedish and Finnish newsletters, but that’s only a small detail and shouldn’t stop the developers from celebrating another VBSpam award, achieved with this month’s highest final score.

SC rate: 99.44%

FP rate: 0.03%

Final score: 99.29

Project Honey Pot SC rate: 99.36%

Abusix SC rate: 99.68%

Newsletters FP rate: 0.0%

With a small decrease in its spam catch rate and a small increase in false positives (though, yet again, not missing a single newsletter), Sophos’s Email Appliance dropped a little in the product rankings this month. However, its performance was still very decent and Sophos earns its 16th VBSpam award.

SC rate: 94.47%

FP rate: 0.08%

Final score: 94.09

Project Honey Pot SC rate: 99.30%

Abusix SC rate: 79.53%

Newsletters FP rate: 1.6%

It could well be that many of SPAMfighter’s customers see little Asian spam and thus its poor performance on the Abusix corpus in this test wouldn’t hurt them much. It did dent the product’s results though, putting the catch rate well below 95%. Add to that eight false positives (though this is actually a decrease from the previous test) and the Danish product fell well below the VBSpam threshold and thus misses out on an award this time round.

SC rate: 99.87%

FP rate: 0.04%

Final score: 99.68

Project Honey Pot SC rate: 99.83%

Abusix SC rate: 99.99%

Newsletters FP rate: 1.6%

In this test, SpamTitan equalled the excellent spam catch rate it achieved in the last test – although this time it did not manage to avoid false positives, thus a second VBSpam+ award was just out of reach. However, with a top-five performance there are still plenty of reasons for SpamTitan to celebrate yet another VBSpam award.

SC rate: 99.78%

FP rate: 0.03%

Final score: 99.64

Project Honey Pot SC rate: 99.71%

Abusix SC rate: 99.99%

Newsletters FP rate: 0.0%

In this test, Symantec managed to combine a small increase in its spam catch rate with a decrease in its false positive rate – and even avoided false positives on the newsletters altogether. No doubt this will give even more cause to celebrate the virtual solution’s 17th consecutive VBSpam award.

SC rate: 99.76%

FP rate: 0.07%

Final score: 99.42

Project Honey Pot SC rate: 99.70%

Abusix SC rate: 99.95%

SC rate pre-DATA: 79.76%

Newsletters FP rate: 0.5%

In this test, The Email Laundry showed a nice increase in its spam catch rate. The number of false positives for the hosted solution increased too – from five to seven – but that didn’t stop the product from climbing up the rankings. And while the pre-DATA spam catch rate dropped significantly, this was the case for all products set up to use this feature, and The Email Laundry once again had the highest performance in this category. The product’s 14th VBSpam award was thus well deserved.

SC rate: 96.99%

FP rate: 0.03%

Final score: 96.85

Project Honey Pot SC rate: 98.64%

Abusix SC rate: 91.89%

Newsletters FP rate: 0.0%

Vamsoft ORF was one of the products that had some difficulty with the re-introduced Abusix feed, causing the overall catch rate to drop just below 97% (while, in fact, the product’s catch rate on the Project Honey Pot corpus increased slightly). The product also missed three legitimate emails, which saw the final score drop a little further; with 96.85, Vamsoft misses out on a VBSpam award for the first time.

SC rate: 99.91%

FP rate: 0.04%

Final score: 99.72

Project Honey Pot SC rate: 99.88%

Abusix SC rate: 99.99%

SC rate pre-DATA: 79.34%

Newsletters FP rate: 2.1%

While many products saw their false positive rates increase, ZEROSPAM saw the number of missed legitimate emails decrease from 13 to four. What is more, the product did so while maintaining its high spam catch rate. With the fourth highest final score, the product’s fourth VBSpam award is thus very well deserved.

SC rate: 96.22%

FP rate: 0.03%

Final score: 96.07

Project Honey Pot SC rate: 98.05%

Abusix SC rate: 90.54%

SC rate pre-DATA: 77.45%

Newsletters FP rate: 0.0%

This wasn’t a good month for Spamhaus: the re-introduction of the Abusix corpus saw the product’s spam catch rate drop quite a bit, to just over 96%. This is not surprising if one looks at the pre-DATA performance of the four full solutions that are set up to have this measured: they all blocked less than 80% of emails before the DATA command was sent, while previously they all scored 96% or higher. It may well be that a lot of spam in this month’s test used otherwise legitimate servers and therefore could not have been blocked before the DATA phase.

So, while Spamhaus did not win a VBSpam award this time (apart from the low catch rate, the product also had three false positives), the fact that the catch rate was still above 96% does show the value of the DBL, the domain-based blacklist offered by Spamhaus.

SC rate: 67.03%

FP rate: 0.00%

Final score: 67.03

Project Honey Pot SC rate: 67.45%

Abusix SC rate: 65.7%

Newsletters FP rate: 0.0%

In its eighth appearance on the VBSpam test bench, the SURBL domain-based blacklist yet again did not miss a single legitimate email. This may seem a trivial thing (after all, SURBL only looks at domain names), but it is worth noting that many false positives picked up by other solutions happen because of incorrectly blacklisted domains – so avoiding false positives while still blocking over two-thirds of all spam is not an easy thing to achieve.


I have somewhat mixed feelings about this month’s test results.

On the one hand, it is clear that many products saw their catch rates – which had gradually slid down throughout the year – bounce back a little. This is really good news.

On the other hand, some products had serious problems with the introduction of more Asian-language spam, while many products found some non-English legitimate emails hard to deal with. There is certainly some room for improvement. We will be watching to see whether participants can make these improvements.

The next VBSpam test will run in October 2012, with the results scheduled for publication in November. Developers interested in submitting products should email [email protected].