2014-03-10

Abstract

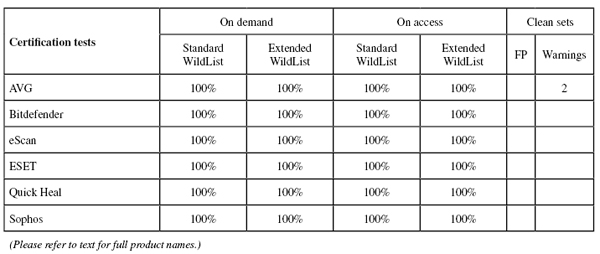

For the first time in living memory, this test saw a clean sweep of certification passes, with all products reaching the required standard for a VB100 badge, and most also doing well in terms of stability. John Hawes has all the details.

Copyright © 2014 Virus Bulletin

The members of the VB test team usually look forward to the annual Linux test as something of a break from the much larger comparatives we run on other platforms. With far fewer companies offering Linux solutions, the test is always much quieter than those on the Windows platforms, and the ease of automating tasks is an additional bonus.

This Linux comparative is even smaller than usual: a number of products we might expect to see taking part were notable by their absence, and a handful of other products that were initially entered for the test were found to be incompatible with our testing procedures.

Although the start of the testing period overlapped with the previous comparative, and the end of it threatened to coincide with a rather disruptive lab move, we managed to get through the required tests in fairly decent time, helping us realign our schedule with pre-existing plans.

Ubuntu continues to thrive, combining popularity in the consumer desktop market with strong inroads into server and corporate deployment. According to Wikipedia, it runs on more than a quarter of all Linux-based web servers. The version used in this test, 12.04 LTS, was released in April 2012 and is the most recent ‘Long Term Support’ version available.

Installation of the platform is a fairly slick and user-friendly process, in stark contrast to the Linux set-up of days gone by, with all of the hardware in our relatively new test machines fully supported out of the box. We opted for a fairly minimal install, with no GUI and only a basic set of software, as this would be the standard set-up in most serious server environments: only necessary items should be installed, for both security and efficiency reasons.

The server systems were prepped with our standard sets of samples and scripts, with this being the last outing of our speed measurement set in its current incarnation – work is already well under way to build a fresh new set. The only change to the speed sets for this test was the addition of a set of Linux files, harvested from the main operating directories of a well-used Linux server machine.

We would generally advise readers to avoid comparing our speed and performance figures directly between tests, as other variables apply, but it is particularly difficult to compare between our normal Windows tests and Linux comparatives as there are some fundamental differences in the testing processes employed.

While we try to keep things as similar as possible between tests, including using the same sample sets and automation systems wherever possible, our Linux tests operate on two machines: the server for which protection is being tested, and a client from which all on-access tests are performed. This is intended to represent a normal client system connecting to a file server, and either being allowed to access or prevented from accessing various items stored there by the solution under test.

In this case, the client systems ran Windows 7 Professional SP1, recycling an image used in a previous comparative with some minor tweaks, and had shares from the test servers mounted as network drives via Samba. For the on-access protection tests we simply used the normal scripts we would deploy in a Windows comparative, and for speed and performance tests we used slightly tweaked versions of our standard automation. This allowed some simple communication with the server systems, controlling the recording of resource use and so on during the various test phases.
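To make the client-side measurement idea concrete, here is a minimal sketch of how an on-access overhead figure could be derived: read every file on a mounted share once without protection, once with it, and compare the timings. This is not the automation used in the test; the function names and the drive path in the usage note are hypothetical.

```python
import os
import time


def time_read_tree(root):
    """Read every file under root once; return (seconds, bytes_read)."""
    total_bytes = 0
    start = time.perf_counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as handle:
                    while True:
                        chunk = handle.read(1024 * 1024)
                        if not chunk:
                            break
                        total_bytes += len(chunk)
            except OSError:
                # A blocked or unreadable file is skipped, not fatal.
                continue
    return time.perf_counter() - start, total_bytes


def overhead_percent(baseline_seconds, protected_seconds):
    """Extra time taken with protection active, as a percentage of baseline."""
    if baseline_seconds <= 0:
        raise ValueError("baseline must be positive")
    return (protected_seconds - baseline_seconds) / baseline_seconds * 100.0
```

A run might call `time_read_tree("Z:\\speedsets")` (an assumed drive letter and folder) before and after enabling the product, then pass the two timings to `overhead_percent`. Caching on the client and server will distort single runs, so several passes would need to be averaged in practice.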

As the set of standard activities used for our main time delay testing is designed primarily to work on Windows, some sections of it may be less significant here than usual. For example, in the step where samples are downloaded from a local web server, there should be no way for the Linux back-end to tell how these files are being written to it, be it a web download or a simple copy from another local location. The rest of the activities, dominated by copy/paste/delete and archiving, should impact the file server in a normal way despite the fact that the script coordinating the actions is running on the Windows client.

For the certification tests, we used the latest WildList available on the test deadline of 18 December, which was the November list released that very day. This should be the last time we see separate ‘Standard’ and ‘Extended’ lists, with the two due to be merged before the next comparative.

Our clean sets were updated with a healthy swathe of new items, and pruned to remove older samples where necessary. The final sets used for false positive testing amounted to around 985,000 files, 250GB of data. The remaining test sets – containing malware samples used for the now unified Response and RAP tests – were compiled daily using the latest samples gathered in the appropriate time frame, according to our history of previously encountered samples.

With the prep work all complete, we were ready to get busy looking at the small group of products participating in the comparative.

Main version: 13.0.3114

Update versions: 3657/6926, 3681/7005, /7019, /7034

Last 6 tests: 5 passed, 1 failed, 0 no entry

Last 12 tests: 10 passed, 2 failed, 0 no entry

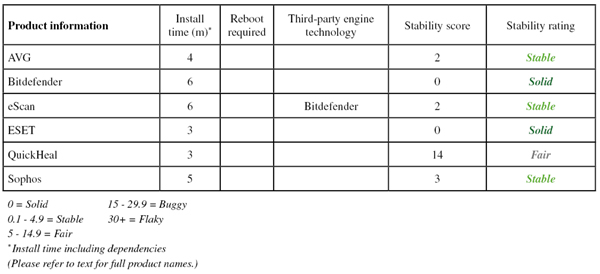

AVG’s product is provided in a range of formats including RPM, .deb and simple shell-script installers. We selected the .deb variant as the most suitable for our systems, and it appeared to install happily. However, initial experiments found many of the main components refusing to operate properly. Some moments of confusion followed, but with a little assistance from the developers, we soon realized that the product is 32-bit only and so required some additional compatibility libraries to function. With these in place, all seemed fine.
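A mismatch of this kind can be spotted before installation by inspecting the binaries themselves. The sketch below reads the ELF identification bytes, where the fifth byte (EI_CLASS) is 1 for 32-bit and 2 for 64-bit objects. It is a generic check, not part of any vendor's installer, and the function name is our own.

```python
def elf_class(path):
    """Return 32 or 64 for an ELF file, or None if it is not ELF."""
    with open(path, "rb") as handle:
        header = handle.read(5)
    if len(header) < 5 or header[:4] != b"\x7fELF":
        return None
    # Byte 4 (EI_CLASS): 1 = 32-bit objects, 2 = 64-bit objects.
    return {1: 32, 2: 64}.get(header[4])
```

If this returns 32 for a product's main daemon on a 64-bit server, 32-bit compatibility libraries will most likely be needed before the product can run.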

Operation was fairly intuitive, with a handful of main daemons controlled by a set of binaries which are used to pass in settings, and the scanner provided a good range of options in a simple and sensible style.

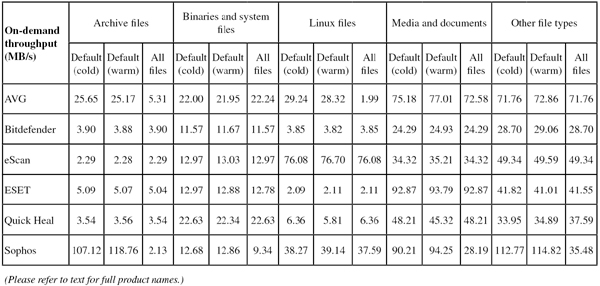

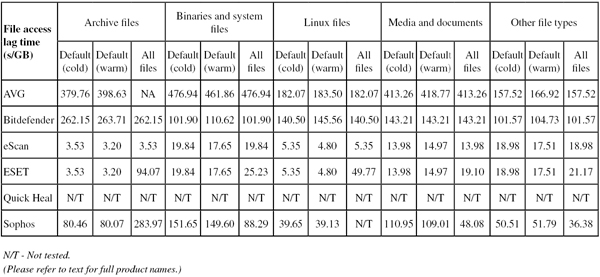

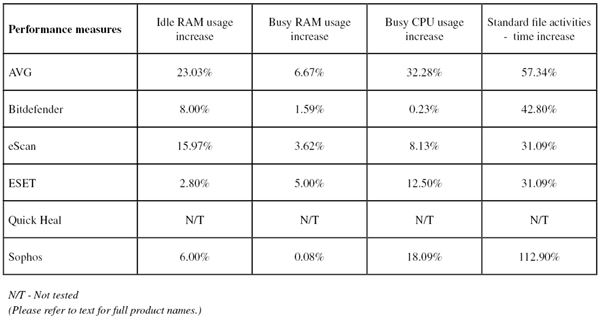

On-demand scanning speeds were pretty zippy with the standard settings, and slower in most areas with full archive and file type handling enabled. On-access overheads were heavy though, with all our sample sets taking a long time to plod through. Resource use was a little high, and our set of tasks took a fair time to process. There were a few minor issues, mostly down to the confusion caused by absent dependencies, and a Stable rating is earned.

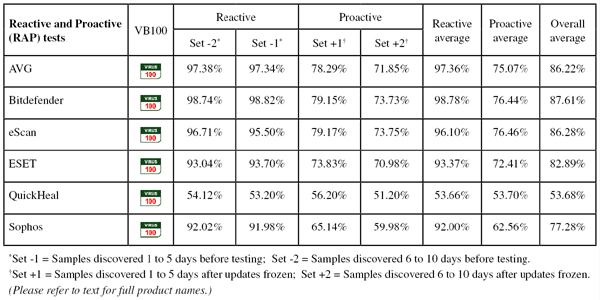

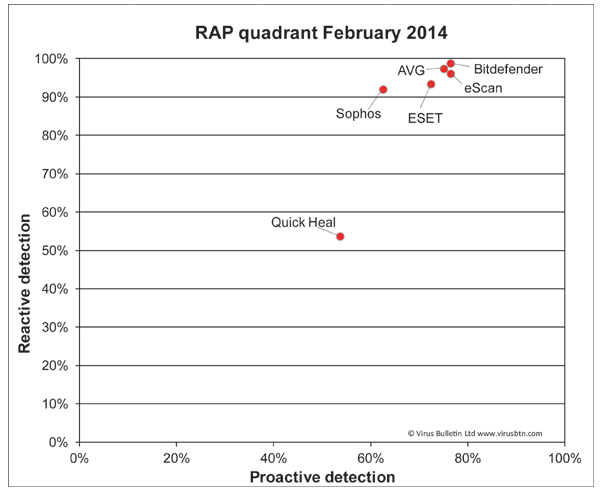

Detection was very good, dropping off fairly noticeably into the later parts of the proactive sets. The WildList and clean sets were handled well, and a VB100 award is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Stable

Main version: 3.1.2.131213

Update versions: 27557

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 12 passed, 0 failed, 0 no entry

Bitdefender’s Samba-focused version is provided as a .deb install package wrapped in a script, which sets most things up nicely. A few additional manual steps are required to enable the integration with Samba, directing share access to the scanner via a VFS object. The configuration system again uses a tool to pass in settings rather than the more standard approach of a human-editable configuration file, but with a little practice the complex syntax proved reasonably usable.

Scanning speeds were not super-fast, with overheads a little heavier than some for simple file reading too. Resource use was low though, especially CPU use, and our set of tasks didn’t take too long to complete. There were no problems with stability, and a Solid rating is earned.

Detection was very good indeed, with superb figures in the reactive sets and pretty good scores in the proactive sets too. The certification sets were dealt with cleanly, and a VB100 award is earned.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Solid

Main version: 7.52159

Update versions: 7.52777, 7.52901

Last 6 tests: 5 passed, 1 failed, 0 no entry

Last 12 tests: 11 passed, 1 failed, 0 no entry

The Linux solution from eScan came as a handful of .deb install packages, plus a fairly lengthy list of dependencies to fulfil before getting started – which made for a little extra work, but saved us from having to dig around to find out what was required for ourselves. Again, on-access protection is for Samba shares only, using the VFS object approach and requiring a little manual tweaking of Samba config files to get it enabled.

The product’s layout is a little fiddly, with much of the configuration only possible via a web-based graphical interface – meaning that on our GUI-free servers we had to move to another machine to perform changes. For the on-demand scanner at least, full control is available at the local command line, with reasonably clear and sensible syntax.

Scanning speeds were pretty decent, very fast indeed over our sets of Linux files, and on-access lag times were very low too. RAM use was a little high at idle, but fine when busy, and CPU use was fairly low too. Our set of tasks was not too heavily impacted, and stability was decent with just a few minor issues accessing our automation tools from the client system (which was blocked initially before access was granted).

Detection was again excellent: reactive sets were not covered quite as well as they were by Bitdefender, whose engine is included here alongside ClamAV and some additional technology of eScan’s own, but the proactive sets were very well handled, and with no issues in the certification sets, eScan also earns a VB100 award.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Stable

Main version: 4.0.10

Update versions: 9187, 9293, 9313, 9341

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 12 passed, 0 failed, 0 no entry

ESET’s Linux file server product is also provided as a .deb package, and installation was smooth and simple. For once, configuration is provided in the traditional manner with a well-commented config file which can be edited to change settings. On-access protection is available via a number of approaches, and we opted for the Samba-share-only method, requiring just a simple tweak to one of the Samba start-up files.

The layout is fairly clear and usable, helped a lot by clear commenting and documentation, and the scanner options are logical and easy to use too.

Scanning was mostly fairly fast, but a little slower than most over our set of Linux files, while overheads were pretty low, even with the archive-handling settings turned up. Resource use was low and our set of tasks got through in decent time. There were no stability issues, and the product earns a Solid rating.

Detection was pretty strong, dropping away a little into the proactive sets, but the certification sets presented no problems and a VB100 award is well deserved.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Solid

Main version: 15.00

Update versions: N/A

Last 6 tests: 5 passed, 0 failed, 1 no entry

Last 12 tests: 9 passed, 2 failed, 1 no entry

Quick Heal’s offering was provided as a simple zip file containing an installer shell script, which seemed to set things up nicely. Again, only Samba shares are protected, using the VFS object approach, with a little manual work involved in setting things up.

The configuration is fine-tuned using tools rather than by editing simple files, but for the on-demand scanner, options are passed on the command line in the normal manner, with a sensible format.

Scanning speeds were reasonable, but attempting to measure on-access speed, resource use and impact on standard tasks proved rather difficult, with our tools freezing up at the client end as access to Samba shares cut out, apparently overwhelmed by the repeated and rapid attempts to access files. We also saw some on-demand jobs crashing out – mainly in the core false positive sets but also in the sets used for speed measures – meaning that we had to repeat a number of the scan attempts. Most of the problems proved to be associated with a couple of reproducible issues, and a Fair stability rating is just about earned.

Detection was a little disappointing across the board, but the certification sets were properly handled, with nothing missed in the WildList sets and no false alarms in the clean sets, thus earning Quick Heal a VB100 award.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Fair

Main version: 4.94.0

Update versions: 3.48.0/4.96, 4.97.0/3.50.2/4.97

Last 6 tests: 6 passed, 0 failed, 0 no entry

Last 12 tests: 11 passed, 1 failed, 0 no entry

Sophos’s Linux product promised full on-access protection of the entire system, with previous experience teaching us that the Talpa hooking system used comes either as a pre-built binary, for major Linux versions, or in source form for compilation on-the-fly during the install process. Initial install attempts proved tricky, with the scripted process completing with apparent success but then reporting that the on-access component was unavailable due to unspecified problems. Much research eventually revealed that some of the required build tools were absent from our system. Adding the required tools made everything work nicely, but clearer reporting of the problem would have enabled us to pin it down much more quickly.
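A simple pre-flight check would have surfaced this kind of problem straight away. The sketch below reports which of a given list of commands cannot be found on the PATH. The review does not say which build tools were missing, so the tool names in the usage note are purely illustrative assumptions, and the function is not part of the Sophos installer.

```python
import shutil


def missing_tools(tools):
    """Return the names in tools that cannot be found on PATH."""
    return [name for name in tools if shutil.which(name) is None]
```

For instance, `missing_tools(["gcc", "make"])` (an assumed list) returns an empty list when both commands are available, and otherwise names whichever are absent.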

The layout again eschews traditional configuration files in favour of a suite of control tools, which can be a little confusing at first but soon becomes fairly usable. Scanning is much more traditional, with a clear and comprehensive set of command-line options.

Scanning speeds were very fast over archives with the default settings, where a list of file extensions is employed, and pretty speedy elsewhere too, even with the settings turned up. Overheads were fairly average, but one set (our set of Linux samples) failed to complete properly with archive handling enabled – this is likely to be due to odd file types or deeply nested archives. This was the only issue observed, meaning a Stable rating is merited. RAM use was low, CPU use a little higher than some at busy times, and our set of activities took a surprisingly long time to complete.

Detection was pretty strong in the reactive sets where cloud lookups are available, dropping quite sharply in the proactive sets where the product does not have the benefit of this technology. The core certification sets were well dealt with, meaning a VB100 award is earned by Sophos.

ItW Std: 100.00%

ItW Std (o/a): 100.00%

ItW Extd: 100.00%

ItW Extd (o/a): 100.00%

False positives: 0

Stability: Stable

As the test deadline approached we heard from two regular participants in our comparatives, Avast Software and Kaspersky Lab, who informed us that their respective Linux solutions were about to receive a major new version, so it would not be worth testing old and almost obsolete editions in this round of tests.

Products from two additional firms were submitted for testing and quite some time was spent installing and exploring them before we decided to exclude them from the final test. An offering from Norman was found only to provide on-access protection in the case of local read actions, and did not protect remote users connected via Samba, while a product from Total Defense proved to provide on-demand scanning only.

Without proper protection of Samba shares, neither product would have qualified for certification, and most of our speed and performance measures would have been useless, so both were left out of the test.


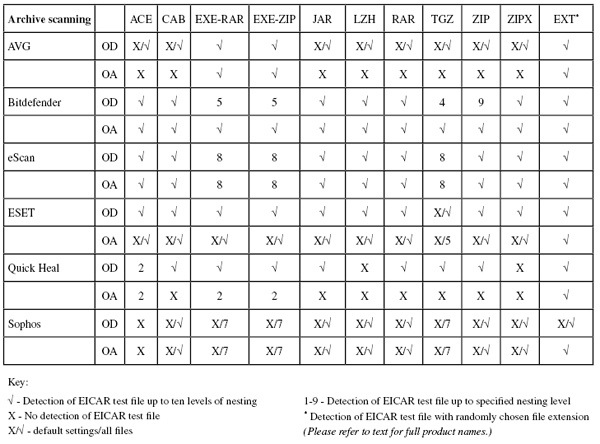


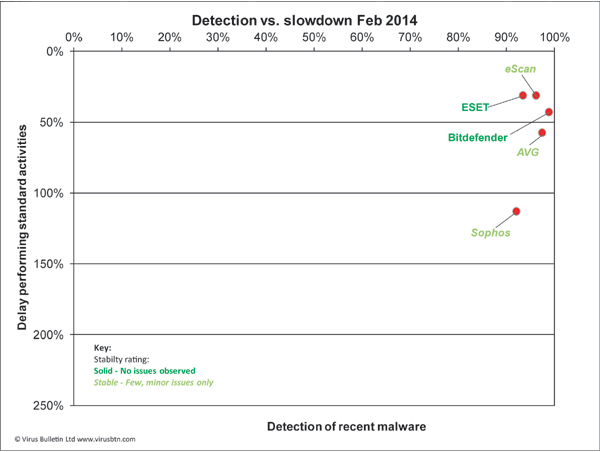


This was a fairly small test, but one which presented its share of difficulties – most of them as usual related to figuring out how to set up and configure products. Many participants provided detailed instructions, others complete manuals, but a few simply handed over an installer and left things up to us, which made for a good test of their products’ ease of use. In the end, we managed to figure most things out without too much head scratching or resorting to contacting developer teams for help.

For the first time in living memory, we have a clean sweep of certification passes, with all products reaching the required standard for a VB100 badge. Most also did well in terms of stability, although the problems we saw in one were fairly severe and meant losing a big chunk of the performance data we would have liked to have provided.

Linux support seems to be dwindling, or at least being given less priority by the firms that do provide it, but Linux systems continue to dominate many areas of the diverse server market, and continue to pick up fans in the consumer world too. So, it’s clear that protection is still needed, both to guard servers themselves from nasty Linux malware hijacking server credentials and taking over website installations, and to provide an extra layer of defence for clients connecting to file shares and other network resources. Hopefully, this time next year we will see a fuller field of participants.

The next test will be back to the Windows platform with Windows 7, and we expect many more products to participate. We’ll be making a few more incremental adjustments to our tests too, with a few planned expansions of our performance measurement processes alongside the replacement of our speed sets – as well as getting a first look at the new WildList format.

Test environment: All tests were run on identical systems with AMD A6-3670K Quad Core 2.7GHz processors, 4GB DUAL DDR3 1600MHz RAM, dual 500GB and 1TB SATA hard drives and gigabit networking, running Ubuntu Linux 12.04 LTS, 64-bit Server Edition. Client systems used the same hardware and Microsoft Windows 7 Professional, 32-bit edition.

Any developers interested in submitting products for VB's comparative reviews, or anyone with any comments or suggestions on the test methodology, should contact [email protected]. The current schedule for the publication of VB comparative reviews can be found at http://www.virusbtn.com/vb100/about/schedule.xml.