Virus Bulletin

Copyright © Virus Bulletin 2016

Since we first started the VBSpam tests in the spring of 2009, we have run 44 official VBSpam tests, each covering a period of about two weeks.

Yet, in the periods between these tests our lab has been just as active, with thousands of emails continuing to flow through the products in real time. We have been using these between-test periods to provide those participating in the VBSpam tests (as well as a number of other companies that have asked us to measure the performance of their products on a consultancy basis) with feedback on what kind of emails have been misclassified by their products. For many participants, this regular feedback is just as valuable as the reports themselves, which demonstrate to the wider community how well the products have performed.

At the end of this month's test, however, we took a short break in order to move all our test machines to a brand new lab, which should allow the VBSpam tests to grow in both size and depth. This accounts for the delay in the publication of this report, as well as the fact that it is slightly shorter than previous ones.

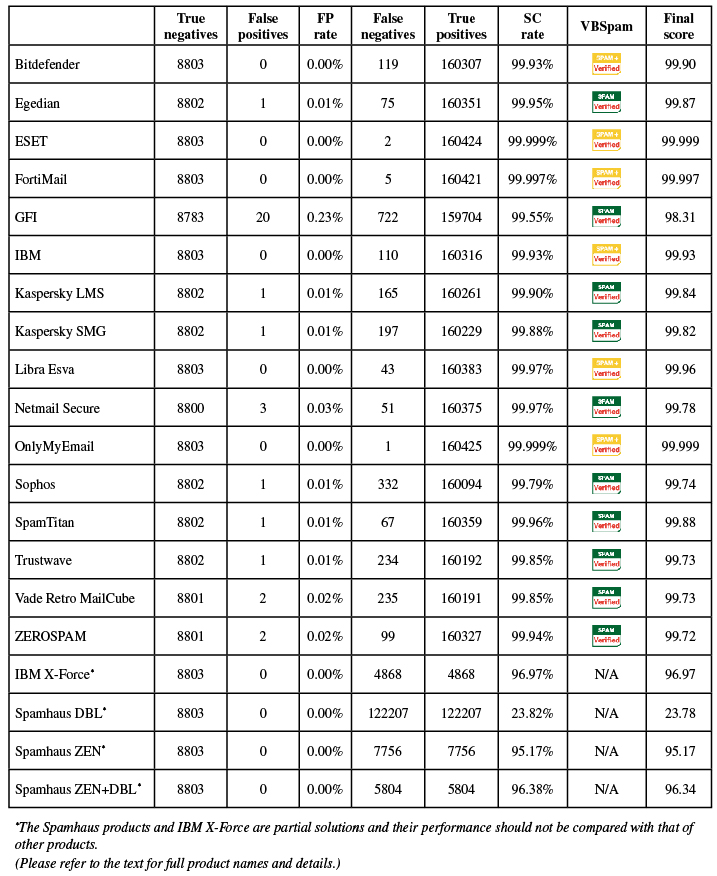

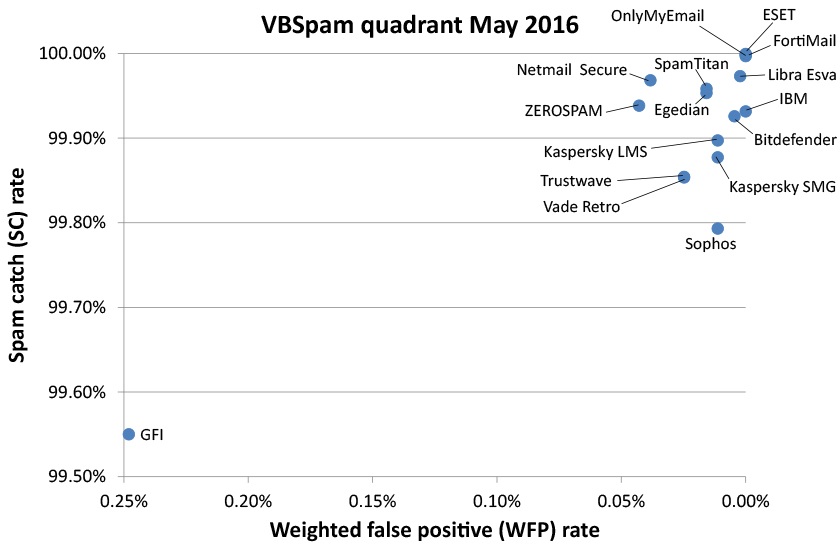

A total of 16 full email security (or anti-spam) solutions took part in this test, all of which achieved VBSpam certification. Six of them performed well enough to earn the VBSpam+ accolade.

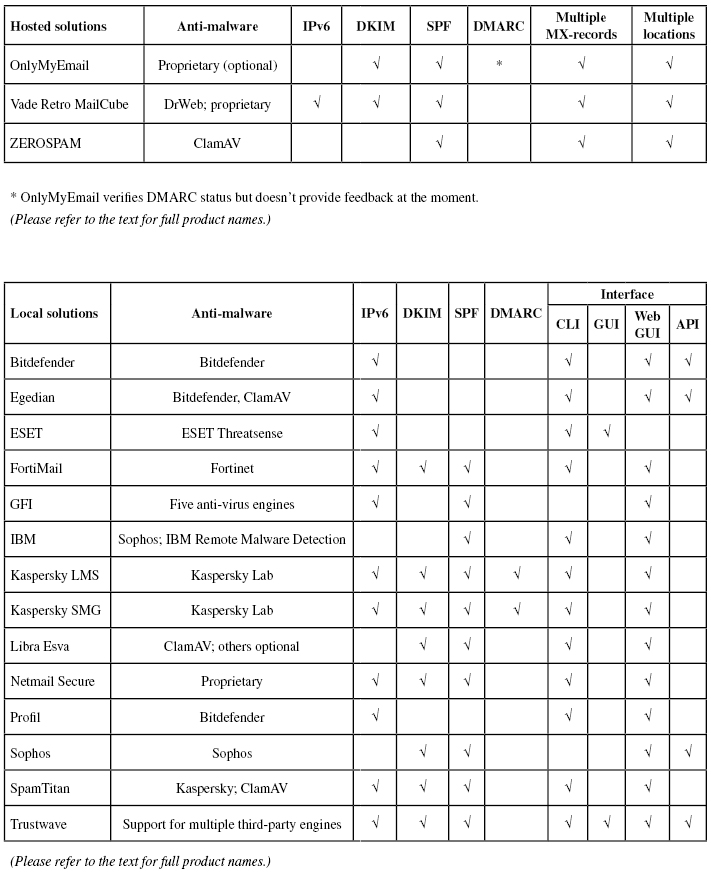

The VBSpam test methodology can be found at https://www.virusbulletin.com/testing/vbspam/vbspam-methodology/. As usual, emails were sent to the products in parallel and in real time, and products were given the option to block email pre-DATA (that is, based on the SMTP envelope and before the actual email was sent). However, on this occasion no products chose to make use of this option.

For those products running on our equipment, we use Dell PowerEdge machines. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers' requirements and note that the amount of email we receive is representative of that received by a small organization.

To compare the products, we calculate a 'final score', which is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 × min(#newsletter false positives, 0.2 × #newsletters)) / (#ham + 0.2 × #newsletters)

Final score = SC - (5 × WFP)
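As a rough illustration of the two formulas above, the following Python sketch computes the WFP rate and the final score. The function and parameter names are assumptions made for this example, and the rates are expressed in percentage points so that the WFP rate can be subtracted directly from the SC rate. The sample figures for the product are hypothetical; only the corpus sizes are taken from this test.

```python
def wfp_rate(ham_fps, newsletter_fps, ham_total, newsletter_total):
    """Weighted false positive rate, in per cent.

    Newsletter false positives carry a weight of 0.2 and are capped
    at 20% of the newsletter corpus, as in the formula above.
    """
    capped_newsletter_fps = min(newsletter_fps, 0.2 * newsletter_total)
    weighted_fps = ham_fps + 0.2 * capped_newsletter_fps
    weighted_total = ham_total + 0.2 * newsletter_total
    return 100.0 * weighted_fps / weighted_total


def final_score(sc_rate, wfp):
    """Final score: SC rate minus five times the WFP rate."""
    return sc_rate - 5.0 * wfp


# Hypothetical product: SC rate of 99.93%, no ham false positives,
# two newsletter false positives, measured on this test's corpus sizes.
wfp = wfp_rate(ham_fps=0, newsletter_fps=2, ham_total=8803, newsletter_total=336)
print(round(final_score(99.93, wfp), 2))
```

Note how the cap inside `min` limits the damage that newsletter misclassifications can do: once a product has blocked 20% of the newsletters, further newsletter false positives no longer lower its score.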

Products earn VBSpam certification if the value of the final score is at least 98.

Meanwhile, products that combine a spam catch rate of 99.5% or higher with no false positives in the ham corpus and no more than 2.5% false positives among the newsletters earn a VBSpam+ award.
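The award rules described above can be sketched as a small decision function. This is a minimal illustration rather than the lab's actual tooling: the function name and signature are invented for the example, it assumes that a VBSpam+ product must also meet the certification threshold, and it leaves out the speed criteria, which were not applied in this test.

```python
def vbspam_award(sc_rate, final_score, ham_false_positives, newsletter_fp_rate):
    """Return 'VBSpam+', 'VBSpam' or None for one product.

    sc_rate, final_score and newsletter_fp_rate are in per cent;
    ham_false_positives is a count of misclassified ham emails.
    """
    certified = final_score >= 98.0
    plus = (
        sc_rate >= 99.5
        and ham_false_positives == 0
        and newsletter_fp_rate <= 2.5
    )
    if certified and plus:  # assumption: VBSpam+ implies certification
        return "VBSpam+"
    if certified:
        return "VBSpam"
    return None


# Hypothetical inputs, chosen only to exercise each branch.
print(vbspam_award(99.999, 99.999, 0, 0.0))
print(vbspam_award(99.55, 98.31, 20, 3.0))
print(vbspam_award(96.97, 96.97, 0, 0.0))
```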

Extra criteria based on the speed of delivery of emails in the ham corpus were not included on this occasion, as a number of network issues meant we could not be 100 per cent confident about the accuracy of the speed measurements. However, we believe that the awards achieved would have been unchanged had speed data been included.

The test ran for 18 days, from 12am on 23 April to 12am on 11 May 2016 – slightly longer than normal to account for some interruptions as described below.

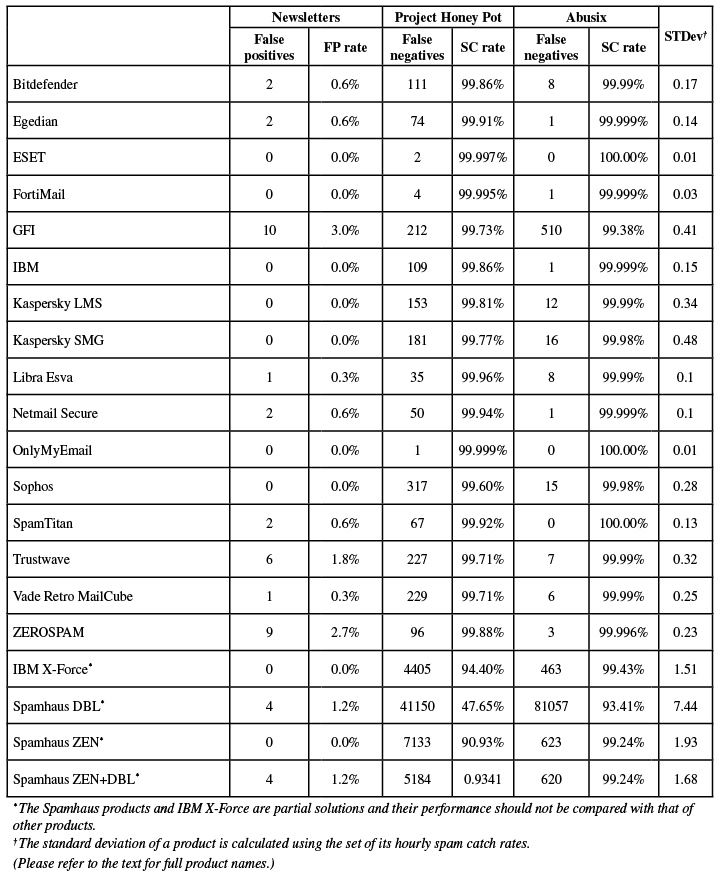

The test corpus consisted of 169,565 emails. 160,426 of these emails were spam, 78,612 of which were provided by Project Honey Pot, with the remaining 81,814 spam emails provided by spamfeed.me, a product from Abusix. They were all relayed in real time, as were the 8,803 legitimate emails ('ham') and 336 newsletters.
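Because the spam corpus is simply the two feeds taken together, a product's overall SC rate is the average of its per-feed SC rates, weighted by the size of each feed. The short Python sketch below shows this arithmetic using the feed sizes given above; the function name is an assumption made for the example. Since the published per-feed rates are rounded, the result can only be expected to match a published overall rate approximately.

```python
PROJECT_HONEY_POT_SPAM = 78_612  # spam emails from Project Honey Pot
ABUSIX_SPAM = 81_814             # spam emails from Abusix's spamfeed.me


def combined_sc_rate(project_honey_pot_rate, abusix_rate):
    """Overall SC rate (per cent) from the two per-feed SC rates (per cent)."""
    total = PROJECT_HONEY_POT_SPAM + ABUSIX_SPAM
    caught = (
        PROJECT_HONEY_POT_SPAM * project_honey_pot_rate
        + ABUSIX_SPAM * abusix_rate
    )
    return caught / total


# Per-feed rates of 94.40% and 99.43%, as reported for one of the
# partial solutions in this test, combine to an overall rate near 96.97%.
print(round(combined_sc_rate(94.40, 99.43), 2))
```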

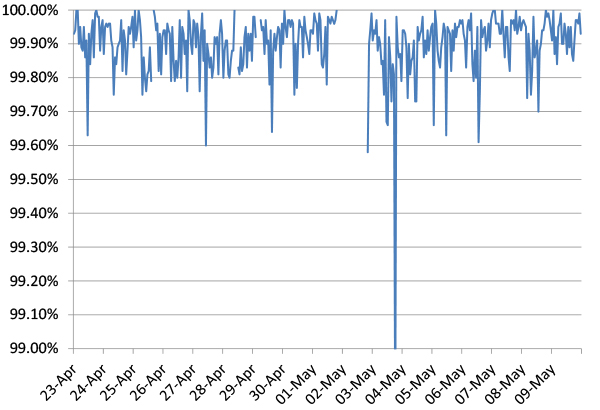

Figure 1 shows the catch rate of all full solutions throughout the test. To avoid the average being skewed by poorly performing products, the highest and lowest catch rates have been excluded for each hour.

Figure 1: Spam catch rate of all full solutions throughout the test period.
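The averaging used for Figure 1 amounts to a trimmed mean taken over the products for each hour. A minimal Python sketch, assuming the hourly catch rates of all products are available as a plain list (the function name and the sample values are hypothetical):

```python
def trimmed_hourly_average(catch_rates):
    """Average one hour's catch rates after dropping the highest and lowest.

    catch_rates holds one value per product for a single hour.
    """
    if len(catch_rates) < 3:
        raise ValueError("need at least three products to trim both extremes")
    ordered = sorted(catch_rates)
    kept = ordered[1:-1]  # drop the single lowest and single highest value
    return sum(kept) / len(kept)


# Hypothetical hour in which one product performed poorly:
# the 90.0 outlier no longer drags the average down.
print(trimmed_hourly_average([99.9, 99.8, 90.0, 100.0, 99.7]))
```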

Two things are noticeable from this graph. The first is that spam catch rates remain very high, although slightly lower than in the last test.

The second noticeable thing is a number of small gaps: these mark hours during which testing conditions weren't optimal, leading us to exclude the affected emails from the test. As our goal is to measure accurately and realistically rather than to run an always-on system of mail servers, this is not an issue, though we did extend the test by two days to make up the numbers.

The performance of some filters dropped slightly, though noticeably, on the evening of 4 May, but this appears to have been caused by a number of unrelated emails rather than by a single campaign.

Although participating products all performed very well, some spam emails were missed, among which were some with malicious content. Of course, given the prevalence of the ransomware threat, this is something users are extremely concerned about. In future tests, we will be examining the ability of products to block this type of email in particular.

Three products – the OnlyMyEmail hosted email solution, ESET Mail Security for Microsoft Exchange, and Fortinet's FortiMail appliance – achieved a final score that, rounded to two decimals, would be 100. Each of these products had no false positives, not even among the newsletters, and missed just one, two and five spam emails respectively.

Clearly, these three products achieved a VBSpam+ award, as did IBM, Bitdefender and Libra Esva. The remaining 10 products all achieved a VBSpam award; in many cases, it was just a single false positive that stood in the way of them achieving a VBSpam+ award. For detailed descriptions of the products, we refer to previous test reports.

Regular readers of these reports will notice the appearance of Trustwave's Secure Email Gateway product. This is a new participant, although a previous incarnation of the same product impressed us with its performance in the very early VBSpam tests. The product runs on Windows and, with a very good catch rate, came within a whisker (that is, within one false positive) of a VBSpam+ award.

IBM's X-Force API is also new to the test. The product is an IP blacklist, which queried the sending IP address of every email and made its blocking decision based solely on that. It therefore entered the test as a partial solution, whose performance shouldn't be compared with that of full solutions and not necessarily even with that of other partial solutions. Still, even outside of that context, blocking almost 97 per cent of spam emails based on the IP address alone is pretty impressive.
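To make the idea of an IP-only blocking decision concrete, the sketch below checks a sending address against a small local list using Python's standard `ipaddress` module. It is a generic illustration and says nothing about how the X-Force API itself works or is queried; the list contents are hypothetical and use address ranges reserved for documentation.

```python
import ipaddress

# Hypothetical blacklist entries (documentation ranges, not real listings).
BLACKLIST = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_blocked(sending_ip):
    """Decide on the sending IP address alone, ignoring headers and body."""
    address = ipaddress.ip_address(sending_ip)
    return any(address in network for network in BLACKLIST)


print(is_blocked("192.0.2.55"))
print(is_blocked("203.0.113.9"))
```

A filter of this kind never sees the content of an email, which is why its results are not directly comparable with those of products that do.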

| SC rate | FP rate | Final score | Project Honey Pot SC rate | Abusix SC rate | Newsletters FP rate |
|---|---|---|---|---|---|
| 99.93% | 0.00% | 99.90 | 99.86% | 99.99% | 0.6% |
| 99.95% | 0.01% | 99.87 | 99.91% | 99.999% | 0.6% |
| 99.999% | 0.00% | 99.999 | 99.997% | 100.00% | 0.0% |
| 99.997% | 0.00% | 99.997 | 99.995% | 99.999% | 0.0% |
| 99.55% | 0.23% | 98.31 | 99.73% | 99.38% | 3.0% |
| 99.93% | 0.00% | 99.93 | 99.86% | 99.999% | 0.0% |
| 99.90% | 0.01% | 99.84 | 99.81% | 99.99% | 0.0% |
| 99.88% | 0.01% | 99.82 | 99.77% | 99.98% | 0.0% |
| 99.97% | 0.00% | 99.96 | 99.96% | 99.99% | 0.3% |
| 99.97% | 0.03% | 99.78 | 99.94% | 99.999% | 0.6% |
| 99.999% | 0.00% | 99.999 | 99.999% | 100.00% | 0.0% |
| 99.79% | 0.01% | 99.74 | 99.60% | 99.98% | 0.0% |
| 99.96% | 0.01% | 99.88 | 99.92% | 100.00% | 0.6% |
| 99.85% | 0.01% | 99.73 | 99.71% | 99.99% | 1.8% |
| 99.85% | 0.02% | 99.73 | 99.71% | 99.99% | 0.3% |
| 99.94% | 0.02% | 99.72 | 99.88% | 99.996% | 2.7% |

The products listed below are 'partial solutions', which means they only have access to part of the emails and/or SMTP transaction, and are intended to be used as part of a full spam solution. As such, their performance should not be compared with that of the full solutions listed above, nor necessarily with each other's.

| SC rate | FP rate | Final score | Project Honey Pot SC rate | Abusix SC rate | Newsletters FP rate |
|---|---|---|---|---|---|
| 96.97% | 0.00% | 96.97 | 94.40% | 99.43% | 0.0% |
| 23.82% | 0.00% | 23.78 | 47.65% | 0.93% | 1.2% |
| 95.17% | 0.00% | 95.17 | 90.93% | 99.24% | 0.0% |
| 96.38% | 0.00% | 96.34 | 93.41% | 99.24% | 1.2% |

In this, the 44th VBSpam test, spam catch rates continued to be very good. Yet emails do occasionally slip through the net of email security solutions, and while one may argue we are doing as well as we can, those missed spam emails often have malware attached and can thus cause real harm. For that reason, in future tests we will be looking at how well spam filters block specific campaigns.