Virus Bulletin

Copyright © Virus Bulletin 2016

Over the years, cybercriminals have perfected the way they spread malware through email. They craft their emails to look like messages that people are likely to receive in everyday life, such as receipts or delivery notifications, and use attachments in file formats that people are used to receiving – quite often Word or Excel files in which the malware‑downloading component requires activation by a gullible user. (It has long been known that end-users are the weakest link in just about every system.)

There are many things that individuals and organizations can reasonably be expected to do to make malware infections less likely, but blocking all Office documents attached to emails is not one of them. And thus there is no way to a priori block these emails and their malicious payload.

But how bad are things, really? After all, while there are millions of emails like this being sent every day, almost every mailbox is protected by an email security solution, or spam filter.

In this report, we share some good news: spam filters block the overwhelming majority of these malware-containing emails, at a rate even higher than that at which they block 'ordinary' spam. And while one should never be complacent about threats, this does explain why, despite all the dangers out there, for most people the Internet in general, and email in particular, continues to work.

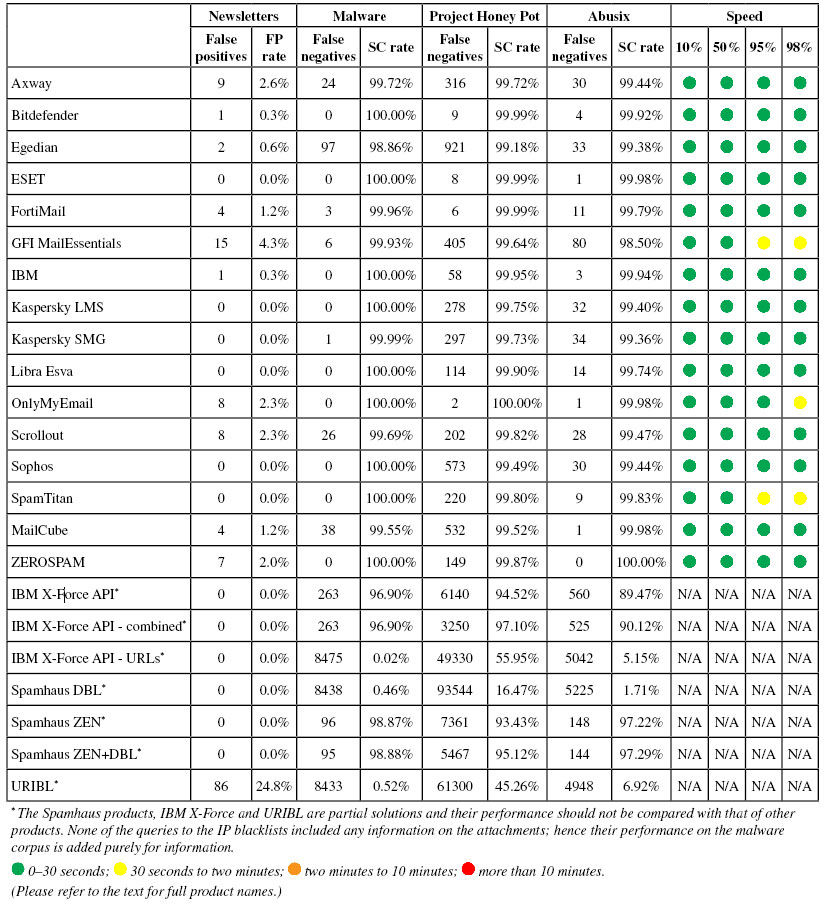

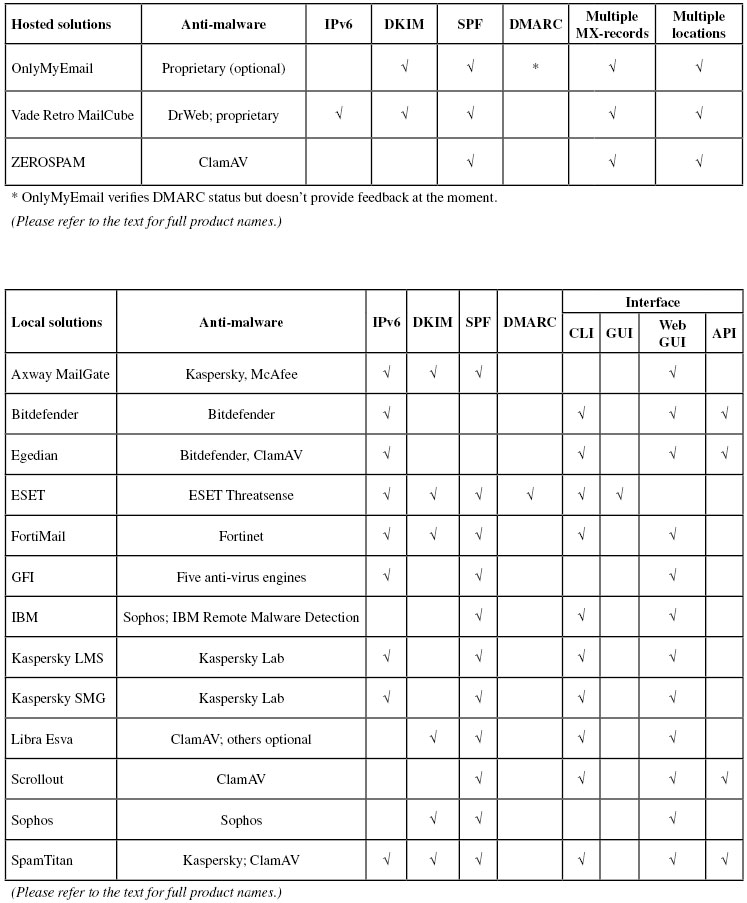

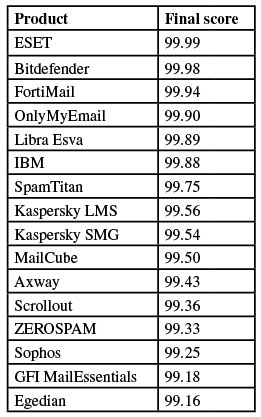

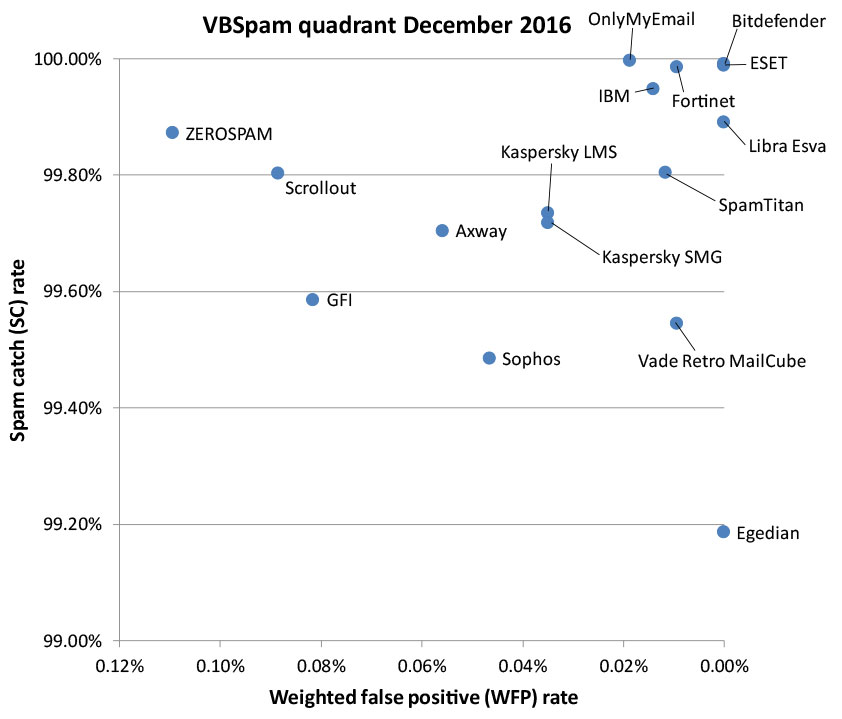

We make these statements based on the performance of 16 full email security (or anti-spam) solutions, from both large and small vendors (and one open-source provider), in the 46th comparative VBSpam test. All of the products in the test reached the benchmark required for VBSpam certification, and six of them performed well enough to earn the VBSpam+ accolade. We also tested five DNS-based blocklists, as well as two combined lists.

To make this report more readable, we have moved the technical information about the test, as well as the methodology, to an appendix. Regular readers of these reports will also have noticed that they are now published quarterly.

The conclusion drawn from this test remains the same as that drawn from a dozen previous tests: most spam is blocked. In fact, of the more than 117,000 spam emails used in this test, 97.7% were blocked by all full email security solutions, with most of the remaining spam emails missed by just one or two products. Only 18 emails (less than 0.02%) were missed by more than half of the full solutions.

Unsurprisingly, the most difficult to block spam emails are the ones that verge on the legal: legitimate companies using illegitimate means and sloppy sender practices to get their message seen. However, the three 'winners' – from the spammers' point of view – in this test were actual scams: two emails that urged the recipient to contact the sender, and a third that contained what appeared to be a phishing link, but was inactive by the time we accessed it.

Much as scams are bad, and can be costly for the victim, far worse are emails that have malware attached. Among the spam emails in this test, we found 8,477 that contained malicious attachments. Following requests from both participating vendors and readers, we decided to report the performance of products on this corpus as a separate metric.

Malware was seen in spam throughout the duration of the test, but we identified a few dozen individual campaigns. Most of the attachments were either zip files with the payload hidden inside or Word documents where the payload would be downloaded through the execution of macros; one campaign used RAR archives as attachments.

It is beyond the scope of this report to analyse the attachments, but it is rare for them to contain the final payload; rather, attachments are downloaders that have the sole purpose of downloading the payload onto the victim's machine. The payload that is eventually downloaded may depend on a number of parameters, such as the victim's location, but it is fair to say that many of the people who opened the attachments we saw would have become infected with ransomware, most likely Locky.

The story of ransomware and how much damage it does has been told often enough, but we have more good news to report: in this test, all but one of the participating full solutions blocked at least 199 out of every 200 emails that contained a malicious attachment, with nine out of 16 products blocking all of them.

Moreover, none of these emails were missed by more than two products, and no campaign stood out as being significantly difficult to block, thus showing that cybercriminals haven't found a secret way past the spam filter.

Still, while this is certainly good news, there is an important caveat: we looked at the ability of products to block these emails as spam. What we showed was that, on average, more than 99.8% of these emails would not end up in users' inboxes.

But email that doesn't make it to the inbox is often still accessible to users. Given the cost of false positives, this is a feature of spam filters rather than a bug, but it does mean that there is still the possibility of a user being infected, even if the spam filter blocked the email. This is why many spam filters tread extra carefully when it comes to malicious attachments: they remove the attachments from the emails, or block access to the emails altogether. In this test, we did not assess products' ability to detect the malware.

It is possible that many malicious spam emails were only recognized as spam. After all, the two IP-blacklists we tested stopped the overwhelming majority of them, despite not having access to the attachments. In future tests, we aim to look at products' ability to detect malicious attachments as such.

In this test, OnlyMyEmail stood out for missing just three spam emails in the spam corpus, while ESET, Bitdefender and Fortinet all blocked at least 99.98% of spam as well. These four products did not block any legitimate emails either, earning them VBSpam+ awards, along with Libra Esva and Vade Retro MailCube. 'Clean sheets' – where the product didn't block any legitimate emails or any emails from the newsletter feed – were achieved by ESET and Libra Esva.

New in this test is a domain-blacklist that is part of IBM's X-Force suite. We found the product to block well over half of the spam emails in our test set, purely by looking at the domains visible inside them. We also included in the test a combination of both IBM X-Force lists.

SC rate: 99.71%

FP rate: 0.04%

Final score: 99.43

Project Honey Pot SC rate: 99.72%

Abusix SC rate: 99.44%

Newsletters FP rate: 2.6%

Malware SC rate: 99.72%

| 10% | 50% | 95% | 98% |

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.98

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 99.92%

Newsletters FP rate: 0.3%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.19%

FP rate: 0.00%

Final score: 99.16

Project Honey Pot SC rate: 99.18%

Abusix SC rate: 99.38%

Newsletters FP rate: 0.6%

Malware SC rate: 98.86%

| 10% | 50% | 95% | 98% |

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.99

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.94

Project Honey Pot SC rate: 99.99%

Abusix SC rate: 99.79%

Newsletters FP rate: 1.2%

Malware SC rate: 99.96%

| 10% | 50% | 95% | 98% |

SC rate: 99.59%

FP rate: 0.05%

Final score: 99.18

Project Honey Pot SC rate: 99.64%

Abusix SC rate: 98.50%

Newsletters FP rate: 4.3%

Malware SC rate: 99.93%

| 10% | 50% | 95% | 98% |

SC rate: 99.95%

FP rate: 0.01%

Final score: 99.88

Project Honey Pot SC rate: 99.95%

Abusix SC rate: 99.94%

Newsletters FP rate: 0.3%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.74%

FP rate: 0.04%

Final score: 99.56

Project Honey Pot SC rate: 99.75%

Abusix SC rate: 99.40%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.72%

FP rate: 0.04%

Final score: 99.54

Project Honey Pot SC rate: 99.73%

Abusix SC rate: 99.36%

Newsletters FP rate: 0.0%

Malware SC rate: 99.99%

| 10% | 50% | 95% | 98% |

SC rate: 99.89%

FP rate: 0.00%

Final score: 99.89

Project Honey Pot SC rate: 99.90%

Abusix SC rate: 99.74%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.997%

FP rate: 0.00%

Final score: 99.90

Project Honey Pot SC rate: 100.00%

Abusix SC rate: 99.98%

Newsletters FP rate: 2.3%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.80%

FP rate: 0.07%

Final score: 99.36

Project Honey Pot SC rate: 99.82%

Abusix SC rate: 99.47%

Newsletters FP rate: 2.3%

Malware SC rate: 99.69%

| 10% | 50% | 95% | 98% |

SC rate: 99.49%

FP rate: 0.05%

Final score: 99.25

Project Honey Pot SC rate: 99.49%

Abusix SC rate: 99.44%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.80%

FP rate: 0.01%

Final score: 99.75

Project Honey Pot SC rate: 99.80%

Abusix SC rate: 99.83%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 99.55%

FP rate: 0.00%

Final score: 99.50

Project Honey Pot SC rate: 99.52%

Abusix SC rate: 99.98%

Newsletters FP rate: 1.2%

Malware SC rate: 99.55%

| 10% | 50% | 95% | 98% |

SC rate: 99.87%

FP rate: 0.09%

Final score: 99.33

Project Honey Pot SC rate: 99.87%

Abusix SC rate: 100.00%

Newsletters FP rate: 2.0%

Malware SC rate: 100.00%

| 10% | 50% | 95% | 98% |

SC rate: 94.29%

FP rate: 0.00%

Final score: 94.29

Project Honey Pot SC rate: 94.52%

Abusix SC rate: 89.47%

Newsletters FP rate: 0.0%

Malware SC rate: 96.90%

SC rate: 96.78%

FP rate: 0.01%

Final score: 96.72

Project Honey Pot SC rate: 97.10%

Abusix SC rate: 90.12%

Newsletters FP rate: 0.0%

Malware SC rate: 96.90%

SC rate: 53.65%

FP rate: 0.01%

Final score: 53.59

Project Honey Pot SC rate: 55.95%

Abusix SC rate: 5.15%

Newsletters FP rate: 0.0%

Malware SC rate: 0.02%

SC rate: 15.80%

FP rate: 0.00%

Final score: 15.80

Project Honey Pot SC rate: 16.47%

Abusix SC rate: 1.71%

Newsletters FP rate: 0.0%

Malware SC rate: 0.46%

SC rate: 93.60%

FP rate: 0.00%

Final score: 93.60

Project Honey Pot SC rate: 93.43%

Abusix SC rate: 97.22%

Newsletters FP rate: 0.0%

Malware SC rate: 98.87%

SC rate: 95.22%

FP rate: 0.00%

Final score: 95.22

Project Honey Pot SC rate: 95.12%

Abusix SC rate: 97.29%

Newsletters FP rate: 0.0%

Malware SC rate: 98.88%

SC rate: 43.52%

FP rate: 0.50%

Final score: 40.21

Project Honey Pot SC rate: 45.26%

Abusix SC rate: 6.92%

Newsletters FP rate: 24.8%

Malware SC rate: 0.52%

After a decline at the beginning of the decade, malicious spam has once again become a very big problem and one that deserves special attention in a report like this one.

The next test report – to be published in March 2017 – will continue to look at this aspect of spam. Those interested in submitting a product should contact [email protected].

The full VBSpam test methodology can be found at https://www.virusbulletin.com/testing/vbspam/vbspam-methodology/.

The test ran for 19 days, from 12am on 19 November to 12am on 7 December 2016. The test period was extended by three days, following unexpected maintenance performed by our Internet provider, which caused some downtime on 24 and 25 November.

The test corpus consisted of 126,167 emails. 117,303 of these were spam, 111,987 of which were provided by Project Honey Pot, with the remaining 5,316 spam emails provided by spamfeed.me, a product from Abusix. There were 8,517 legitimate emails ('ham') and 347 newsletters.

Moreover, 8,477 emails from the spam corpus were found to contain malicious attachments; though we report separate performance metrics on this corpus, it should be noted that these are also part of the full spam corpus.

Emails were sent to the products in real time and in parallel. Though products received the email from a fixed IP address, all products had been set up to read the original sender's IP address as well as the EHLO/HELO domain sent during the SMTP transaction, either from the email headers or through an optional XCLIENT SMTP command. Consequently, products were able to filter email in an environment that very closely resembled one in which they would be deployed in the real world.

Those products running in our lab were all run as virtual machines on a VMware ESXi cluster. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers' requirements and note that the amount of email we receive is representative of that received by a small organization.

Although we stress that different customers have different needs and priorities, and thus different preferences when it comes to the ideal ratio of false positive to false negatives, we created a one-dimensional 'final score' to compare products. This is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 * min(#newsletter false positives , 0.2 * #newsletters)) / (#ham + 0.2 * #newsletters)

Final score = SC - (5 x WFP)

In addition, for each product, we measure how long it takes to deliver emails from the ham corpus (excluding false positives) and, after ordering these emails by this time, we colour-code the emails at the 10th, 50th, 95th and 98th percentiles:

| (green) = up to 30 seconds | |

| (yellow) = 30 seconds to two minutes | |

| (orange) = two to ten minutes | |

| (red) = more than ten minutes |

Products earn VBSpam certification if the value of the final score is at least 98 and the 'delivery speed colours' at 10 and 50 per cent are green or yellow and that at 95 per cent is green, yellow or orange.

Meanwhile, products that combine a spam catch rate of 99.5% or higher with a lack of false positives, no more than 2.5% false positives among the newsletters and 'delivery speed colours' of green at 10 and 50 per cent and green or yellow at 95 and 98 per cent earn a VBSpam+ award.