2011-08-01

Abstract

This month's VB100 tests on Windows Vista proved to be something of a marathon for the VB test team, with several of the 48 products on test misbehaving and causing crashes, blue screens and system slowdowns. John Hawes has all the details and reveals which products achieved VB100 certification.

Copyright © 2011 Virus Bulletin

This time last year, when we published our previous test on Windows Vista (see VB, August 2010, p.21), I speculated that it might be the platform's final appearance in these pages. Vista has been plagued by criticisms and complaints since its release in 2007, and was quickly superseded by a far superior replacement in Windows 7, while its predecessor, Windows XP, still refuses to fade away.

Usage of Vista has continued to decline very gradually though, with estimates this time last year putting it on around 20% of desktops and the latest guesses ranging from 10% to 15%. This makes it still a pretty significant player in the market, and until those lingering users replace their OS with something better (be it newer or older), we feel obliged to continue checking how well served they are by the current crop of anti-malware solutions. Gluttons for punishment that we are, we opted to try a 64-bit version of the platform, which seemed almost guaranteed to bring out any lingering shakiness in products, many of which have proven themselves in recent tests to be highly susceptible to collapsing under any sort of pressure.

Preparing the test systems in something of a hurry after several recent tests overran, we found the set-up process to be rather more painful than usual, mainly thanks to the need to apply two service packs separately from the media to hand. With this done, and the resulting clutter mopped up, we made the usual minor tweaks to the systems, installing a handful of useful tools, setting up the networking and desktop to our liking and so on, before taking snapshots of the systems and moving on to preparing the sample sets.

The clean set saw a fair bit of attention this month. As usual, older and less relevant items were weeded out, and a swathe of new files was added, culled from magazine cover CDs, the most popular items on major download sites and the download areas of some leading software brands. After this cleanup, the final set weighed in at just over half a million files, totalling 140GB.

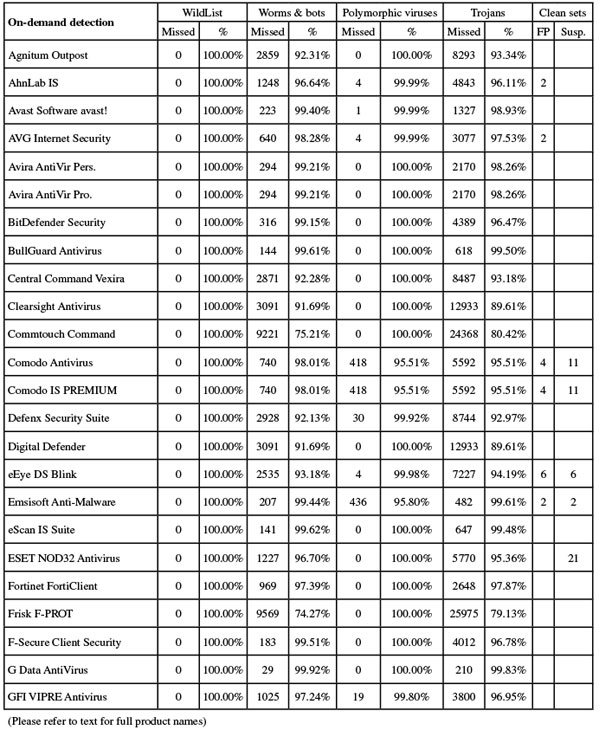

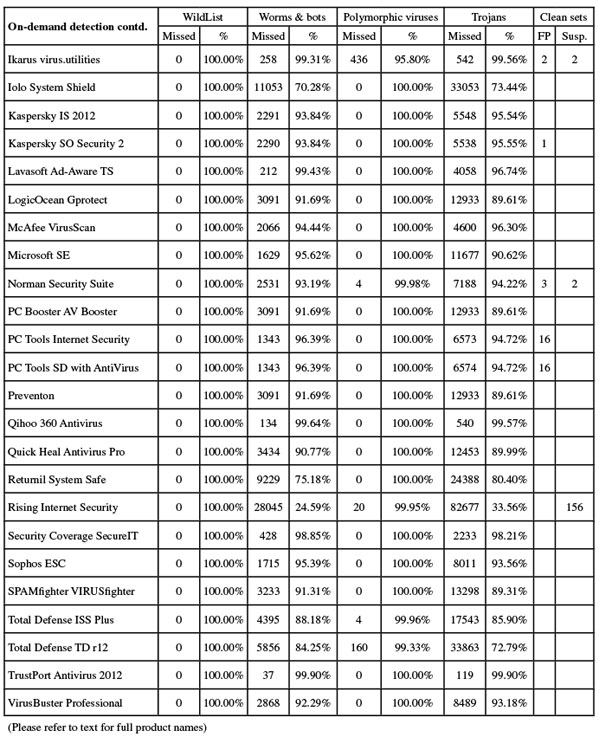

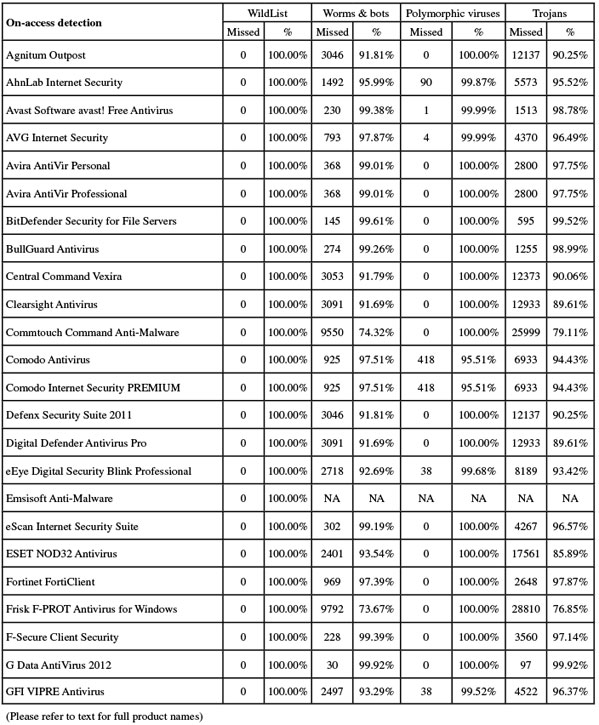

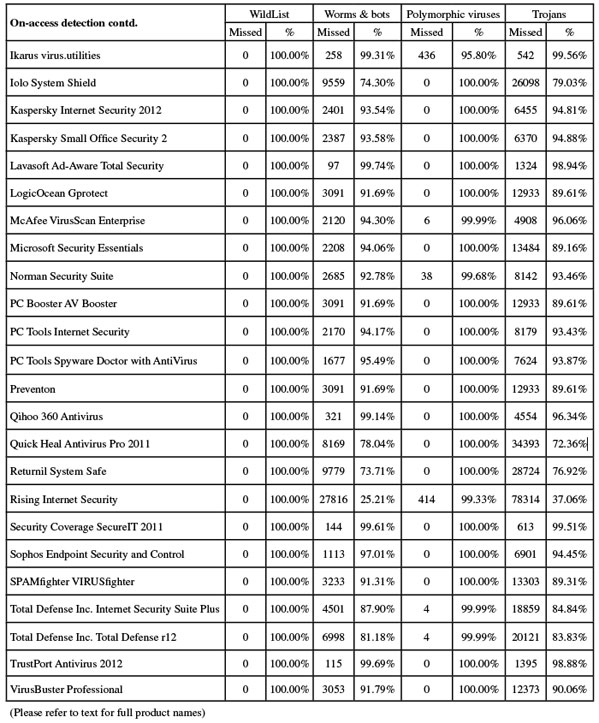

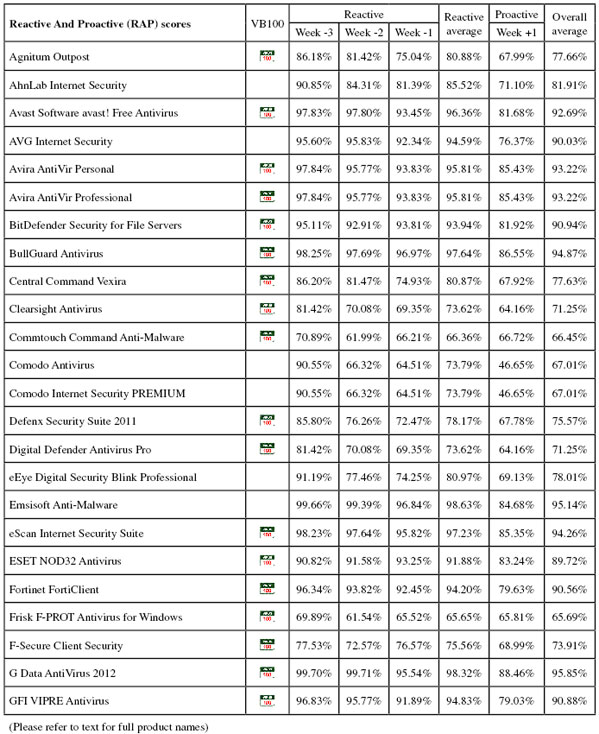

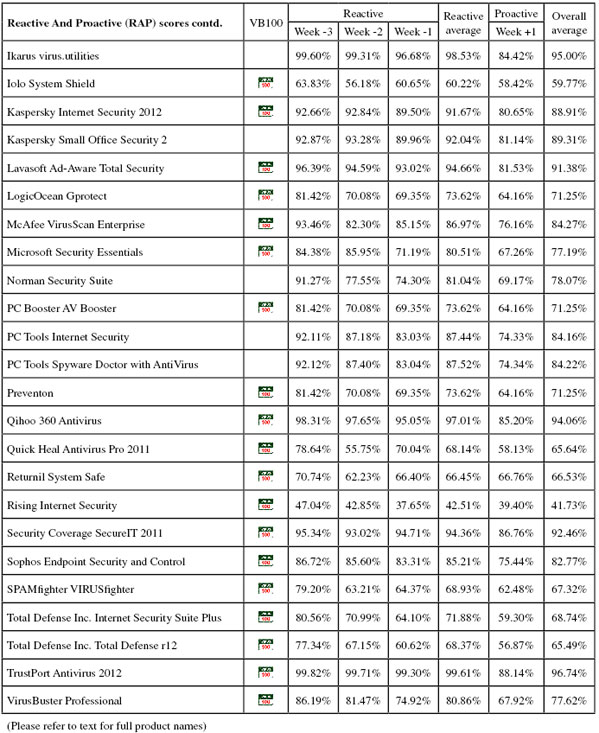

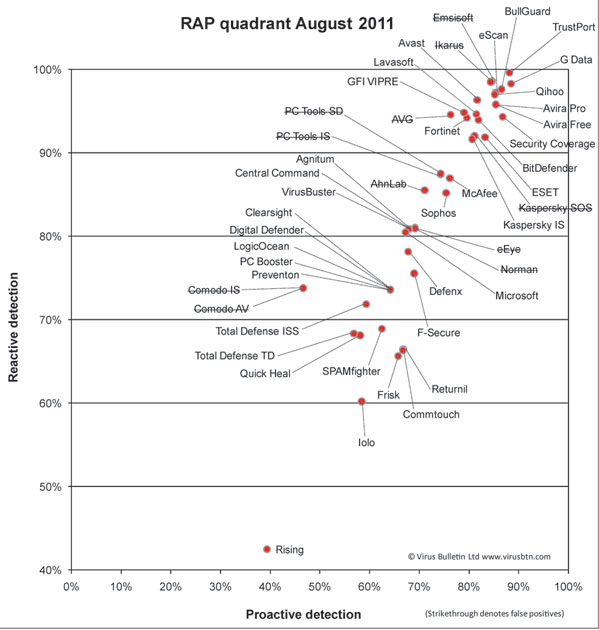

Building the sets of malicious samples using all new files seen during the appropriate periods – June for the RAP set and May for the sets of trojans, worms and bots – led to some rather large collections in each category, which needed verification and classification to bring them down to a manageable size. Initial tests were run with the unfiltered sets, but these were trimmed down in time for the products which we expected to be troublesome, with further filtering continuing throughout the test period. Final numbers were around 35,000 samples in the worms and bots set, 120,000 trojans, and an average of 40,000 for each of the weekly RAP sets.

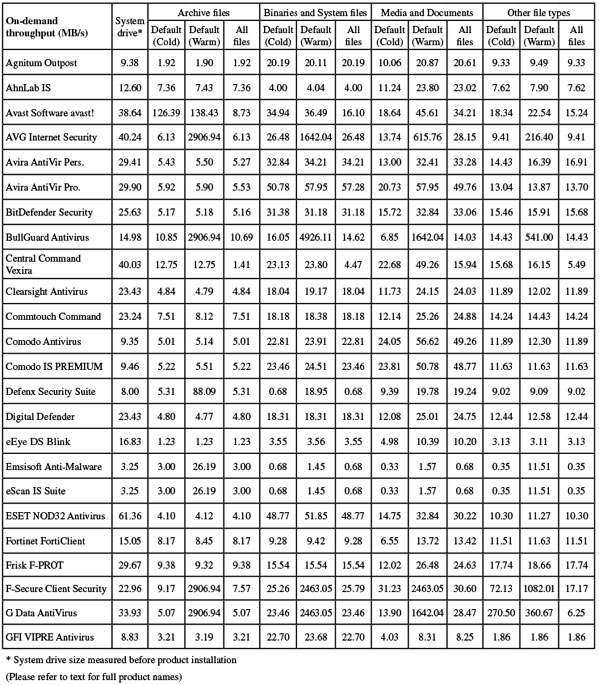

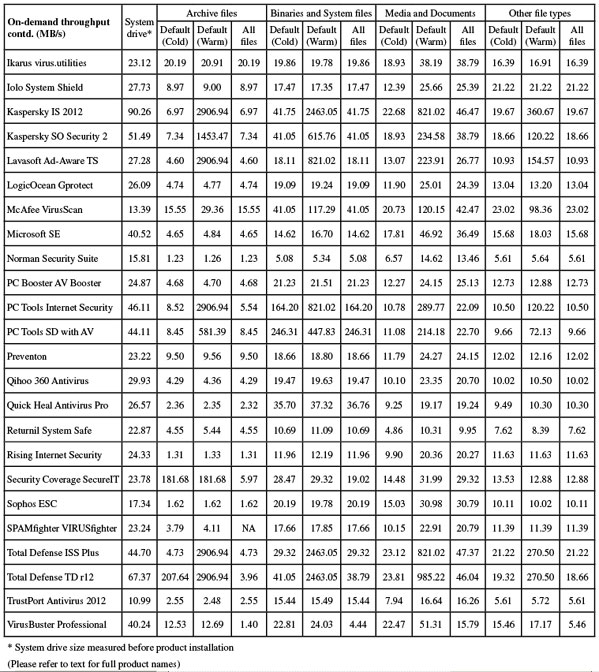

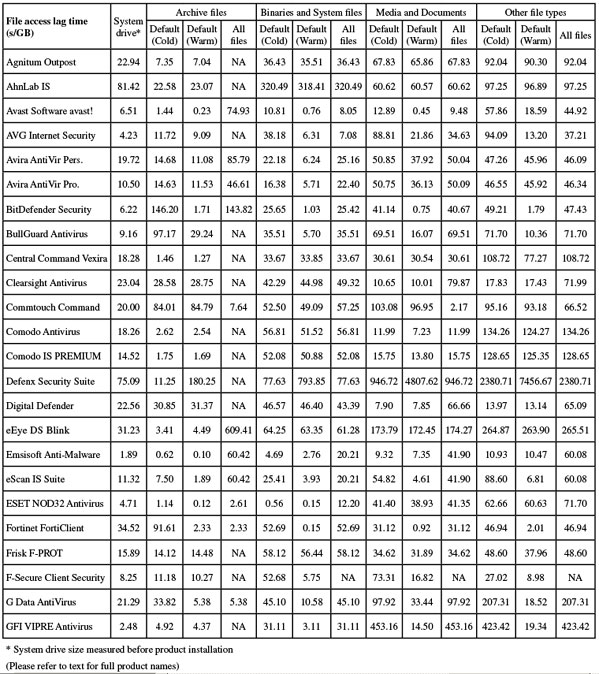

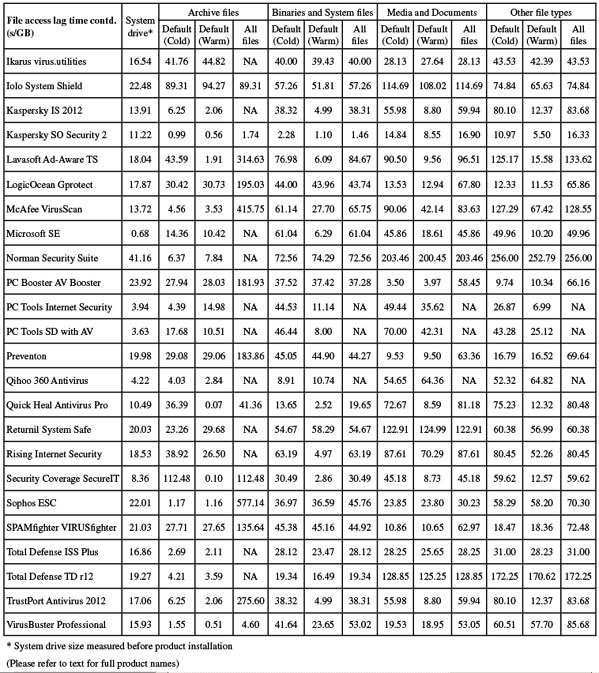

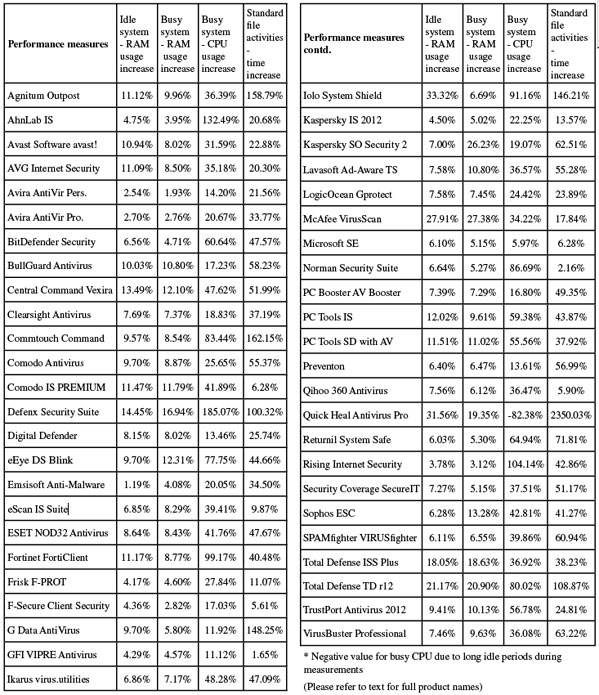

The clean sample sets used for the speed measures remained unchanged. The speed test scripts were adjusted slightly to include more runs of the collection of standard activities – this test was run ten times per product this month, with the average time to complete the jobs compared with a baseline figure taken from multiple runs on clean systems.
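The review does not spell out how the comparison with the baseline is expressed. As a sketch only, assuming impact is reported as the percentage increase of the mean product run time over the mean clean-system baseline, the calculation might look like this (the function name and the timings are hypothetical):

```python
from statistics import mean


def impact_percent(product_runs, baseline_runs):
    """Percentage slowdown of the mean product time relative to the mean baseline.

    Assumed formula: (mean(product) - mean(baseline)) / mean(baseline) * 100.
    """
    if not product_runs or not baseline_runs:
        raise ValueError("need at least one timing for both product and baseline")
    base = mean(baseline_runs)
    return (mean(product_runs) - base) / base * 100.0


# Hypothetical timings, in seconds, for ten runs of the activity suite
baseline = [100.0] * 10
product = [110.0, 112.0, 108.0, 111.0, 109.0, 110.0, 110.0, 111.0, 109.0, 110.0]
print(f"{impact_percent(product, baseline):.1f}%")  # prints 10.0%
```

Averaging over ten runs before comparing smooths out one-off spikes from background activity, which a single run would report as product overhead.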

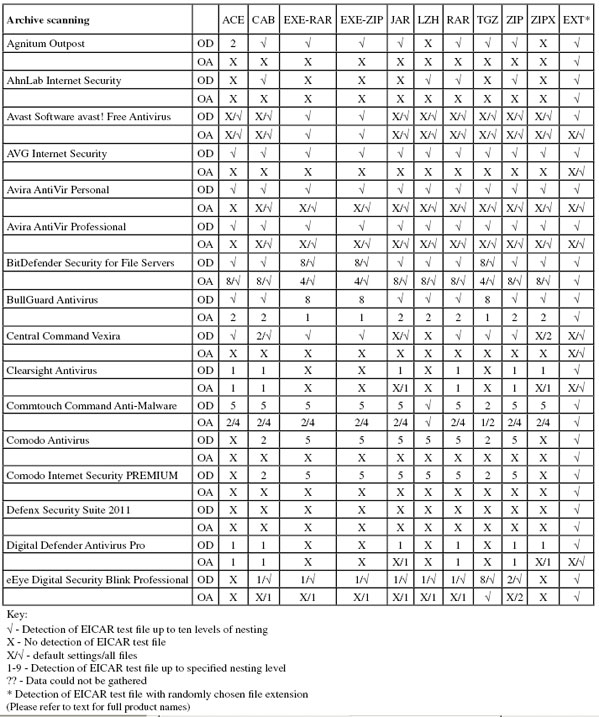

The WildList set included nothing too remarkable, with several more variants of W32/Virut falling off the list, leaving very few complex polymorphic items. As testing drew to a close, the WildList Organization made public its new extended list, which covers a wider range of malware types; we plan to include this as part of our requirements for future tests (more on which later).

With everything set up and ready to go, it was time to start working through this month’s list of products, all submitted by the deadline of 22 June. The final list totalled 48 products – a number which a couple of years ago would have been a record, but which compared to some recent tests actually seems rather small. To get through the work in reasonable time, we stuck with the plan introduced last time of capping speed measures at two hours to prevent slowpokes from taking up too much of our precious time. We also decided to keep a closer eye on just how long each product took to complete the full suite of tests, and to report it explicitly along with details of any crashes, hangs or other problems which caused us headaches. Of course, as some of the tests involve unrealistic scenarios – such as intensive bombardment with infected samples – slow completion times and bugs are not in themselves considered cause to deny a product certification, but they may be of some interest to our readers.
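A cap like the two-hour limit on speed measures can be enforced by the harness that launches each job. The Python sketch below assumes each scan is started as a separate command-line process; the lab's actual scripts are not described, so `timed_run` and the stand-in command are hypothetical. `subprocess.run` kills the child once `timeout` expires and raises `TimeoutExpired`, so a capped job is simply recorded as unfinished.

```python
import subprocess
import sys
import time

TWO_HOURS = 2 * 60 * 60  # cap on each speed measure, in seconds


def timed_run(cmd, cap_seconds=TWO_HOURS):
    """Run cmd and return (elapsed_seconds, finished).

    If the cap is reached, subprocess.run kills the child process and raises
    TimeoutExpired; the job is then reported as unfinished.
    """
    start = time.monotonic()
    try:
        subprocess.run(cmd, timeout=cap_seconds, check=False)
        return time.monotonic() - start, True
    except subprocess.TimeoutExpired:
        return time.monotonic() - start, False


if __name__ == "__main__":
    # Stand-in for a real scan: a Python process that sleeps for 3 seconds.
    elapsed, finished = timed_run([sys.executable, "-c", "import time; time.sleep(3)"], cap_seconds=1)
    print(f"finished={finished}, elapsed={elapsed:.1f}s")
```

Only the directly launched process is killed; a scanner that hands its work to a background service would need a different way of stopping the job.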

Version 3415.520.1248, Anti-Malware database 22/06/2011

First up on this month’s product roster, Agnitum has a solid record in our tests but has been causing some unexpected slowdowns of late. The 101MB install package ran through its process fairly slowly thanks to a large number of stages, including an option to join a community scheme disguised as a standard EULA acceptance. When the set-up was eventually complete, and following the required reboot, speed tests ran through without issues, but took quite some time. There were heavy lag times accessing files, slow scanning speeds, and a hefty impact on our activities suite. CPU use was also high, although RAM use was not excessive.

Getting through the clean set took an outrageously long time – over 58 hours. We later noted that the fastest time for a product to complete this task this month was less than an hour. Given that these are clean files only, it seems unlikely that any real-world user would be prepared to countenance such sluggish scanning speeds. By comparison, the malware sets were processed in quite reasonable time, adding only another day to the total testing time. Detection rates were pretty solid as usual, with respectable scores in the main sets and decent levels in the RAP sets, declining steadily but not catastrophically through the four weeks.

The core requirements in the WildList and clean sets were met without problems, and Agnitum earns another VB100 award. This gives the vendor five passes and one no-entry in the last six tests; eight passes and four no-entries in the last two years. This month’s test showed no crashes or other problems, but the slowdowns meant that testing – which we had hoped to complete within 24 hours – took more than five full days to get through.

Product version 8.0.4.7 (Build 940), Engine version 2011.06.21.90

AhnLab’s current product arrived as a fairly large 180MB install package, although no further updates were required, and the set-up process was speedy and simple, completing in a handful of clicks and a minute or so of waiting, with no need to reboot. On completion, however, Windows popped up a dialog suggesting that the product hadn’t installed correctly, although all seemed to be in order.

The interface is clean and neat, and a good level of configuration is available for the numerous components, without too much difficulty navigating – although in some places controls are not grouped quite as one might expect. Scanning speeds were fairly slow, especially in the set of executable files, and on-access lag times were also fairly heavy; CPU use was very high when the system was busy, but RAM use was low and impact on our suite of tasks was not too intrusive either.

The detection tests were hampered by blue screens during the intensive on-access tests, with errors warning of page faults in non-paged areas. We also had problems with the on-demand tests, with logs reporting larger numbers of items found than were displayed in the log viewer utility. After several attempts we managed to get a complete set of data together, showing some pretty good detection rates, with high scores in the main sets and a good level in the RAP sets, declining steadily into the proactive week. The WildList was handled well, but in the clean sets a couple of items, including the popular Thunderbird mail client, were labelled as malware, denying AhnLab a VB100 award this month.

The vendor’s record has been somewhat patchy of late, with two passes, two fails and two tests not entered in the last six. Over the last two years AhnLab has had five passes, four fails and three missed tests. This month testing took five full days to complete, with the main problems being blue screens causing a total system crash twice during heavy bombardment, the on-access component falling over occasionally, and issues with the logging system.

Program version 6.0.01184, Virus definitions version 110622-1

Avast’s version 6 came hot on the heels of version 5, and is pretty similar in many respects, the main addition being a sandboxing system for suspect items. The installer is compact at 58MB including all updates, and runs through rapidly with minimal input required from the user; the main item of note is the offer to install the Google Chrome browser, a fairly typical add-on with free software but not something which pleases everyone. No reboot was needed to complete the set-up process.

The interface remains very easy on the eye and a pleasure to use, with a splendid depth of configuration provided for advanced users without making things seem too complicated for novices. The control system features detailed explanations throughout to allow less knowledgeable users to make informed decisions about how things should run, avoiding the jargon-heavy approach of some lazier developers.

Scanning speeds were blisteringly fast as always, powering through the sets in excellent time with a light touch when accessing files (perhaps helped somewhat by the default approach of not scanning all file types on-read). Resource use was pretty low, with very little impact on our set of activities.

Scores were excellent, with splendid coverage of all our sets; there was a small drop in the proactive portion of the RAP sets, but scores remained highly impressive even there. The clean set, which some products plodded through in days, was brushed aside in record time, and the infected sets handled rapidly and accurately too; no problems were encountered in the certification sets, earning Avast another VB100 award, the vendor’s 16th consecutive pass.

All tests completed in well under the 24 hours we hoped all products would manage, with no issues at all, making for an all-round excellent performance.

AVG version 10.0.1382, Virus DB 1513/3719

While fellow Czech company and arch rival Avast routinely submits its free edition for our tests, AVG tends to enter its full premium suite solutions, with this month’s entry being the corporate desktop product. The installer is a fair size at 183MB, with all updates included, and runs through in short order with a half-dozen clicks of ‘next’ and no need to reboot, despite the multiple layers of protection included. Part of the process is the offer of a browser security toolbar, use of a secure search facility, and a groovy Aero sidebar gadget.

The interface is clear and simply laid out, with a sober grey colour scheme suitable for business users. Under the hood is another excellent set of fine-tuning controls, again provided in splendid depth and made reasonably clear and simple to operate even for untrained users. It remained responsive and stable throughout testing.

Speed measures were decent to start with, and sped up hugely in the warm measures, both on demand and on access. Resource use was low, as was impact on our set of tasks. Getting through the larger test sets took a little time but wasn’t excessively slow, and the infected sets were handled excellently, with highly impressive scores in the main sets and the reactive parts of the RAP sets, dropping a little in the proactive week.

The WildList was handled well, but in the clean sets a couple of items were mislabelled as malware, including part of a photo manipulation suite from Canadian developer Corel, which seems to produce regular issues in our testing. Both alerts were only heuristic detections, but this was enough to deny AVG a VB100 award this month, spoiling a solid record of passes dating back to 2007.

This result brings the vendor to five passes and a single fail in the past year; ten passes, one fail and one test not entered in the last 12 tests. The product completed the full set of tests this month in around 24 hours, our target time, and there were no issues other than the two false positives in the clean sets.

Product version 10.0.0.648, Virus definition file 7.11.10.48

Avira’s free edition has become a regular in our tests in the last few years, and is generally a welcome sight. The installer is small at 51MB, but an additional 44MB update package is also provided. Getting set up is fairly simple, with another half-dozen dialogs offering the usual information and options, with good clarity, and a speedy install with no need to reboot. The only item of note is the rather large advertising screen pushing the paid-for edition, which comes at the end of the process.

The product interface is a little less slick than some, with a rather sparse, angular look to it, and the minimal language marking controls and options is occasionally less than clear. Nevertheless, once again an excellent level of controls is provided, with simple and expert modes to protect the less advanced user from the more frightening technical stuff.

Scanning speeds were decent, improving very slightly in the warm runs but not enough to make a huge impact, while on-access overheads were in the mid-range. Resource consumption was excellent, with very little RAM or CPU used and minimal effect on our set of activities.

The rest of the tests were powered through in splendid time with no issues, recording a clean run through the false positive sets and excellent detection rates in all the infected sets, dipping only very slightly towards the end of the RAP sets. The WildList caused no issues, easily earning Avira another VB100 award for its free edition.

This version has yet to hit a single snag, with three passes from three attempts in the last six tests, five passes from five attempts in the last two years. Tests completed comfortably within the 24-hour time frame, with no sign of any instability or other problems.

Product version 10.0.0.1012, Virus definition file 7.11.10.48

Weighing in a fraction heavier than the free edition at 58MB, and using the same 44MB update package, Avira’s premium version is pretty similar in a lot of ways, the simple and speedy install process following similar lines and finishing just as rapidly, with again no need to restart. The interface has a very similar look and feel, with again an excellent level of controls tucked away in the ‘Expert mode’ area.

Tests zipped through in good time – the on-demand speeds were noticeably quicker than those shown by the free version, but the on-access measures were hard to tell apart. Use of memory was just as low as the free edition, but CPU use was slightly increased and impact on our set of tasks also a little higher.

Detection rates were identical though, showing that the free version is in no way the lesser. Here again we saw superb coverage of our infected sets, including complete detection of the WildList set, and with no issues in the clean sets another VB100 award is comfortably earned by Avira.

This pro version has a rather longer history in our tests than the free one, and maintains an excellent record, with all of the last six tests passed; a single fail and 11 passes in the last two years. Avira’s popularity with the lab team is strengthened by another easy test month, all tests completing in under a day with no sign of any problems anywhere.

Product version 3.5.17.1, Antivirus signatures 8348918

Somewhat surprisingly, BitDefender submitted its file server edition for this month’s comparative, but since we had seen the same solution in the previous test it presented few problems. The installer was fairly large at 186MB, including updates, but the set-up process is fairly standard with no surprises and ran through quickly, with no reboot needed to complete.

The interface is based on the MMC system, but is more colourful and easy to operate than many similar systems, with plenty of control options (as one would expect from a server-level product). Scanning speeds were middle of the road, with no sign of any speed-up in the warm measures, but some form of caching was clearly present in the on-access mode, which is where it really counts. Overheads were not too heavy to start with, and barely noticeable once files had passed initial checks.

In everyday use, consumption of memory was fairly low, but CPU use was noticeably high, and our suite of standard tasks took a little longer to complete than expected. The on-access detection measures ran through in decent time, with solid stability even under heavy bombardment, but in the on-demand jobs things got a little trickier. We have noticed several products of late storing scan results in memory until the end of the job, only writing to disk once complete (or, in some cases, once the user has acknowledged completion). This is presumably an optimization measure, but seems rather unnecessary – assuming a scan detects little or nothing, as should be the norm, the time spent writing out to file would be minute, whereas when multiple detections occur (the only situation in which such optimization would help), the escalating use of RAM can cause all sorts of problems.

BitDefender’s developers have clearly not thought this through, and have not implemented any check on how much memory is being eaten up by the product – the scan of our main sets slowly stumbled to a crawl as system resources were drained. Day after day passed, with the team leaving the lab each evening vainly hoping that the scan would be finished in the morning. By the fifth day (the fastest time taken to complete this job this month was less than two hours), almost 2GB of RAM was being used by the scanning process, and the machine was barely responsive. At this point, a power outage hit the test lab thanks to a UPS failure, and a whole week’s worth of work was lost forever, because the product had written none of its results to the hard disk.
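The alternative to holding results in memory can be illustrated with a minimal Python sketch. It is not how any tested product works; `scan_and_log` and the `is_detected` callback are hypothetical stand-ins for a scanner's logging path. Each detection is appended to the log as soon as it is found, so memory use does not grow with the number of detections and anything already logged survives a crash or power cut.

```python
import os
import tempfile


def scan_and_log(items, is_detected, log_path):
    """Append each detection to log_path as soon as it is found.

    Memory use stays flat however many detections occur, and entries already
    written are on disk if the machine loses power mid-scan.
    """
    count = 0
    with open(log_path, "a", encoding="utf-8") as log:
        for item in items:
            if is_detected(item):
                log.write(f"DETECTED\t{item}\n")
                log.flush()             # hand Python's buffer to the OS
                os.fsync(log.fileno())  # ask the OS to commit it to disk
                count += 1
    return count


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "scan.log")
    samples = [f"sample{i}.exe" for i in range(5)]
    found = scan_and_log(samples, lambda name: name in {"sample1.exe", "sample3.exe"}, path)
    print(found, "detections logged to", path)
```

Calling `os.fsync` after every detection is the most conservative choice and does cost time when detections are plentiful; syncing every few hundred entries would be a reasonable middle ground between this and keeping everything in RAM until the end.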

Re-running the tests in smaller chunks proved a much faster approach, as the lack of excessive memory use kept things ticking over nicely, but of course it required much more hands-on work from the lab team. Eventually, we gathered a full set of results from over 20 scans, and they showed the usual excellent scores. All sets were well covered, with some stellar figures in the RAP sets, only declining slightly in the proactive week. The core requirements were comfortably met and a VB100 award is duly earned after some considerable hard work from us.

BitDefender’s record is solid, with all of the last six tests passed, and ten passes, one fail and one no-entry in the last two years. There were no actual crashes in this month’s test, and the slowdown we saw due to heavy RAM use would only occur in extreme circumstances. Nevertheless, testing took up more than eight full days of lab time.

BullGuard version 10,0,0,26, BpAntivirus version 10,0,0,48

BullGuard’s solution is based around the same engine as BitDefender, and the installer is again fairly large at 155MB. The set-up process involves only a few clicks, but takes a little time to run through, needing no reboot to finish off. The interface is a little quirky, but usable enough after some exploration, and operated fairly stably throughout testing, although we did notice some lengthy pauses when opening saved scan logs.

Speed measures were pretty fast, with caching implemented in both on-demand and on-access modes, and RAM and CPU use were around average, while impact on our set of tasks was again a little higher than most. Running through the detection tests proved fairly painless, with no problems getting through large scans, and the on-access measures completed in good time too, although we did note that some sort of lockdown was imposed when large numbers of detections were bombarding the protection system, resulting in many other activities being prevented.

Scores were again excellent throughout the sets, with even the proactive week of the RAP sets very well covered. The WildList set and clean sets threw up no surprises, and BullGuard comfortably earns another VB100 award. It now has four passes and two no-entries in the last six tests; six passes and six no-entries in the last two years. There were no serious stability problems during testing, which completed in around the 24 hours allotted to each product.

Version 7.1.70, Virus scan engine 5.3.0, Virus database 14.0.91

Central Command’s product is now a regular participant in our tests. The current version was submitted as a 68MB installer with a 59MB update bundle; the installer ran through in quite a few steps, taking some time. As with others based on the VirusBuster engine of late, the option to join a community feedback scheme was sneakily concealed where the ‘accept’ option for the EULA would usually be found. The slowish process ends with a reboot.

The product interface is highly reminiscent of those seen in VirusBuster products for many years now, but in a garish red. The layout remains fiddly and awkward, lacking more than a little in intuitiveness, but provides a reasonable degree of control. Scanning speeds were fairly decent, but on-access overheads seemed a little above average in some areas, while use of resources and impact on our set of tasks were also higher than many this month, although not outrageously so.

Detection rates were decent in the main sets and reasonable in the RAP sets, tailing off slightly through the weeks. The core certification sets were handled well, and Central Command earns another VB100 award without too much strain. The vendor’s record shows an impeccable nine passes from nine entries since re-emerging in its current form; tests ran smoothly with no problems, and completed within 24 hours.

Version 1.1.68, Definitions version 14.0.90

As usual this month’s test sees a number of very similar products based on the popular VirusBuster engine, using an SDK developed by Preventon. First up is Clearsight, which already has a handful of successful VB100 entries under its belt. The installer is a fairly compact 63MB, including all the latest updates, and runs through swiftly and simply, although it does complain if Internet connectivity is not available at install time. We left the system connected long enough to apply a licence key (needed to access full configuration controls), but blocked any updating past the deadline date. No reboot is needed to complete the set-up.

The interface is simple and hard to get lost in, providing basic controls covering a reasonable range of fine-tuning with minimal fuss. Operation proved smooth and stable, with no issues even under heavy pressure, and testing completed without incident. Scanning speeds were not the fastest, but still pretty decent and very consistent over multiple runs, while on-access overheads and resource consumption measures were similarly middling, with a small but noticeable impact on our suite of tasks.

Detection rates were decent – no threat to the leaders of the pack but far from the tail too – with a steady but not too steep decline through the RAP sets. The WildList and clean sets were handled properly, earning Clearsight another VB100 pass, its third in a row; this gives it three passes, a single fail and one no-entry since its first appearance five tests ago. Testing ran through without incident, taking just a little longer than the expected full day to complete.

Product version 5.1.14, Engine version 5.3.5, DAT file ID 201106220548

We have grown quite used to the Commtouch name by now, although the product’s pre-acquisition company name, Authentium, still crops up from time to time in team discussions. This is also the case in the product itself, with the installer, a tiny 14MB with an additional 28MB update bundle, dropping a few files and folders still referencing the old brand as part of its speedy, low-interaction set-up process. With no reboot needed even after the updates were applied using a custom script, things were ready to go in only a minute or so.

Operation is fairly straightforward, with a basic set of options available once the button to enable the ‘advanced’ mode has been clicked. Scanning speeds were not super fast, with on-access overheads a little higher than many this month, and while RAM use was not exceptional, both CPU use and impact on our set of activities were pretty high.

Detection rates were unspectacular, with a reasonable showing in the main sets and respectable, surprisingly consistent scores in the RAP sets. The WildList brought no surprises, and with only a handful of suspicious files in the clean sets – alerting on suspicious packing practices and adware – Commtouch has no problems earning a VB100 award this month.

Our records for the product show a somewhat patchy history lately, with three passes and two fails from five entries in the last six tests; four passes, four fails and four tests not entered in the last two years. A single minor issue was observed during testing, when a large log file failed to export fully, but the problem did not recur and testing completed just within the 24 hours allotted to the product.

Product version 5.4.191918.1356, Virus signature database version 9154

Comodo is a relative newcomer to our tests, having taken part fairly regularly during the last year. The vendor usually submits both the plain Antivirus edition and the full suite. The two products are pretty similar, even down to using the same 60MB installer; the difference only takes effect during install, when the user can select the option to include the suite’s extra components. Installation was run on the deadline day, starting with a wide selection of available languages, some of which are provided by the product’s ‘community’ of fans. Alongside the usual install steps is an option to use Comodo’s own secure DNS servers, and with a reboot to complete, this first step took only a minute or two to get through. On restart, the product goes online to fetch updates, which in this case took 15 to 20 minutes to fetch 122MB of data.

The product is pretty good looking, with clean and elegant lines and a crisp red-and-deep-grey colour scheme. The layout follows a common pattern, making it simple to navigate, and provides a lot of extras alongside the usual basics of anti-virus, including the ‘Defense+’ intrusion prevention system and sandboxing of unknown executables. A good level of control is provided, with plenty of clear and useful explanation.

Running the first tests was thus simple and rapid, with some fairly fast scanning speeds and on-access overheads medium in some areas and light in others. Resource use was also medium, although impact on our set of tasks was a little high.

Running the larger tests took quite some time though, despite following advice from the submitters to disable cloud look-ups. The on-access run over our main sets took nine full days, and the scan of our clean sets, RAP and main infected sets took considerably longer. Throughout this period the machine remained stable and responsive, with no sign of any other problems, and the slowness is likely only to affect scans of large amounts of infected items – an unlikely scenario in the real world.

With the results finally in, we saw some pretty decent detection rates in the main sets, with the RAP sets starting off pretty good too, but dropping very sharply through the weeks to a rather poor level in the proactive week – implying that detection of more recent items leaves something to be desired. The WildList was handled well, and in the clean sets a number of items were warned about, including any file with more than one extension being alerted on as ‘Heur.Dual.Extension’ – probably a wise move given the ongoing use of such tricks to disguise malware. Several other items were described as ‘Suspicious’, while a few full-blown false positives were also recorded, including the popular FileZilla downloading tool being flagged as a downloader trojan. This was enough to deny Comodo a VB100 award this month.

The records of the Antivirus product show no passes from two previous entries in the last year (the only test Comodo has managed to pass so far was the April 2011 XP test, which featured the vendor’s suite edition). There were no stability problems or crashes during testing, but the slow speed over infected items meant the product hogged one of our test systems for a truly epic 20 days.

Product version 5.4.191918.1356, Virus signature database version 9154

Comodo’s second product this month is the full ‘Premium’ suite, which, as far as we could tell, is available free of charge. Using the same installer as the previous product, the experience was unsurprisingly similar, although in this instance we opted to check both the suite and ‘Geek Buddy’ options – the latter being a support system allowing engineers to access the local system to fix problems. After the few minutes of installing and a required reboot, the update once again ran for around 20 minutes, downloading the 122MB of data for the main product (we chose not to update the Geek Buddy component, having observed this taking quite some time in previous tests).

With the product set up and an image taken for testing, there was little difference between this and the previous product; the most obvious change is that this version includes a firewall, but otherwise the interface and operation were identical – clear and easy to use with a good range of components and well laid out controls.

The results of the performance tests were decent – better than average in most areas, with average use of resources and, somewhat oddly, a much lower impact on our set of activities than the product’s counterpart. Again the main tests took forever to complete, showing no signs of instability or unresponsiveness but just dawdling enormously. Results showed a similar pattern, with good detection rates in the older areas but considerably lower over more recent items, and although the WildList was fully detected, the same crop of false alarms that tripped up the Antivirus version also denies this product a VB100 award.

The suite’s history is a little better, with one pass and now three fails in the last year, with two tests not entered. There were no crashes, but the slow scanning meant the product also took more than 20 days to get through our tests; between them, Comodo’s two solutions took up enough machine time to process 40 products operating at the expected pace – almost the whole of the rest of this month’s field.

Version 2011 (3390.519.1248)

Swiss firm Defenx is another relative newcomer which has started to become a regular sight on our test bench over the last few years, with a strong record of passes. Of late we have noted some increasing slowness in our tests, with both malware and speed tests taking a long time to complete; we hoped for a return to previous speeds this month.

The installer weighed in at just under 100MB, and after a few standard stages, including the trick of hiding the option to join a feedback scheme alongside the EULA acceptance (which currently seems standard for products based on the VirusBuster engine), it ran through its set-up tasks in a few minutes, with a reboot to finish off.

The interface is very similar to that of the Agnitum product, of which this is a spin-off of sorts, and thus devotes much attention in its design to the firewall components – but it still makes some space for the anti-malware module, providing a basic range of controls. Scanning speeds were not fast to start with: one scan, covering our set of executables, just hit the two-hour cut-off limit imposed on these measures, although repeat runs were much quicker. On-access overheads were very heavy, though, and bizarrely got slower in the warm measures. RAM use was a little high, but CPU use went through the roof, with a hefty impact on our set of tasks too.

The slowness worsened in the larger detection tests, with the on-access test taking over 20 hours to get through, and the large scan of the full selection of sets trundling along for over eight full days. Thankfully, there was no instability and logging was maintained well throughout.

Detection rates were good in the main sets, dwindling somewhat through the RAP sets, but the WildList was well handled and there were no issues in the clean sets, earning Defenx another VB100 award. The vendor has five passes in the last six tests, having skipped the annual Linux comparative, and eight passes since its first entry nine tests ago. No stability problems were noted this month, but the slow scanning speed meant more than ten days’ test machine time was used to complete all our work.

Version 2.1.68, Definitions version 14.0.90

The second of this month’s set of products based on the Preventon SDK and VirusBuster engine, Digital Defender has become a fairly regular sight on our test bench. The 63MB installer runs through in good time, requiring web access to run and apply a licence key, and needing no reboot to complete. The GUI is sparse and simple, with basic controls only, but is perfectly usable for the undemanding user. As with the rest of this range, logging is somewhat troublesome, defaulting to ‘Extended Logging’, which means that every item examined is noted down in the logs. To combat the resulting bloat, logs are discarded after reaching a few MB in size or a few thousand files scanned, and while the verbosity can be switched off from the GUI, a registry tweak is required to prevent logs from being dumped.

With this done, and a reboot performed for the setting change to take effect, tests moved along nicely with no problems. One minor issue we observed was that changes to the settings for on-demand scans seem only to affect scans run from within the product GUI, while right-click scans continue to use the default set of options.

Speeds were not bad, and overheads not heavy, with low use of resources and little effect on the running time of our set of tasks. Detection rates were decent if not stellar, and with the WildList and clean sets handled properly, another VB100 award goes to Digital Defender. The vendor’s history shows a period of recovery after a rocky spell, with three passes, two fails and one no-entry in the last six tests, four passes and four fails since its first entry nine tests ago. With no crashes or stability problems, all tests completed in a little over 24 hours, just about on schedule.


Version 4.8.2, Rule version 1622, Antivirus version 1.1.1560

Another product using an OEM engine, eEye’s Blink includes Norman’s detection technology alongside the company’s own vulnerability expertise. The product installer is a sizeable 176MB, accompanied by an additional 116MB of offline updates, but it trips through rapidly, with half a dozen clicks and no need to reboot.

The interface features warm colours and a friendly layout, with a mysterious black-hatted figure icon representing the anti-malware component, which runs alongside the vulnerability management, firewall, intrusion prevention and other features. Controls are not as complete as in some solutions, but provide a reasonable degree of fine-tuning, and tests ran through reasonably quickly and without too much trouble.

Scanning speeds were slow, perhaps in part due to the sandboxing system, with fairly hefty overheads on access too. However, RAM use was not too heavy, while CPU use was a little above average and the impact on our set of tasks was noticeable but not overly intrusive. Detection rates were decent in the main sets and a little less impressive in the later weeks of the RAP sets, but the WildList caused no problems. The clean sets threw up a number of alerts, several of which were for suspicious items, but a few described clean items as malware. These included a component of the ICQ chat program and, slightly more controversially, a handful of files from a leading PC optimization suite. A few other programs this month labelled these files as potentially unwanted, and some have described them as having ‘dubious value’, but labelling them W32/Agent was adjudged a step too far.

As a result, there is no VB100 award for eEye this month, the vendor’s history now showing three passes, two fails and one no-entry in the last six tests; three passes, five fails and four tests skipped in the last two years. With no stability problems, slow scanning times were the only issue this month, causing the full set of tests to take around two days to complete.

Version 5.1.0.15, Malware signatures 5,511,662

Emsisoft’s solution, formerly known as ‘A-Squared’, combines the Ikarus detection engine with some in-house technology. The installer is not too large, at 109MB with all updates included, and the set-up process is fairly speedy, with a few more clicks required than some, but no reboot. The initial install is followed by a set-up wizard with some further options, and things are quickly up and running.

The GUI is a little unusual in the way it switches between areas, leading to occasional confusion, and some of the options are perhaps not as clear as they could be, but it is generally usable, providing a basic set of options. Operation seemed stable in the on-demand tests, which showed some fairly slow scanning speeds, but the on-access component was decidedly flaky, running fine for a while but regularly bringing whatever test was running to a halt. While the cursor could be moved around the screen after one of these freezes, the system refused to respond otherwise, and only a hard reboot got things moving again. This occurred most often during the runs over the infected sets, but was also observed a few times when running the standard speed and performance measures, using only clean samples and fairly standard actions.

When we finally got all the tests completed, after several runs through and several re-installs, we saw some surprisingly light overheads for the on-access measures, with low use of RAM and unexceptional use of CPU cycles and impact on our set of tasks. Detection rates, meanwhile, were excellent, with superb coverage in all sets, even the proactive part of the RAP sets handled admirably. The WildList was brushed aside effortlessly, but in the clean sets a couple of items – one of them a driving simulation game – were alerted on as malware, denying Emsisoft a VB100 award this month.

The vendor’s test record is a little rocky of late, with one pass and four fails in the last six tests, the annual Linux test having been skipped. Since its first entry nine tests ago, it has managed two passes, with five fails and two tests having been skipped. There were some clear problems with the on-access component in this test, freezing the system when under pressure on several occasions, and the retesting this necessitated meant that more than three full days were taken up.

Version 11.0.1139.1003

A long-term regular, eScan is rarely absent from our tests. This month’s product came as a large 172MB installer, although all updates were included. The set-up process was a little lengthy, though it needed no reboot to complete. When the system was later rebooted for other purposes, the UAC subsystem popped up several warnings about the mail scanning components.

The interface is bright and flashy, with a slick animated bar of icons along the bottom, but the layout is clear and logical, making navigation easy. Excellent configuration is available under the shiny covers, presented in a much more sober style.

Scanning speeds were slow, with several scans taking more than the maximum allotted two hours, including scans of the local C: partition. Meanwhile, the scan of the clean sets – which many products got through in the space of a morning – took over 58 hours to complete. On access things were a little better, with pleasantly light overheads, and use of resources and impact on our set of activities were notably on the low side.

Detection rates were splendid though, thanks to the underlying BitDefender engine, with all sets covered excellently. The WildList caused no problems, and the clean sets were handled well, earning eScan another VB100 award; the vendor now boasts five passes and a single fail in the last six tests; nine passes and three fails in the last two years.

Stability seemed fine for the most part, but some of the scans were extremely slow, meaning the total testing time was more than five days.

Version 4.2.71.2, Virus signature database 6229

Still maintaining the record for the longest run of passes, ESET is one of our most regular participants. The latest product version was provided as a fairly small 51MB package, including all required updates, and installed in a handful of standard steps, enlivened only by the usual step of forcing a decision on whether or not to detect ‘potentially unwanted’ items. The process doesn’t take long, and needs no reboot to finish.

The interface is attractive and elegant, glossy without losing a sense of solid quality. Configuration is provided in massive depth and is generally easy to navigate if seeming a little repetitive in places. Operation was pretty straightforward, with no problems with stability. Scanning speeds were pretty fast and very consistent, while on-access overheads were light, resource consumption average and impact on our activities not too heavy.

Detection rates were decent, with again impressive consistency across the weeks of the RAP sets. The clean sets did throw up a fair number of alerts – mostly for toolbars and adware bundled with freeware packages, but also several items from a suite of system cleaning and optimization tools were labelled as potentially unwanted (the same items having been described by another vendor as having ‘dubious usefulness’).

None of these could be described as a false alarm though, as the descriptions were pretty accurate, and with the WildList handled well ESET comfortably maintains its unbroken record of VB100 passes, having entered and passed every test since the summer of 2003. With no crashes or other problems of any sort, and good speeds, all tests were comfortably completed within 24 hours.

FortiClient version 4.1.3.143, Virus signatures version 10.855, AntiVirus engine 4.3.366

Fortinet’s showings in our tests have been steadily improving of late, and we looked forward to seeing if it could maintain this impressive trend. The submission took the form of a tiny 10MB main installer, with 126MB of updates in a separate bundle, and the install process was very fast and simple, running through the standard steps in good time with no reboot required. The interface is efficient and businesslike, with a good level of fine-tuning but little chance of getting lost amongst the clear, simple dialogs. Operation seemed smooth and reliable, and tests proceeded without incident.

Scanning speeds were fairly average, but overheads were light, although CPU use was a little high; RAM use and impact on our set of tasks were around average for this test. Detection rates showed that the upward trend has not yet reached an end, with some very impressive figures across all the sets. While the RAP sets trailed off a little into the later weeks, scores remained pretty decent. The WildList and clean sets presented no problems, and Fortinet earns a VB100 award.

The vendor now has four passes and a single fail in the last six tests, the annual Linux test having been skipped; seven passes and three fails over the last two years, again just missing the Linux comparatives. With no stability problems this month and good speeds, all tests were completed within 24 hours.

Version number 6.0.9.5, Scanning engine version number 4.6.2, Virus signature file from 19/06/2011, 20:48

Another long-term regular, F-PROT has been a reliable and seldom-changing entrant for many years now. The current product is a compact 31MB installer with 27MB of updates, and installs in just a few steps, completing very quickly but needing a reboot to finish off. The interface is simple in the extreme, with only the bare minimum of controls, but is pretty easy to use and tests proceeded well.

Scanning speeds were not bad, although overheads seemed a little high on access. Use of memory and processor cycles was low, with only a small effect on the runtime of our set of tasks. Detection rates were not the highest, but were reasonably decent in most sets, and with the core certification sets handled well a VB100 award is comfortably earned.

Frisk now has four passes and two fails in the last six tests; seven passes and five fails in the last two years, with all comparatives entered. With little fuss and no crashes or other problems, all tests completed comfortably within the one-day target time.

Version 911 build 411

F-Secure’s Client Security line appears designed for business use, with the installer (63MB, with 139MB of updates) providing options to connect to a central management system for policy control, although of course we opted for a standalone version. Other installation stages were more standard, and the process completed in reasonable time, with a reboot at the end.

The product interface is pretty pared down, with little configuration made available to the end-user, although presumably a centrally managed version will have more options for the administrator to impose fine control. It is fairly easy to use, although we did note a few problems: the custom scan failed to do anything several times and there was some rather odd behaviour when we did manage to start scans. Several times, we noted scans claiming to have completed, but recording much lower numbers of files scanned than we would expect – implying that the scanner was giving up part-way through the job assigned to it. In some cases, it seemed that the number reported could not represent the actual number scanned either, suggesting that the reporting system first counts the number of files in a folder, adding that to its total so far, then claims that total as completed even if the scan gives up halfway through the folder in question.
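The counting flaw suspected above can be modelled in a few lines. This is a hypothetical Python sketch of the behaviour the text infers, not F-Secure’s actual code; the function name `scan` and its inputs are invented purely for illustration. It assumes the reporting system adds each folder’s full file count to its running total before scanning that folder.

```python
def scan(folders, give_up_after):
    """Hypothetical model of the suspected reporting flaw.

    `folders` is a list of per-folder file counts; the simulated scanner
    gives up once it has examined `give_up_after` files.
    Returns (files_reported, files_actually_scanned).
    """
    reported = 0
    actual = 0
    for files_in_folder in folders:
        # Assumed flaw: the whole folder is counted up front,
        # before any file in it has been examined.
        reported += files_in_folder
        for _ in range(files_in_folder):
            if actual >= give_up_after:
                # Scanner gives up mid-folder, but the folder is
                # already included in the reported total.
                return reported, actual
            actual += 1
    return reported, actual


# Three folders of 100 files; the scan gives up after 150 files.
print(scan([100, 100, 100], 150))  # (200, 150)
```

In this model the reported figure (200) is lower than the 300 files expected, matching the short counts we saw, yet still 50 higher than the number of files actually examined, which is the mismatch the text describes.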

Scanning speeds proved to be reasonable on the first attempt, with huge improvements in the warm runs and similarly impressive on-access speeds, while all our resource use measures were very low and our set of tasks zipped through very quickly.

After several repeat runs, we got together what seemed to be a full set of results from the infected sets too, but with the reports clearly misleading it was hard to tell if everything had in fact been covered. On processing the figures, RAP scores were lower than we would expect from the product, with several other solutions using the same BitDefender engine doing much better this month, but with limited time we could not retest further to get closer to the truth.

The core certification sets were well handled though (the clean sets were run through on access to ensure no lurking false alarms had been skipped over by the flighty scanner), and a VB100 award is just about earned; this brings F-Secure’s recent record to four passes in the last six tests, with two tests skipped; nine passes and three no-entries in the last two years. The suspect scanning and logging behaviour, and resulting multiple retests, meant the product took up nearly five full days of testing time.

Version 22.0.2.38

G Data’s 2012 edition isn’t greatly different from the previous version, with the very hefty 346MB install package taking slightly more than the usual number of clicks to run through, with a reboot needed at the end. The interface is simple and clear, but has a wealth of fine-tuning tucked away beneath the surface, all presented in a pleasant and easy-to-use style.

Initial scan times were a little slower than many, as might be expected from a multi-engine product, but some very efficient optimization meant repeat scans blazed through very quickly, and on-access measures also improved greatly, from a reasonable starting point. Resource use was low, particularly CPU use, but for some reason our suite of tasks took quite some extra time to run through.

Detection rates were as eye-opening as ever, with splendid coverage across the sets, pushing very close to 100% in all but the latest weeks of the RAP sets. The WildList was demolished and the clean sets left untouched, easily earning G Data another VB100 award. The vendor’s record stands at four passes and a single fail in the last year, with no entry in the Linux test; eight passes and two fails in the last two years, with two tests (both on Linux) not entered. Stability was solid as a rock throughout testing, and thanks to the splendid use of optimization techniques all our tests completed well on schedule, in less than 24 hours.

Software version 4.4.4194, Definitions version 9660, VIPRE engine version 3.9.2495.2

With the takeover of Florida’s Sunbelt Software by Malta-based GFI a few months back, we’ve finally managed to adjust our systems to reference the new company name correctly, and put it in the right order alphabetically. The product is largely unchanged though, and still bears Sunbelt rather than GFI branding in most places. The product installer is compact at just 13MB, with updates of 68MB. The set-up process is pretty straightforward but does have a few longish pauses. A reboot is required, which is followed by some initial configuration steps. Our first attempt brought some warnings that the on-access component could not be started after the reboot, but a second reboot soon fixed things; the same issue re-emerged on a subsequent install too.

The interface is simple, unflashy and a little text-heavy, but it is fairly easy to find one’s way around, with a little more than the minimum set of controls, though not as many as some. Some of the options are a little less than clear at first glance, but usage is not too difficult after a little experimentation. Scanning speeds were pretty slow, and on-access overheads a little heavy in some areas, but resource use was tiny and our set of tasks ran through almost unimpeded.

Scanning our infected sets is always a little tricky with VIPRE, it being another product that takes the rather suspect route of storing all detection data in memory until the end of a scan. Thus, when scans fail (as they did regularly this month, with an error message reading starkly ‘Your scan has failed’), not a scrap of data can be retrieved. Attempts to get through all but the smallest sub-division of our sets led to problems, with large amounts of memory being eaten up, so we had to run many little tests and pile all the results together at the end.

Detection rates, once pulled out of rather unwieldy logs, showed some decent scores with good levels across the main and RAP sets, dropping a little in the proactive RAP week as expected. The core certification sets were well handled, and GFI (formerly Sunbelt) earns a VB100 award.

VIPRE now has four passes in the last six tests, having skipped two, and six passes and one fail in the last two years, with the rest not entered. Apart from the odd reboot issue after installation and the problems scanning large infected sets, there were no stability problems, but with the extra work imposed by large scans failing, and the many repeat runs required, testing took around six days to complete.

Product version 2.0.42, Virus database version 78656

Another pretty compact product, the install package sent in by Ikarus measured just 18MB, although an additional 68MB of updates was also provided. The install system was clear and well explained, but needed around a dozen clicks to complete, making it one of the longer set-up processes this month. No reboot was needed though, and the actual business of putting files and settings in place was pretty speedy.

The interface is simple and minimalist – one of few to make use of the .NET framework for its displays – and has been somewhat flaky in the past, but this month it ran fairly stably on the whole. Operation is reasonably straightforward, and testing proceeded well, but part way through one test the power in the lab died unexpectedly, causing some rather nasty problems. The test system failed to boot even as far as the logon screen, on several attempts, but booting into safe mode and disabling the guard process fixed things relatively easily.

Scanning speeds were pretty good, and on-access overheads not bad either, with low RAM use, medium use of CPU cycles, and an average impact on our set of tasks. Detection rates were extremely high, challenging the very best in this month’s test, but this excellent coverage of malware was counterbalanced by a couple of false alarms in the clean sets, as happened earlier to Emsisoft’s product, which uses the same engine. The developers informed us that at least one of the issues had already been fixed some time before we told them about it, but no VB100 award can be granted this month.

The product’s history now shows one pass and three fails in the last six tests, with two not entered; two passes and five fails over the past two years, with five tests skipped. There were no stability issues other than the bootup problems following the power outage, and testing ran through in very good time, comfortably under our 24-hour goal.

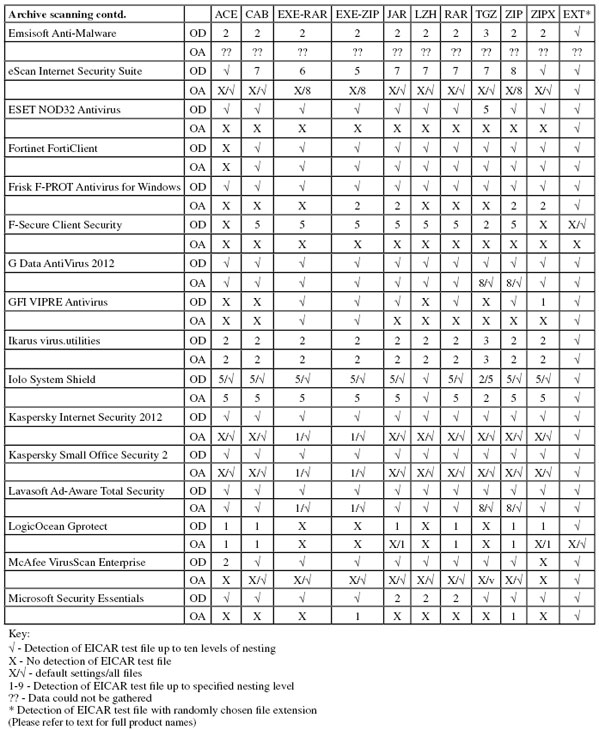

Version 4.2.4

With anti-malware protection based on the Frisk engine – already successful this month in a number of other forms – Iolo’s chances for success looked good from the off. The product arrived initially as a tiny downloader of less than 500KB, which proceeded to fetch the full 48MB install package from the Internet, taking seven to eight minutes. An offer is made to keep a copy of this file in case of future reinstalls, which we thought was rather a sensible move. The set-up follows the usual lines, taking only about a minute to complete, and requests a reboot at the end. An update is then run, completing very quickly, and all is good to go.

The product interface is crisp and professional-looking, with no surprises and a sensible, logical layout. A reasonable if not exhaustive level of configuration is provided, which is clear and easy to use. Options to respond to detections by logging or blocking access only, which are preferred for our testing, were sadly absent, so we resorted to allowing the product to rename. This involved changing the file extensions of detected items to ‘.INFECTED’. Logs could not be exported from the product interface, and despite an urgent request for information from the developers (not the first time such a request has been submitted), the bizarre log format refused to yield its secrets. Some manual hacking of the file produced some usable data, which was compared with the lists of renamed files in our sets to confirm accuracy.

Scanning speeds were not bad, but on-access overheads were noticeably heavier than most, with heavy use of CPU cycles and a significant impact on our set of activities. RAM use, rather oddly, was high with the system idle, but more normal when hectic file processing was going on.

Detection rates, when finally deciphered, were unspectacular at best, and poorer than expected in the RAP sets, which implies that we may not have made as good a job as we thought of spotting all detections. Nevertheless, the WildList was well managed, and the clean sets confirmed to be properly handled, earning Iolo a VB100 award.

The product has made only sporadic appearances in our tests, with one pass and two fails from three entries in the last six; one pass and three fails from four attempts in the past two years. There were no crashes or stability problems, and testing did not take too long, even factoring in the time needed to set our test sets back to normal and to process the log data. Everything was dealt with in about 36 hours.

Version 12.0.0.374 (a)

There was much excitement in the lab at seeing the latest offering from Kaspersky Lab, the 2012 product hitting the market good and early. Initial impressions were good, with the mid-sized 68MB installer running through with a minimum of interaction and maximum of speed, getting everything ready in excellent time. Updates were applied from a large bundle containing data for the company’s full product line.

The interface is very slick and attractive – a little quirky as usual, but quickly becoming easy to operate. Configuration for the huge range of components is exemplary in its detail and clarity, making it very simple to take complete control of how protection is implemented.

Running through the tests was pretty impressive at first, with good initial scan times becoming lightning fast in the warm runs, and similarly superb speeds in the on-access tests. Resource use was low, and our suite of activities took very little extra time to complete.

On-access detection tests powered through and showed the expected solid scores, and on-demand scans completed overnight with no problems. Trying to view the results, however, proved something of a problem. With the report database measuring around 400MB, it seemed too much for the product to handle, and we decided to reboot to clear the air a little. With the system up and running, we found the product failing to open, and tried again. After some investigation, and discussion with the developers, it emerged that such large logs were expected to cause significant delays in starting the product (which seems to need to load in all log data before it can get going). Leaving it overnight, we saw it using up almost 1GB of memory, but the interface still crashed whenever we tried to open it. Of course, our test scenarios are far outside the normal usage pattern of the product, but we would expect QA procedures to include some heavy stress testing to ensure this sort of edge case cannot completely disable the product.

Trying to move on, we looked at the log database, only to find that yet another gnarly and awkward proprietary format had been used. Contacting the developers once again, we were informed that no information was available on the format of the database, and that no tool other than the product itself was capable of converting it into usable form. Having already tried inserting the log into a second install of the product and met the same problems, we had a go at ripping the data out using some fiddly manual tricks, with some success. To double-check, we re-ran the tests in a series of smaller scans, clearing out the log history in between each, and finally got some usable results which compared closely with our initial findings. On one of the reinstalls, having gone no further than installing the product and tweaking the on-access settings, the machine crashed with a blue screen.

Finally putting results together, we saw the expected solid detection rates across the test sets, with the WildList and clean sets properly handled and a VB100 award just about earned. The product itself seemed far from solid though, to the extent that our first message to the developers included a query as to whether this was actually a pre-release beta build – they insisted it was a full shipping edition, but we hope that some urgent tidying up will be going on to ensure customers are not hit by as many problems as we were.

Kaspersky’s history for the I.S. line is decent, with four passes and one fail in the last six tests, one test having been skipped; eight passes, a single fail and three tests not entered in the last two years. With several blue screens, and the product GUI crashing repeatedly under the weight of its own log data, this was one of the least stable performances this month, and despite actual testing running through in good time, the extra effort of trying to load and convert the results along with the numerous crashes and re-tests meant it took up one of our test systems for more than six full days.

Version 9.1.0.59

A slightly more familiar product, this small business edition is closer to the company’s 2011 and ‘PURE’ product lines. The installer is notably larger, at 214MB, and again updates were applied from a bundle mirroring a complete online update source. The install process was simple and rapid, all done in under a minute with no reboot required.

The interface is more standard than that of the newer edition, offering a wide range of components in a smoothly integrated fashion, and again a massively detailed level of configuration is available, in a splendidly clear format. Testing ran through with minimal effort, the product showing responsiveness and stability throughout.

Scanning speeds were superb, with barely noticeable overheads on access, low use of CPU even when busy and RAM use low at idle and no more than average during heavy activity. Impact on our set of tasks was noticeable, but not excessive.

All detection tests completed in good time, with no problems converting data into a readable format, and results looked solid, with good coverage across the sets. The WildList presented no difficulties, but in the clean sets a single item, a developer tool from Microsoft, was alerted on as a threat, denying Kaspersky’s second offering a VB100 award this month despite a far more convincing performance. The developers inform us that the false alarm would have been mitigated by the company’s online reputation look-up system in real-world use, but under the current test rules such systems cannot be taken into account (we plan to introduce some significant changes in the near future which will include coverage of these ‘cloud’ components).

For now, Kaspersky’s business line must take the hit, but it has a decent record, with four passes and two fails in the last six tests; eight passes, three fails and a single test not entered in the last two years. The product ran very stably throughout, comfortably completing all tests within our target time of 24 hours.

Ad-Aware AntiVirus version 21.1.0.28

This month Lavasoft only entered its ‘Total’ product, which is based around the G Data engine with some extras of its own; we expect to see the company’s ‘Pro’ product (which includes the VIPRE engine) appearing once again in an upcoming test. The installer for the Total solution is a chunky 482MB with all updates rolled in, but the set-up process is not overly long considering the size. A reboot is needed to complete, and after the restart we noted the machine took a long time to come back to life. Once up and running we saw an error message stating that Ad-Aware could not be loaded, apparently due to ‘insufficient memory’ (the test machines boast a mere 4GB of RAM, which is perhaps not as enormous these days as it was a year or two ago). After a few moments though things settled down nicely, and the product loaded up fine.

The interface is pretty similar to G Data’s, with only the branding noticeably different, and this means it is admirably clear and well laid out, with a wealth of options easily accessible. After the initial wobble it ran smoothly, powering through the speed tests with excellent optimization in the warm runs after a fairly sluggish first look, and a little slower when the default cap on the size of files to scan was disabled. Use of resources was not bad at all, and impact on our set of tasks was not too heavy either.

Detection rates were superb, the main sets completely blown away and the RAP sets dealt with well (although not scoring quite as well as G Data, hinting that perhaps the definitions provided were not quite as recent) – presumably real-world users would have an even better experience.

The clean sets were splendidly well handled, and with no problems in the WildList Lavasoft earns another VB100 award for the Total solution. Having entered four of the last seven tests, the product now has two passes and two fails, with initial teething problems apparently sorted out and a long and glorious VB100 career on the horizon. The only issue noted was the slow initial startup, which was not repeated on subsequent reboots, and from there on testing romped through in excellent time, completing in under the target 24-hour period.

Version 1.1.68, Definitions version 14.0.90

Another from the Preventon stable, Gprotect has only one previous entry under its belt but promised few surprises for the lab team. As with others in this cluster of products, the installer weighed in at 63MB and ran simply and rapidly with no need to reboot, although a web connection was needed to activate. A reasonable set of controls was offered in a clear and simple interface. Once again logging defaulted to extreme verbosity, and discarded data older than a few busy minutes of scanning, but some tweaks to the GUI and registry easily fixed these oddities.

Running through the tests was untaxing, though a little uncomfortable on the eye thanks to the garish purple, green and orange colour scheme. Scanning speeds were decent, with reasonable overheads on access. Resource consumption and impact on our set of tasks were not bad either, and detection rates were solid and workmanlike, if a little less than inspiring.

The core certification sets were handled well, earning Gprotect its second VB100 award from its second entry in the last three tests, the middle one having been skipped. Stability was solid throughout, and testing took only slightly over the planned 24 hours to complete.

Scan engine version 5400.1158, DAT version 6383.0000

McAfee’s corporate solution is one of the few to have changed little over several years of regular testing, and remains grey and serious as befits its business target market. The installer is a smallish 37MB, accompanied by updates measuring 107MB unpacked, and sets up in reasonable time after a fair number of questions, including an offer to disable Windows Defender. A reboot is not demanded, but is required for some components to become fully operational.

The interface is plain and simple, with an olde worlde feel to it, but is very easy to use and offers an impeccable level of fine-tuning to suit the most demanding of users. It ran very stably throughout the test period, showing some good on-demand speeds, with a little speed-up in the warm runs, and on-access overheads a shade on the high side of medium. RAM use was a little higher than most, but CPU use and impact on our set of tasks were not excessive.

Detection rates were very good in the main sets and decent in the RAP sets, with scores declining very slightly through the weeks. The core certification sets were handled perfectly, earning McAfee another VB100 award. The product seems to be recovering from something of a rough patch, with two passes and one fail in the last six tests, and three not entered; six passes and two fails over the past two years, with four tests skipped. No problems were observed this month, and testing completed in good time, around the one day hoped for from all products.

Product version 2.0.0657.0, Signature version 1.105.2231.0

The base installer for Microsoft’s free-for-home-use solution was one of the smallest this month, at just 9.6MB, but updates of 64MB brought the total download required to a more standard size. The set-up process was simple and fairly speedy, with minimal user interaction, but a reboot was needed at the end. The interface is well integrated into Windows styling, as one would expect, and is generally usable although the language used is occasionally a little unclear. Configuration is fairly basic, but a few options are provided, and stability was firm and reliable throughout.

Scanning speeds were not the fastest but overheads were very light indeed, with minimal use of resources and only the tiniest impact on our set of tasks. Detection rates, when usable data had been pulled out of rather unfriendly logs, were good in the main sets and decent in the RAP sets too, dropping off a little into the later weeks. The core WildList and clean sets were dealt with well, and a VB100 award is duly granted to Microsoft.

This product is usually only entered for alternate tests, with the company’s Forefront solution submitted for server platforms. Security Essentials’ history now shows two passes from two entries in the last six tests; four passes and a single fail since first appearing in December 2009.

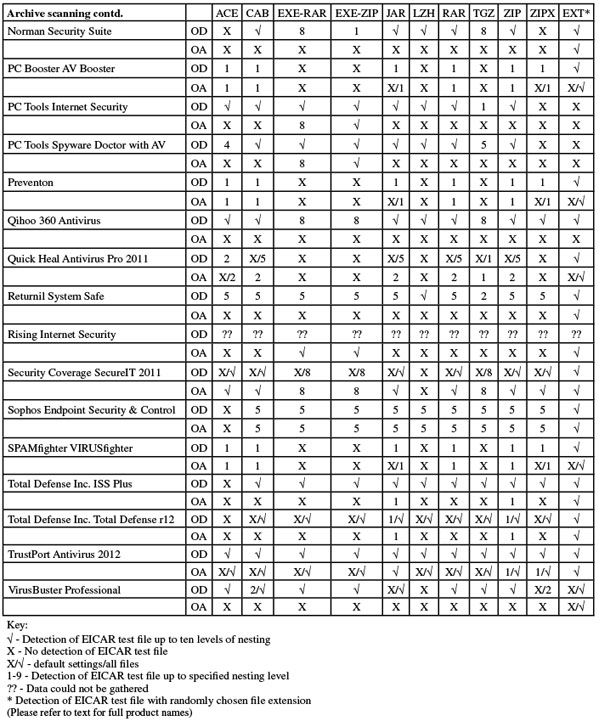

Antivirus version 8.00, Norman scanner engine version 6.07.10

Norman’s suite solution has raised some eyebrows in the past with the occasional moment of eccentricity, and we looked forward to more surprises this month. The 135MB installer ran through surprisingly quickly, with only a few steps to click through, but ended with a request to reboot.

The interface remains quirky and occasionally flaky, with the status page frequently warning that anti-malware components are not installed despite them clearly being fully operational. Scanning speeds were as slow as ever thanks to the in-depth sandboxing system, and on-access overheads were heavy too. Use of CPU cycles when busy was pretty high, but memory use and impact on our set of tasks were surprisingly low.

Getting through our large infected sets took some time, but not excessively long, and results showed some pretty decent scores in the main sets, with a reasonable showing in the RAP sets too. In the clean sets however (and as expected, given the results of other products using the same engine), a number of items were labelled as malware, and Norman does not quite make the grade for a VB100 award this month.

The vendor’s recent history is good, with five passes and just this one fail over the last six tests; longer term things look a little more rocky, with five passes and five fails in the last two years, two tests having been skipped. Other than the occasional odd message from the GUI, stability was mostly pretty good this month, and having been carefully scheduled to run over a weekend, testing only took up two full days of live lab time.

Version 1.1.68, Definitions version 14.0.90

Another member of the Preventon clan, with two previous appearances in our tests, AV Booster followed the familiar pattern of a 63MB installer, which was quick to run but needed web access to function and to apply a licence code at the end. No reboot was needed. Operating the simple, minimal GUI was straightforward, and tests ran through smoothly.

Scanning speeds were OK, overheads a little lighter than average, with reasonable resource use and an unintrusive effect on our set of activities. Scores were generally decent too, tailing off through the RAP sets as expected. No problems in the WildList or clean sets mean a VB100 award is earned by PC Booster, giving the vendor two passes and one fail in the five tests since its first appearance.

Stability was good with no freezes, crashes or other problems, although the product’s verbose logging was a little strange. With decent speeds in the larger sets, all tests were completed in only just over the 24-hour target limit.

Version 2011 (8.0.0.654), Database version 6.17760

As usual a brace of products was submitted by PC Tools, now a subsidiary of the mighty Symantec, whose own product is absent from this month’s test. This version of PC Tools includes a firewall and other components on top of the standard malware protection, and the installer is a fair size at 216MB, including all updates. The set-up process has only a few dialogs but takes several minutes to complete, with no reboot needed to finish off.

The interface hasn’t changed much in the few years since we first encountered this range, but it doesn’t look too dated. The design more or less follows standard practice, but configuration is pretty sparse in places, and where there are options they are often less than clear. Operation proved reasonably straightforward though, and the tests ran through without too much difficulty.

Scanning speeds were pretty good, with some splendid optimization in the warm runs, and on-access overheads were very light. Use of system resources was perhaps a little above average, but our set of activities was completed in decent time. Scanning of the main sets took rather longer than we would have hoped, but completed without any problems, showing some very solid scores across the sets. The WildList caused no problems, but in the clean sets a number of items were alerted on as ‘Zero.Day.Threat’, including some components from a major business package from IBM. This spoiled PC Tools’ chances of a VB100 award this month.

The suite’s history is good, with entries only on desktop platforms resulting in two passes and now a single fail in the last six tests, with three tests skipped; five passes and a fail from six entries in the last two years. No crashes or stability issues were encountered, but slow handling of our large test sets meant that testing took around 48 hours to complete.

Version 8.0.0.624, Database version 6.17760

The Spyware Doctor brand has a long history, and again the current version is much the same as those we have seen over the past few years. The installer weighed in at 197MB, slightly smaller than its suite counterpart thanks to a narrower range of components, and the install process was a little quicker, completing in a couple of minutes with again no need to reboot.

The GUI is bright and shiny, with lots of status information on the front page and settings sections for a wide range of sub-components. For the most part, however, these controls are limited to ‘on’ or ‘off’, with little fine-tuning available. Usage was not too difficult though, and the tests ran through without problems.

Scanning speeds were impressive and overheads very light, especially on the warm runs. Use of resources was around average, with a middling hit on our set of tasks. Good detection rates extended across all sets, drifting downwards into the later weeks of the RAP sets, and the WildList was covered flawlessly. As feared though, the same handful of false alarms – clearly caused by over-sensitive heuristics – cropped up in the clean sets, denying the product certification this month.

The product’s history again reflects the pattern of desktop comparatives alternating with server platforms, with two passes and a fail from three entries in the last six; five passes and this one fail in the last two years, with all six server tests skipped. Stability was generally sound throughout, although the on-access run over our infected sets did have to be repeated when it appeared the protection had simply switched off halfway through the first attempt. Obtaining a full set of results thus took close to three full days of testing.

Version 4.3.68, Definitions version 14.0.90

The source of a number of this month’s products, and itself based on the VirusBuster engine, Preventon closely followed an already well-established pattern. The 64MB installer set things up quickly, with no reboot, and after some tweaks to the simple settings and some registry changes to allow reliable logging, tests ran through pleasantly smoothly. Speeds and resource usage were on the good side of average, with only the suite of activities taking a little longer than expected.

Detection rates were not bad in the main sets, less than stellar in the RAPs but still respectable, and with no problems in the core certification sets a VB100 award is comfortably earned. Preventon’s history is slightly longer than those of many of its partners, showing three passes and a single fail in the last six tests, with two not entered; five passes and two fails in the last two years. No issues were noted during testing, which took just a little longer than the target 24 hours to complete.

App version 2.0.0.2033, Signature date 2011-06-20

Qihoo’s product is a little quirky in its implementation, but with the BitDefender engine under the covers we usually manage to coax a decent showing out of it. The installer measured 122MB, including all required updates, and installed rapidly with little fuss and no need for a reboot. The interface is tidy and simple, providing a fair degree of fine-tuning, and is mostly easy to use, although language translation is a little uneven in places.

Scanning speeds were only medium, but overheads pretty light, and use of resources and impact on our set of activities were impressively low too. This may in part be down to one of the oddities of implementation in this product, which became particularly clear when running the on-access test over infected sets. I hesitate to say that the product doesn’t work properly, the case perhaps being more that it functions differently from expected norms. When a detection occurs on access, be it on read or on write, access to the file is not always prevented; instead, in most cases the product simply produces a pop-up claiming to have blocked access (this could, of course, be another translation oddity). When multiple detections occur in close proximity, these pop-ups can take several hours to appear, making the protection considerably less effective than the pop-ups suggest. Log entries suffer a similar delay, showing that the problem lies with the detections themselves, rather than merely with the pop-up system.

However, as our rules do not insist on blocking access, only on recording detections in logs, it just about scrapes by, showing some solid scores in the main sets when the logs were eventually populated. Moving on to the on-demand tests, we hit another snag when it became clear that logging was once again not being written out to file, but instead accumulating in memory, and again no sort of checking was in place to ensure that excessive amounts of RAM were not being consumed. After a couple of days’ run time, with the system steadily getting slower and slower and close to 2GB of memory taken up by the scanner process, the job crashed, leaving no salvageable data for us to use. Instead we had to start from scratch, running multiple smaller jobs once more.

With full results finally in, the excellent scores expected from the BitDefender engine were recorded, with very good coverage across the sets. A VB100 award is just about granted, although it does seem that ‘not working properly’ is not so very far from the truth. Qihoo has managed to scrape four passes from the last six tests, with two not entered, and in the ten tests since its first appearance shows six passes and a single fail, with three no-entries.