Virus Bulletin

Copyright © Virus Bulletin 2017

In an era where one dramatic statement after another is made about the state of security, it's a good idea sometimes to take stock and look at how far we have come.

When the ILOVEYOU virus wreaked havoc 17 years ago [1], all it took for a victim to become infected was to open the email attachment. To make matters worse, spam filters were still in their infancy and many email accounts weren't protected at all.

In 2017, it would be rare to find an email account that wasn't somehow protected by a spam filter. Moreover, while malware that executes upon opening an attachment does exist, such attacks are a lot less common these days, and when they do happen they almost always depend on the user running a vulnerable version of the affected software [2].

Email remains an important attack vector though, and five malicious emails that caused problems for some of the products in this month's test provide a good illustration of how users' machines get infected via email. The emails in question appeared to reference an invoice, and the attachment, a PDF file, promised to contain more details.

Upon opening the attachment, however, the recipient was asked to open a second file. For many users, alarm bells would go off here, and rightly so, but for many others they wouldn't, which isn't too surprising given that Adobe's PDF reader also asks for permission to print a document. If the second file was indeed opened, another prompt would appear, asking the user to enable macros. Once enabled, these macros would download the actual payload. (Though we did perform some basic analysis of the attached PDF and embedded document, executing the full chain was beyond the scope of this test. Moreover, the chain would have delivered different malware in different circumstances. It is likely that the final payload would have been ransomware or a banking trojan.)

At each step in the infection process (receipt of email, opening of attachment, opening of second document, enabling of macros), the likely number of successful infections decreases – and anti-virus running on the endpoint reduces this probability even further. But nothing reduces it as much as a spam filter which, as data in this test demonstrates, could block 99% or more of the emails with malicious attachments. (We feel obliged to add a disclaimer that the numbers in this report should be seen in the context of the test and don’t automatically translate to a real-world environment. For this particular, non-targeted threat, however, we believe the figure to be quite accurate.)

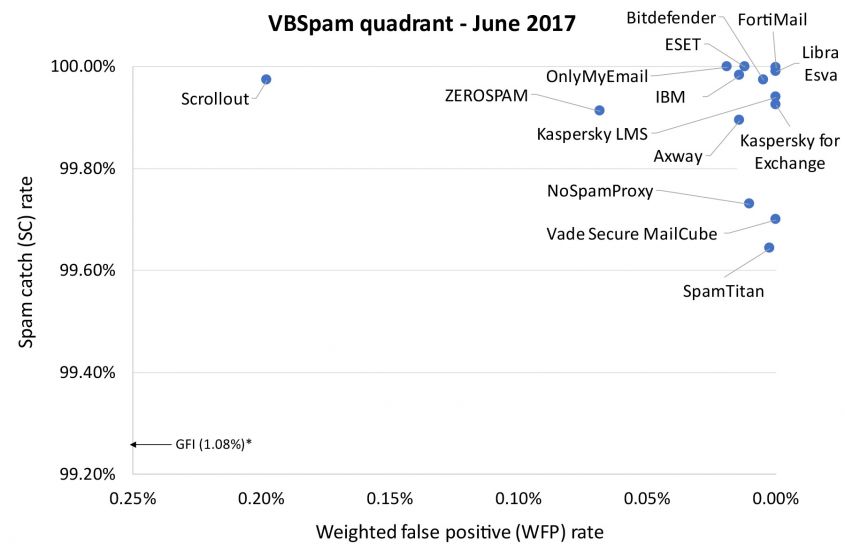

Of course, malware isn't the only threat spreading via email, and the 15 email security solutions we tested performed even better on 'general' spam (spam without malicious attachments). With one exception, those products all achieved a VBSpam award, with eight products performing so well they achieved a VBSpam+ award. We also tested seven DNS-based blacklists of various kinds.

Of the spam emails we received in this test, around one in 100 was malicious. This number was not significantly higher than in the last test and may still be a consequence of the volatility of spam discussed in that report. And while the ratio of malicious to non-malicious spam may be very different in different email streams, part of the reason why it may seem that malicious spam is more prevalent than 1% is that it's harder to block than non-malicious spam.

Indeed, in our test, the 15 full solutions on our test bench were more than six times as likely to miss a malicious spam email as they were to miss a 'general' spam email.
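The 'more than six times' figure can be cross-checked against the false negative counts in the results tables of this report. What follows is only a rough sketch of that arithmetic in Python. It assumes the comparison pools the miss rates of the 15 full solutions, which the report does not state explicitly, and the function and variable names are illustrative.

```python
# False negatives per full solution, copied from the results tables in this
# report: (all spam missed, malicious spam missed).
FALSE_NEGATIVES = {
    "Axway": (223, 27),
    "Bitdefender": (58, 0),
    "ESET": (3, 0),
    "FortiMail": (7, 0),
    "GFI MailEssentials": (1580, 9),
    "IBM Lotus Protector": (38, 0),
    "Kaspersky for Exchange": (159, 75),
    "Kaspersky LMS": (129, 70),
    "Libra Esva": (23, 0),
    "NoSpamProxy": (556, 25),
    "OnlyMyEmail": (2, 0),
    "Scrollout": (56, 0),
    "SpamTitan": (748, 53),
    "Vade Secure MailCube": (630, 2),
    "ZEROSPAM": (186, 0),
}
SPAM_TOTAL = 209_468   # all spam emails in the corpus
MALWARE_TOTAL = 1_958  # spam emails with a malicious attachment


def pooled_miss_rates(false_negatives):
    """Return (malicious miss rate, general miss rate) pooled over products."""
    products = len(false_negatives)
    malware_missed = sum(mal for _, mal in false_negatives.values())
    general_missed = sum(total - mal for total, mal in false_negatives.values())
    malware_rate = malware_missed / (products * MALWARE_TOTAL)
    general_rate = general_missed / (products * (SPAM_TOTAL - MALWARE_TOTAL))
    return malware_rate, general_rate


malware_rate, general_rate = pooled_miss_rates(FALSE_NEGATIVES)
ratio = malware_rate / general_rate
print(f"malicious spam missed: {malware_rate:.2%}")
print(f"general spam missed:   {general_rate:.2%}")
print(f"ratio: {ratio:.1f}")
```

Under this pooling assumption the ratio comes out between six and seven, and the pooled miss rate on malicious emails stays below 1%.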

Performance on those malicious emails was still good though: the products blocked on average more than 99% of them, and eight products blocked all 1,958 of them.

Only around eight per cent of the malicious emails managed to bypass at least one full solution, with five emails – the fake invoices mentioned earlier – missed by three products. (The test did not check whether a user would have been able to retrieve those malicious emails from quarantine. In some cases, stubborn users have been known to infect themselves this way.)

Among the 'general' spam, only about one and a half per cent of the emails managed to bypass at least one product, proving once again that sending spam is a game of numbers: the spammer must send a (very) large number of emails to ensure that enough of them reach enough users. As on previous occasions, fraudulent and scammy emails, which are typically sent in smaller batches and are thus better able to stay under the radar, were among those that were hardest for products to block.

With one exception, products performed well on the ham feed of legitimate emails, blocking either very few or none at all. An email in Brazilian Portuguese proved the most difficult to filter, but even in this case only three products blocked it erroneously.

On the spam corpus, OnlyMyEmail, ESET and Fortinet stood out, each missing fewer than ten emails. Fortinet also didn't block any legitimate email, giving it the highest final score and making it one of eight products to achieve a VBSpam+ award, the others being Axway, Bitdefender, both Kaspersky products, Libra Esva, MailCube and Net At Work's NoSpamProxy – which returns to the public test bench after a three-year absence, gaining its first VBSpam+ award.

Axway

SC rate: 99.89%

FP rate: 0.00%

Final score: 99.82

Project Honey Pot SC rate: 99.86%

Abusix SC rate: 99.95%

Newsletters FP rate: 1.7%

Malware SC rate: 98.62%

Bitdefender

SC rate: 99.97%

FP rate: 0.00%

Final score: 99.95

Project Honey Pot SC rate: 99.96%

Abusix SC rate: 99.999%

Newsletters FP rate: 0.6%

Malware SC rate: 100.00%

ESET

SC rate: 99.999%

FP rate: 0.01%

Final score: 99.94

Project Honey Pot SC rate: 99.998%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

FortiMail

SC rate: 99.997%

FP rate: 0.00%

Final score: 99.997

Project Honey Pot SC rate: 99.996%

Abusix SC rate: 99.997%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

GFI MailEssentials

SC rate: 99.25%

FP rate: 1.05%

Final score: 93.85

Project Honey Pot SC rate: 99.07%

Abusix SC rate: 99.56%

Newsletters FP rate: 5.2%

Malware SC rate: 99.54%

GFI's high false positive rate is unusual for the product, which hasn't failed a test for a long time. We are hopeful that in the next test its scores will return to the values that we are more used to seeing, and show that this glitch was due to a misconfiguration of the product.

IBM Lotus Protector

SC rate: 99.98%

FP rate: 0.01%

Final score: 99.91

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.999%

Newsletters FP rate: 0.3%

Malware SC rate: 100.00%

Kaspersky for Exchange

SC rate: 99.92%

FP rate: 0.00%

Final score: 99.92

Project Honey Pot SC rate: 99.89%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.0%

Malware SC rate: 96.17%

Kaspersky LMS

SC rate: 99.94%

FP rate: 0.00%

Final score: 99.94

Project Honey Pot SC rate: 99.91%

Abusix SC rate: 99.98%

Newsletters FP rate: 0.0%

Malware SC rate: 96.42%

Libra Esva

SC rate: 99.99%

FP rate: 0.00%

Final score: 99.99

Project Honey Pot SC rate: 99.98%

Abusix SC rate: 99.999%

Newsletters FP rate: 0.0%

Malware SC rate: 100.00%

NoSpamProxy

SC rate: 99.73%

FP rate: 0.00%

Final score: 99.69

Project Honey Pot SC rate: 99.64%

Abusix SC rate: 99.90%

Newsletters FP rate: 1.2%

Malware SC rate: 98.72%

OnlyMyEmail

SC rate: 99.999%

FP rate: 0.01%

Final score: 99.90

Project Honey Pot SC rate: 99.999%

Abusix SC rate: 100.00%

Newsletters FP rate: 0.9%

Malware SC rate: 100.00%

Scrollout

SC rate: 99.97%

FP rate: 0.14%

Final score: 98.98

Project Honey Pot SC rate: 99.97%

Abusix SC rate: 99.99%

Newsletters FP rate: 7.0%

Malware SC rate: 100.00%

SpamTitan

SC rate: 99.64%

FP rate: 0.00%

Final score: 99.63

Project Honey Pot SC rate: 99.63%

Abusix SC rate: 99.67%

Newsletters FP rate: 0.3%

Malware SC rate: 97.29%

Vade Secure MailCube

SC rate: 99.70%

FP rate: 0.00%

Final score: 99.70

Project Honey Pot SC rate: 99.53%

Abusix SC rate: 99.997%

Newsletters FP rate: 0.0%

Malware SC rate: 99.90%

ZEROSPAM

SC rate: 99.91%

FP rate: 0.06%

Final score: 99.57

Project Honey Pot SC rate: 99.86%

Abusix SC rate: 99.999%

Newsletters FP rate: 1.2%

Malware SC rate: 100.00%

IBM X-Force IP

SC rate: 94.29%

FP rate: 0.01%

Final score: 94.23

Project Honey Pot SC rate: 92.11%

Abusix SC rate: 98.23%

Newsletters FP rate: 0.0%

Malware SC rate: 98.31%

IBM X-Force Combined

SC rate: 97.81%

FP rate: 0.01%

Final score: 97.75

Project Honey Pot SC rate: 97.13%

Abusix SC rate: 99.05%

Newsletters FP rate: 0.0%

Malware SC rate: 98.31%

IBM X-Force URL

SC rate: 74.00%

FP rate: 0.00%

Final score: 74.00

Project Honey Pot SC rate: 87.49%

Abusix SC rate: 49.65%

Newsletters FP rate: 0.0%

Malware SC rate: 0.00%

Spamhaus DBL

SC rate: 35.73%

FP rate: 0.00%

Final score: 35.73

Project Honey Pot SC rate: 53.18%

Abusix SC rate: 4.25%

Newsletters FP rate: 0.0%

Malware SC rate: 0.10%

Spamhaus ZEN

SC rate: 93.93%

FP rate: 0.00%

Final score: 93.93

Project Honey Pot SC rate: 90.79%

Abusix SC rate: 99.60%

Newsletters FP rate: 0.0%

Malware SC rate: 99.54%

Spamhaus ZEN+DBL

SC rate: 96.11%

FP rate: 0.00%

Final score: 96.11

Project Honey Pot SC rate: 94.15%

Abusix SC rate: 99.65%

Newsletters FP rate: 0.0%

Malware SC rate: 99.54%

URIBL

SC rate: 66.96%

FP rate: 0.06%

Final score: 66.67

Project Honey Pot SC rate: 72.45%

Abusix SC rate: 57.06%

Newsletters FP rate: 0.0%

Malware SC rate: 0.00%

| Product | True negatives | False positives | FP rate | False negatives | True positives | SC rate | VBSpam | Final score |
|---|---|---|---|---|---|---|---|---|
| Axway | 8418 | 0 | 0.00% | 223 | 209245 | 99.89% | VBSpam+ | 99.82 |
| Bitdefender | 8418 | 0 | 0.00% | 58 | 209410 | 99.97% | VBSpam+ | 99.95 |
| ESET | 8417 | 1 | 0.01% | 3 | 209465 | 99.999% | VBSpam | 99.94 |
| FortiMail | 8418 | 0 | 0.00% | 7 | 209461 | 99.997% | VBSpam+ | 99.997 |
| GFI MailEssentials§ | 8330 | 88 | 1.05% | 1580 | 207888 | 99.25% | X | 93.85 |
| IBM Lotus Protector | 8417 | 1 | 0.01% | 38 | 209430 | 99.98% | VBSpam | 99.91 |
| Kaspersky for Exchange | 8417 | 0 | 0.00% | 159 | 209309 | 99.92% | VBSpam+ | 99.92 |
| Kaspersky LMS | 8417 | 0 | 0.00% | 129 | 209339 | 99.94% | VBSpam+ | 99.94 |
| Libra Esva | 8418 | 0 | 0.00% | 23 | 209445 | 99.99% | VBSpam+ | 99.99 |
| NoSpamProxy | 8418 | 0 | 0.00% | 556 | 208912 | 99.73% | VBSpam+ | 99.69 |
| OnlyMyEmail | 8417 | 1 | 0.01% | 2 | 209466 | 99.999% | VBSpam | 99.90 |
| Scrollout | 8406 | 12 | 0.14% | 56 | 209412 | 99.97% | VBSpam | 98.98 |
| SpamTitan | 8418 | 0 | 0.00% | 748 | 208720 | 99.64% | VBSpam | 99.63 |
| Vade Secure MailCube | 8418 | 0 | 0.00% | 630 | 208838 | 99.70% | VBSpam+ | 99.70 |
| ZEROSPAM | 8413 | 5 | 0.06% | 186 | 209282 | 99.91% | VBSpam | 99.57 |
| IBM X-Force IP* | 8417 | 1 | 0.01% | 11955 | 197513 | 94.29% | N/A | 94.23 |
| IBM X-Force Combined* | 8417 | 1 | 0.01% | 4584 | 204884 | 97.81% | N/A | 97.75 |
| IBM X-Force URL* | 8418 | 0 | 0.00% | 54470 | 154998 | 74.00% | N/A | 74.00 |
| Spamhaus DBL* | 8418 | 0 | 0.00% | 134631 | 74837 | 35.73% | N/A | 35.73 |
| Spamhaus ZEN* | 8418 | 0 | 0.00% | 12711 | 196757 | 93.93% | N/A | 93.93 |
| Spamhaus ZEN+DBL* | 8418 | 0 | 0.00% | 8140 | 201328 | 96.11% | N/A | 96.11 |
| URIBL* | 8413 | 5 | 0.06% | 69207 | 140261 | 66.96% | N/A | 66.67 |

*The IBM X-Force, Spamhaus and URIBL products are partial solutions and their performance should not be compared with that of other products.

§Please refer to earlier note.

(Please refer to the text for full product names and details.)

| Product | Newsletters: false positives | Newsletters: FP rate | Malware: false negatives | Malware: SC rate | Project Honey Pot: false negatives | Project Honey Pot: SC rate | Abusix: false negatives | Abusix: SC rate | STDev† | Speed: 10% | Speed: 50% | Speed: 95% | Speed: 98% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Axway | 6 | 1.7% | 27 | 98.62% | 189 | 99.86% | 34 | 99.95% | 0.41 | | | | |
| Bitdefender | 2 | 0.6% | 0 | 100.00% | 57 | 99.96% | 1 | 99.999% | 0.13 | | | | |
| ESET | 0 | 0.0% | 0 | 100.00% | 3 | 99.998% | 0 | 100.00% | 0.02 | | | | |
| FortiMail | 0 | 0.0% | 0 | 100.00% | 5 | 99.996% | 2 | 99.997% | 0.03 | | | | |
| GFI MailEssentials§ | 18 | 5.2% | 9 | 99.54% | 1253 | 99.07% | 327 | 99.56% | 0.94 | | | | |
| IBM Lotus Protector | 1 | 0.3% | 0 | 100.00% | 37 | 99.97% | 1 | 99.999% | 0.11 | | | | |
| Kaspersky for Exchange | 0 | 0.0% | 75 | 96.17% | 145 | 99.89% | 14 | 99.98% | 0.68 | | | | |
| Kaspersky LMS | 0 | 0.0% | 70 | 96.42% | 117 | 99.91% | 12 | 99.98% | 0.64 | | | | |
| Libra Esva | 0 | 0.0% | 0 | 100.00% | 22 | 99.98% | 1 | 99.999% | 0.07 | | | | |
| NoSpamProxy | 4 | 1.2% | 25 | 98.72% | 483 | 99.64% | 73 | 99.90% | 0.42 | | | | |
| OnlyMyEmail | 3 | 0.9% | 0 | 100.00% | 2 | 99.999% | 0 | 100.00% | 0.04 | | | | |
| Scrollout | 24 | 7.0% | 0 | 100.00% | 46 | 99.97% | 10 | 99.99% | 0.15 | | | | |
| SpamTitan | 1 | 0.3% | 53 | 97.29% | 503 | 99.63% | 245 | 99.67% | 1.24 | | | | |
| Vade Secure MailCube | 0 | 0.0% | 2 | 99.90% | 628 | 99.53% | 2 | 99.997% | 0.41 | | | | |
| ZEROSPAM | 4 | 1.2% | 0 | 100.00% | 185 | 99.86% | 1 | 99.999% | 0.41 | | | | |
| IBM X-Force IP* | 0 | 0.0% | 33 | 98.31% | 10632 | 92.11% | 1323 | 98.23% | 5.5 | N/A | N/A | N/A | N/A |
| IBM X-Force Combined* | 0 | 0.0% | 33 | 98.31% | 3874 | 97.13% | 710 | 99.05% | 3.71 | N/A | N/A | N/A | N/A |
| IBM X-Force URL* | 0 | 0.0% | 1958 | 0.00% | 16861 | 87.49% | 37612 | 49.65% | 13.18 | N/A | N/A | N/A | N/A |
| Spamhaus DBL* | 0 | 0.0% | 1956 | 0.10% | 63104 | 53.18% | 71530 | 4.25% | 19.88 | N/A | N/A | N/A | N/A |
| Spamhaus ZEN* | 0 | 0.0% | 9 | 99.54% | 12414 | 90.79% | 299 | 99.60% | 5.71 | N/A | N/A | N/A | N/A |
| Spamhaus ZEN+DBL* | 0 | 0.0% | 9 | 99.54% | 7882 | 94.15% | 259 | 99.65% | 4 | N/A | N/A | N/A | N/A |
| URIBL* | 0 | 0.0% | 1958 | 0.00% | 37132 | 72.45% | 32078 | 57.06% | 13.74 | N/A | N/A | N/A | N/A |

*The Spamhaus products, IBM X-Force and URIBL are partial solutions and their performance should not be compared with that of other products. None of the queries to the IP blacklists included any information on the attachments; hence their performance on the malware corpus is added purely for information.

†The standard deviation of a product is calculated using the set of its hourly spam catch rates.

Speed colour key: green = 0-30 seconds; yellow = 30 seconds to two minutes; orange = two minutes to 10 minutes; red = more than 10 minutes.

§Please refer to earlier note.

(Please refer to the text for full product names.)

| Hosted solutions | Anti-malware | IPv6 | DKIM | SPF | DMARC | Multiple MX-records | Multiple locations |

| OnlyMyEmail | Proprietary (optional) | √ | √ | * | √ | √ | |

| Vade Secure MailCube | DrWeb; proprietary | √ | √ | √ | √ | √ | |

| ZEROSPAM | ClamAV | √ | √ | √ |

* OnlyMyEmail verifies DMARC status but doesn't provide feedback at the moment.

(Please refer to the text for full product names.)

| Local solutions | Anti-malware | IPv6 | DKIM | SPF | DMARC | Interface | |||

| CLI | GUI | Web GUI | API | ||||||

| Axway MailGate | Kaspersky, McAfee | √ | √ | √ | √ | ||||

| Bitdefender | Bitdefender | √ | √ | √ | √ | ||||

| ESET | ESET Threatsense | √ | √ | √ | √ | √ | √ | ||

| FortiMail | Fortinet | √ | √ | √ | √ | √ | √ | √ | |

| GFI MailEssentials | Five anti-virus engines | √ | √ | √ | |||||

| IBM | Sophos; IBM Remote Malware Detection | √ | √ | √ | |||||

| Kaspersky LMS | Kaspersky Lab | √ | √ | √ | √ | ||||

| Kaspersky SMG | Kaspersky Lab | √ | √ | √ | √ | ||||

| Libra Esva | ClamAV; others optional | √ | √ | √ | √ | ||||

| NoSpamProxy | Cyren | √ | √ | √ | √ | √ | |||

| Scrollout | ClamAV | √ | √ | √ | √ | ||||

| Sophos | Sophos | √ | √ | √ | |||||

| SpamTitan | Kaspersky; ClamAV | √ | √ | √ | √ | √ | √ | ||

(Please refer to the text for full product names.)

| Product | Final score |
|---|---|
| FortiMail | 99.997 |
| Libra Esva | 99.99 |
| Bitdefender | 99.95 |
| ESET | 99.94 |
| Kaspersky LMS | 99.94 |
| Kaspersky for Exchange | 99.92 |
| IBM | 99.91 |
| OnlyMyEmail | 99.90 |
| Axway | 99.82 |
| Vade Secure MailCube | 99.70 |
| NoSpamProxy | 99.69 |
| SpamTitan | 99.63 |
| ZEROSPAM | 99.57 |
| Scrollout | 98.98 |
| GFI MailEssentials | 93.85 |

(Please refer to the text for full product names and details.)

*Please refer to earlier note. (Please refer to text for full product names and details.)

This was another good test for almost all products, with extremely high spam catch rates and very good block rates for malicious emails. Users can rest assured that these products greatly reduce the likelihood of their falling victim to an email-borne attack.

The next test report, which is to be published in September 2017, will continue to look at all aspects of spam. Those interested in submitting a product are asked to contact [email protected].

[1] https://www.virusbulletin.com/virusbulletin/2015/05/throwback-thursday-when-love-came-town-june-2000.

[2] https://www.virusbulletin.com/blog/2017/06/cve-2017-0199-new-cve-2012-0158/.

[3] http://www.postfix.org/XCLIENT_README.html.

The full VBSpam test methodology can be found at https://www.virusbulletin.com/testing/vbspam/vbspam-methodology/.

The test ran for 19 days, from 12am on 13 May to 12am on 1 June 2017.

The test corpus consisted of 218,231 emails. 209,468 of these were spam, 134,768 of which were provided by Project Honey Pot, with the remaining 74,706 spam emails provided by spamfeed.me, a product from Abusix. There were 8,418 legitimate emails ('ham') and 345 newsletters.

Moreover, 1,958 emails from the spam corpus were found to contain a malicious attachment; though we report separate performance metrics on this corpus, it should be noted that these emails were also counted as part of the spam corpus. (Note: the 'malware SC rate' refers to products blocking the emails as spam and not necessarily detecting the attachments as malicious.)

Emails were sent to the products in real time and in parallel. Though products received the email from a fixed IP address, all products had been set up to read the original sender's IP address as well as the EHLO/HELO domain sent during the SMTP transaction, either from the email headers or through an optional XCLIENT SMTP command [3]. Consequently, products were able to filter email in an environment that was very close to one in which they would be deployed in the real world.

Products running in our lab were run as virtual machines on a VMware ESXi cluster. As different products have different hardware requirements – not to mention those running on their own hardware, or those running in the cloud – there is little point comparing the memory, processing power or hardware the products were provided with; we followed the developers' requirements and note that the amount of email we receive is representative of that received by a small organization.

Although we stress that different customers have different needs and priorities, and thus different preferences when it comes to the ideal ratio of false positives to false negatives, we created a one-dimensional 'final score' to compare products. This is defined as the spam catch (SC) rate minus five times the weighted false positive (WFP) rate. The WFP rate is defined as the false positive rate of the ham and newsletter corpora taken together, with emails from the latter corpus having a weight of 0.2:

WFP rate = (#false positives + 0.2 × min(#newsletter false positives, 0.2 × #newsletters)) / (#ham + 0.2 × #newsletters)

Final score = SC rate - (5 × WFP rate)
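As a worked illustration, the two formulas above can be written as a short Python sketch. The function names are illustrative and not part of the VBSpam methodology. The sketch assumes that both the SC rate and the WFP rate are expressed as percentages, a reading that reproduces the published final scores for Axway and GFI MailEssentials from the counts in the results table.

```python
N_SPAM = 209_468      # spam emails in the corpus
N_HAM = 8_418         # legitimate emails ('ham')
N_NEWSLETTERS = 345   # newsletters


def weighted_fp_rate(ham_fps, newsletter_fps,
                     n_ham=N_HAM, n_newsletters=N_NEWSLETTERS):
    """Weighted false positive (WFP) rate, as a percentage."""
    # Newsletter false positives are capped at 20% of the newsletter corpus
    # and then weighted by 0.2, as in the formula above.
    capped = min(newsletter_fps, 0.2 * n_newsletters)
    return 100.0 * (ham_fps + 0.2 * capped) / (n_ham + 0.2 * n_newsletters)


def final_score(sc_rate, wfp_rate):
    """Final score: SC rate minus five times the WFP rate (percentages)."""
    return sc_rate - 5 * wfp_rate


def score_from_counts(true_positives, ham_fps, newsletter_fps):
    """Final score computed directly from raw counts."""
    sc_rate = 100.0 * true_positives / N_SPAM
    return final_score(sc_rate, weighted_fp_rate(ham_fps, newsletter_fps))


# Counts taken from the results tables in this report.
axway = score_from_counts(true_positives=209_245, ham_fps=0, newsletter_fps=6)
gfi = score_from_counts(true_positives=207_888, ham_fps=88, newsletter_fps=18)
print(round(axway, 2), round(gfi, 2))
```

Because the WFP rate is multiplied by five, a handful of blocked legitimate emails costs a product far more than the same number of missed spam emails.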

In addition, for each product, we measure how long it takes to deliver emails from the ham corpus (excluding false positives) and, after ordering these emails by this time, we colour-code the emails at the 10th, 50th, 95th and 98th percentiles:

| Colour | Delivery time |
|---|---|
| Green | up to 30 seconds |
| Yellow | 30 seconds to two minutes |
| Orange | two to ten minutes |
| Red | more than ten minutes |
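The colour-coding can be sketched in Python as follows. This is an illustration only: the methodology does not say how the percentiles are computed or which band a boundary value falls into, so the nearest-rank method and the inclusive upper bounds used here are assumptions, as are the function names and the sample delivery times.

```python
def colour_for(seconds):
    """Map a delivery time in seconds to a speed colour."""
    # Assumption: each band includes its upper bound.
    if seconds <= 30:
        return "green"
    if seconds <= 120:
        return "yellow"
    if seconds <= 600:
        return "orange"
    return "red"


def nearest_rank(ordered, percentile):
    """Value at the given percentile of a sorted list (nearest-rank method)."""
    # Integer arithmetic for ceil(percentile * n / 100) avoids float rounding.
    rank = max(1, (percentile * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def speed_colours(delivery_times):
    """Colours at the 10th, 50th, 95th and 98th percentiles."""
    ordered = sorted(delivery_times)
    return {p: colour_for(nearest_rank(ordered, p)) for p in (10, 50, 95, 98)}


# Hypothetical delivery times in seconds for 100 correctly delivered ham emails.
sample_times = [5] * 90 + [45] * 6 + [300] * 3 + [900]
print(speed_colours(sample_times))
```

With these sample times the 10th and 50th percentiles fall in the green band, the 95th in yellow and the 98th in orange; the single very slow email does not affect any of the four percentiles.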

Products earn VBSpam certification if the value of the final score is at least 98 and the 'delivery speed colours' at 10 and 50 per cent are green or yellow and that at 95 per cent is green, yellow or orange.

Meanwhile, products earn a VBSpam+ award if they combine a spam catch rate of 99.5% or higher with no false positives, no more than 2.5% false positives among the newsletters, and 'delivery speed colours' of green at 10 and 50 per cent and green or yellow at 95 and 98 per cent.
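The two sets of award criteria can be summarized as a small decision function. The Python sketch below is an illustration rather than the official award logic: the function name and the all-green speed colours in the examples are hypothetical, and the code assumes that VBSpam+ is only awarded to products that also meet the VBSpam certification criteria.

```python
def vbspam_award(final_score, sc_rate, ham_false_positives,
                 newsletter_fp_rate, colours):
    """Return "VBSpam+", "VBSpam" or None.

    Scores and rates are percentages; `colours` maps the percentiles
    10, 50, 95 and 98 to "green", "yellow", "orange" or "red".
    """
    certified = (
        final_score >= 98
        and colours[10] in {"green", "yellow"}
        and colours[50] in {"green", "yellow"}
        and colours[95] in {"green", "yellow", "orange"}
    )
    plus = (
        sc_rate >= 99.5
        and ham_false_positives == 0
        and newsletter_fp_rate <= 2.5
        and colours[10] == "green"
        and colours[50] == "green"
        and colours[95] in {"green", "yellow"}
        and colours[98] in {"green", "yellow"}
    )
    # Assumption: VBSpam+ requires meeting the certification criteria as well.
    if certified and plus:
        return "VBSpam+"
    return "VBSpam" if certified else None


# Hypothetical speed colours, used only for illustration.
ALL_GREEN = {10: "green", 50: "green", 95: "green", 98: "green"}

fortimail = vbspam_award(99.997, 99.997, 0, 0.0, ALL_GREEN)  # FortiMail's figures
eset = vbspam_award(99.94, 99.999, 1, 0.0, ALL_GREEN)        # ESET's figures
gfi_award = vbspam_award(93.85, 99.25, 88, 5.2, ALL_GREEN)   # GFI's figures
print(fortimail, eset, gfi_award)
```

A single false positive in the ham corpus is enough to rule out VBSpam+, which is why a product with a near-perfect catch rate can still end up with the standard award.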